Data Wrangling vs Data Cleaning: Know What They Mean & Their Key Differences

Most organizations depend on the quality and accuracy of their data analysis, which in turn depends on thorough data preparation. Raw data is messy: as collected, it often contains errors, inconsistencies, and irrelevant information. It’s not usable in that form and needs preparation and organization before it can deliver any value.

This is where data wrangling and data cleaning come into play. The terms are sometimes used interchangeably, but they have different purposes and are performed at different stages in the process. In this article, we’ll clarify the differences between data wrangling and data cleaning, explain their roles, and show how they complement each other to prepare data for insightful analysis.

What is data wrangling?

Data wrangling, also called data munging, is the process of mapping and transforming raw data into a usable format to prepare it for analysis. It involves steps that restructure, enrich, and integrate the data, making it easier to explore and analyze. Unlike data cleaning, which corrects and removes errors, data wrangling optimizes the data and converts it into the proper format.

The data wrangling process

Data wrangling involves a series of steps to convert the raw data into the state it needs to be for analysis. This process transforms and organizes the data, making it more useful and accessible. We’ve outlined the steps below:

- Discovery: Before collecting data, you have to know what kind of data you need. Discovery identifies the sources and determines their structure and the content they hold. This informs what data to extract and how to handle it throughout the wrangling process. Data profiling and exploratory data analysis are common methods in this step.

- Data extraction: Raw data is gathered from databases, files, external APIs, data lakes, web scraping, etc., to collect all the relevant data that’s needed for analysis. The data sources can be on-premises, in the cloud, or both.

- Data cleaning: Data cleaning is a step within data wrangling, but it’s also a distinct process in its own right, which is why this article covers both. Data cleaning identifies and fixes problems within the dataset: removing duplicates, correcting errors, and handling missing values. More on this later.

- Data exploration: This step involves examining the data to understand its structure, patterns, and anomalies. It helps identify relationships within the data and potential issues that need to be addressed before analysis.

- Data transformation: Data transformation converts the data into the desired format or structure. This includes normalizing, converting data types, aggregating, and creating new calculated fields. The goal is to make the data consistent and suitable for analysis.

- Data loading: This final step involves loading the transformed data into a destination, like a data warehouse, database, or analytical tool. This step makes the data accessible for analysis and reporting.
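
To make the flow more concrete, here is a minimal sketch of four of the steps above (extraction, cleaning, transformation, and loading) using only Python's standard library. The sample data, the column names, and the `orders` table are assumptions made up for illustration; a real pipeline would read from your own sources and load into your own destination.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; in practice this would come from a file, API, or database.
RAW_CSV = """order_id,customer,amount,order_date
1001,alice,19.99,2024-01-05
1002,BOB,5.00,2024-01-05
1002,BOB,5.00,2024-01-05
1003,carol,,2024-01-06
"""

def extract(text):
    """Extraction: read raw rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def clean(rows):
    """Cleaning: drop duplicate order IDs and rows with a missing amount."""
    seen, result = set(), []
    for row in rows:
        if row["order_id"] in seen or not row["amount"]:
            continue
        seen.add(row["order_id"])
        result.append(row)
    return result

def transform(rows):
    """Transformation: convert data types and standardize capitalization."""
    return [
        (int(r["order_id"]), r["customer"].title(), float(r["amount"]), r["order_date"])
        for r in rows
    ]

def load(records, conn):
    """Loading: write the prepared records to a destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, order_date TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)", records)
    conn.commit()

# An in-memory SQLite database stands in for the warehouse or analytical tool.
conn = sqlite3.connect(":memory:")
load(transform(clean(extract(RAW_CSV))), conn)
```

Dropping rows with a missing amount is only one way to handle missing values; depending on the analysis, filling in a default or flagging the row for review may be a better choice.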

Examples of data wrangling

- E-commerce: Data wrangling is used to consolidate e-commerce data from different sources, including customer databases, sales transactions, and web analytics. An e-commerce company might need to pull customer data from a CRM system, sales data from a POS (point of sale) system, and website behavior data from Google Analytics. Data wrangling integrates these disparate data sources, cleans the data by removing duplicates and correcting errors, and transforms it into a unified dataset that provides insights into customer behavior, sales trends, and marketing effectiveness.

- Healthcare: Healthcare organizations use data wrangling to manage patient records, treatment histories, and medical research data. For instance, a hospital might need to integrate patient data from electronic health records (EHRs), lab results from diagnostic systems, and research data from clinical trials. Data wrangling helps to identify and correct missing values and inconsistencies, normalize the data into standard formats, and finally merge it into a comprehensive dataset. This unified dataset can be used to analyze patient outcomes, track treatments' effectiveness, and identify disease progression patterns.

- Financial services: In financial services, data wrangling is an important element of risk management, fraud detection, and regulatory compliance. A financial institution might need to analyze transaction data from banking systems, customer data from CRM systems, and market data from financial databases. In this context, data wrangling removes erroneous transactions, transforms the data into a consistent format, and integrates it into a centralized database. The prepped data is then used to detect fraudulent activities, assess credit risk, and ensure compliance with regulatory requirements.

- Marketing and advertising: Marketing agencies routinely handle data from multiple channels, including social media, email campaigns, and advertising platforms. Data wrangling helps to aggregate data from these disparate sources, clean the data by removing duplicates and correcting errors, and transform it into a format suitable for analysis. This enables marketers to measure campaign performance, understand customer engagement, and optimize marketing strategies based on data-driven insights.
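
As a rough illustration of the integration work these examples describe, the sketch below combines customer records from one system with sales records from another, along the lines of the e-commerce scenario. The record layouts, the field names, and the choice of email address as the join key are all assumptions for the example, not a description of any particular CRM or POS product.

```python
from collections import defaultdict

# Hypothetical records from two sources; field names are assumptions.
crm_customers = [
    {"email": "Ana@Example.com ", "name": "Ana Ruiz"},
    {"email": "ben@example.com", "name": "Ben Okafor"},
]
pos_sales = [
    {"customer_email": "ana@example.com", "total": 40.0},
    {"customer_email": "ana@example.com", "total": 15.5},
    {"customer_email": "ben@example.com", "total": 9.0},
    {"customer_email": "unknown@example.com", "total": 3.0},
]

def normalize_email(value):
    """Standardize the join key so the same customer matches across systems."""
    return value.strip().lower()

def unify(customers, sales):
    """Aggregate sales per customer and attach the totals to the CRM records."""
    totals = defaultdict(float)
    for sale in sales:
        totals[normalize_email(sale["customer_email"])] += sale["total"]
    unified = []
    for customer in customers:
        key = normalize_email(customer["email"])
        unified.append(
            {"email": key, "name": customer["name"], "total_spent": totals.get(key, 0.0)}
        )
    return unified

unified = unify(crm_customers, pos_sales)
```

Sales that match no known customer (the `unknown@example.com` row here) are silently left out of the unified dataset in this sketch. In practice you would likely want to log or review them rather than discard them.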

Benefits of data wrangling

- Enhanced data quality: Data wrangling ensures that data is accurate, consistent, and complete. By addressing errors, missing values, and inconsistencies during the wrangling process, the data becomes more reliable for analysis. High-quality data leads to more accurate insights and better decision-making.

- Better data efficiency: Data wrangling helps streamline data preparation by automating repetitive tasks and integrating data from multiple sources. This reduces the time and effort required to prepare data for analysis, allowing data professionals to focus on gaining actionable insights.

- Cost and effort savings: Data wrangling reduces the manual effort of preparing data before it can be analyzed, saving time and resources and lowering costs. This further allows teams to better allocate their resources toward more valuable analysis and strategy.

- Improved data usability: Data wrangling transforms raw data into a structured format, making access and analysis easier and more effective. This enables data scientists and analysts to quickly access and analyze the standardized and enriched data sets without having to check for errors or other anomalies themselves.

- Easier data analysis: Data wrangling preprocesses and organizes data in a way that simplifies analysis. Clean, well-structured data sets reduce the complexity of analytical tasks and improve the accuracy of the results. This facilitates better model building, trend analysis, and data visualization.

What is data cleaning?

Data cleaning, also known as data cleansing, is the process of identifying and correcting errors, inconsistencies, and inaccuracies within a dataset to improve its quality and reliability. For example, duplications, typos, misspellings, or incorrect values, such as variations in how first and last names are recorded (e.g., ‘Rob Jones’ instead of ‘Robert Jones’), can cause data inconsistencies. Data cleaning detects these inconsistencies and errors and adjusts them to the correct value.
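
The name example can be sketched in a few lines of Python. The `NICKNAMES` lookup and the `canonical_name` helper are hypothetical and exist only for this illustration; a real project would maintain its own list of known variants and would need more careful rules for names with unusual capitalization.

```python
# Hypothetical lookup of known variants; a real project would maintain its own list.
NICKNAMES = {"rob": "Robert", "bob": "Robert", "liz": "Elizabeth"}

def canonical_name(full_name):
    """Collapse extra whitespace, fix capitalization, and expand known nicknames."""
    parts = full_name.split()
    if not parts:
        return ""
    first = NICKNAMES.get(parts[0].lower(), parts[0].title())
    rest = [part.title() for part in parts[1:]]
    return " ".join([first] + rest)

records = ["Rob Jones", "robert  jones", "Robert Jones"]
# All three variants collapse to a single canonical value.
cleaned = sorted({canonical_name(name) for name in records})
```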

The data cleaning process

Each step of the data cleaning process is an important part of ensuring that data is ready for analysis. We’ll break it down here:

- Data inspection: Data inspection involves reviewing the dataset to identify errors, inconsistencies, and missing values. It helps to understand the extent and nature of data quality issues and pinpoint specific problems that need to be addressed to improve the dataset's overall quality.

- Data validation: Checking the data against predefined rules or constraints helps identify invalid entries, such as values outside a predetermined format or range, and tags them for a particular action. Examples include a date in the wrong format (DD/MM/YY instead of MM/DD/YY, or vice versa), a postal code that doesn’t follow the proper format, or a transaction amount with the decimal point in the wrong place.

- Data correction/deduplication: Data correction fixes errors identified during inspection and validation, like correcting typos and standardizing formats, ensuring data entries are accurate. Deduplication checks for and removes duplicate records so that each entry in the dataset is unique.

- Data standardization: Data standardization ensures that the data is in a consistent format, making it easier to analyze and compare. This process might involve converting all date formats to a unified form, ensuring consistent capitalization for text entries, or standardizing measurement units across the dataset.

- Data transformation: While data transformation is part of data wrangling, it’s also important to data cleaning. Transformation converts data into a format suitable for analysis, which could be normalizing data ranges, aggregating data, or creating new calculated fields. This step helps align the data with subsequent analysis or reporting requirements.
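
Here is one way the validation, standardization, and deduplication steps might look in code. The rules are assumptions chosen to mirror the examples above (MM/DD/YY dates, five-digit postal codes, amounts with two decimal places), and the record fields are made up for the sketch; your own rules will depend on your data.

```python
import re
from datetime import datetime

# Hypothetical rules: MM/DD/YY dates, 5-digit postal codes, amounts with two decimals.
POSTAL_RE = re.compile(r"\d{5}")
AMOUNT_RE = re.compile(r"\d+\.\d{2}")

def validate(record):
    """Validation: return the names of the fields that break a rule."""
    problems = []
    try:
        datetime.strptime(record["date"], "%m/%d/%y")
    except ValueError:
        problems.append("date")
    if not POSTAL_RE.fullmatch(record["postal_code"]):
        problems.append("postal_code")
    if not AMOUNT_RE.fullmatch(record["amount"]):
        problems.append("amount")
    return problems

def standardize(record):
    """Standardization: ISO dates, trimmed title-case names, numeric amounts."""
    return {
        "name": record["name"].strip().title(),
        "date": datetime.strptime(record["date"], "%m/%d/%y").date().isoformat(),
        "postal_code": record["postal_code"],
        "amount": float(record["amount"]),
    }

def clean(records):
    """Split records into cleaned rows and rejects, removing duplicates."""
    cleaned, rejected, seen = [], [], set()
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))  # tagged for follow-up
            continue
        row = standardize(record)
        key = tuple(row.values())
        if key not in seen:  # deduplication
            seen.add(key)
            cleaned.append(row)
    return cleaned, rejected

raw = [
    {"name": " ana ruiz", "date": "01/31/24", "postal_code": "27601", "amount": "12.50"},
    {"name": "Ana Ruiz", "date": "01/31/24", "postal_code": "27601", "amount": "12.50"},
    {"name": "Ben Okafor", "date": "31/01/24", "postal_code": "2760", "amount": "1250"},
]
cleaned, rejected = clean(raw)
```

Standardizing before deduplicating matters here: the first two records only become recognizable as duplicates once the name's whitespace and capitalization are normalized. Invalid records are set aside with the list of failed rules rather than being deleted, so they can be corrected later.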

Benefits of data cleaning

- Error elimination: Data cleaning removes inaccuracies and errors that could otherwise lead to misleading results and incorrect analyses. It ensures that the data is accurate and reliable by identifying and correcting errors, such as typos, duplicate entries, and incorrect values.

- Improved data integrity: Standardizing and correcting data improves the overall integrity of the dataset. Consistent and accurate data helps generate meaningful insights for making informed decisions. High data integrity also facilitates proper data integration and interoperability between different systems and datasets.

- Enhanced decision-making: Clean data provides a solid foundation for accurate analysis, leading to better-informed business decisions and strategies. When decision-makers have access to clean, reliable data, they can confidently develop and implement strategies based on accurate insights, ultimately driving better business outcomes.

- Increased efficiency: By automating and streamlining the data cleaning process, organizations save significant amounts of time and resources that would otherwise be spent on manual data correction. This allows data professionals to focus more on analysis and less on data preparation, speeding up the overall data processing workflow.

- Cost savings: Improved data quality reduces the likelihood of errors and inconsistencies that can lead to costly mistakes and rework. Clean data minimizes the risk of incorrect reporting or misinformed decision-making, which can have financial repercussions.

Data wrangling vs data cleaning: 5 key differences

To better describe the distinctions between data wrangling and data cleaning, let’s compare their key aspects:

| Aspect | Data Wrangling | Data Cleaning |
| --- | --- | --- |
| Definition | Transforming and mapping data from one format to another | Identifying and correcting errors within the data |
| Objective | Making data accessible and suitable for analysis | Ensuring data accuracy and consistency |
| Tasks | Extraction, exploration, transformation, loading | Inspection, validation, correction, deduplication |
| Tools | Python, R, Jupyter Notebooks, Trifacta | OpenRefine, Talend, Data Ladder, SAS Data Quality |
| Iteration | Often iterative and cyclical | Involves continuous feedback loops |

How they complement each other

Data wrangling and data cleaning are complementary processes important for preparing high-quality data for analysis. While data cleaning focuses on ensuring the data is accurate and consistent by correcting errors and removing duplicates, data wrangling involves transforming and structuring this clean data to make it suitable for analysis. Typically, data cleaning is an initial step within the broader data wrangling process, ensuring the foundational data is accurate before any transformation and restructuring is performed. This ensures that the final dataset is both reliable and ready for analysis.

Overlapping tasks

There can be some overlap between data wrangling and data cleaning tasks. For example, data transformation, which is part of both data wrangling and data cleaning, involves converting data into a format usable for analysis. Both processes may also include steps like data validation and standardization, which shows how closely the two are intertwined in producing high-quality data.

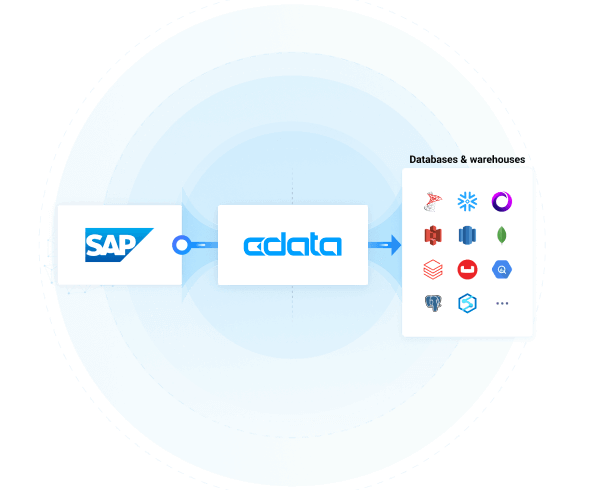

Connect instantly to your favorite data solutions with CData

Whether wrangling or cleaning, CData Connect Cloud simplifies data integration with seamless connectivity to hundreds of cloud applications and data sources—no IT support needed. Enable real-time data access from anywhere, anytime.

Try CData Connect Cloud today

Get a free 30-day trial of CData Connect Cloud to discover how to improve your data management processes with live data connectivity solutions in the cloud.