Dissecting the Modern Data Stack

As the “modern data stack” concept turns a decade old, many data professionals recognize that it must evolve to match today’s data challenges. The mass migration from on-premises systems to faster, more flexible cloud-first operations confronts the modern data stack with a growing roster of data silos, and technical talent shortages leave many teams unable to bridge them.

To keep data-driven operations adaptable and whole, your organization may want to consider how composable connectivity and self-service development can reshape the modern data stack to meet today’s business challenges.

Let’s explore the history of the data stack to understand why a better architecture is needed and how to manage the modern data stack more effectively.

The Evolving Modern Data Stack

The core challenge for the modern data stack is connecting platforms so that data can move for easy access and fast action at scale.

The legacy data stack was built on Edgar F. Codd’s relational model, a cost-saving approach to databases, as businesses adopted relational database management systems (RDBMS). Complementary technologies soon built on the RDBMS to enable end-to-end data workflows, including the Structured Query Language (SQL), data reporting platforms, and integration tools.

Explosive data growth eventually sent storage, computing, and cross-format programming costs for on-premises data stacks skyrocketing. In response, waves of cloud-centric data solutions arrived and shaped the affordable, lower-maintenance modern data stack, but some on-premises systems are too disruptive to replace.

Today’s data stacks lack clean connectivity across a sea of on-premises and cloud data solutions. Data analysts, scientists, engineers, and automated technologies all use different data solutions across distinct business units.

As a result, business strategy suffers from gaps while data users wait for in-house programmers and ad hoc vendor solutions to unite data platforms.

Instead of requiring teams to manage a costly and complicated collection of disparate applications, a new generation of fully managed, cloud-first data solutions is lowering the modern data stack’s technical barriers.

Breaking Down the Modern Data Stack

The modern data stack includes various components for ingesting, transforming, storing, and analyzing data. These tools include:

- Data pipelines

- Cloud-based data lakes or data warehouses

- Data transformation tools

- Data analysis tools

Data Pipelines

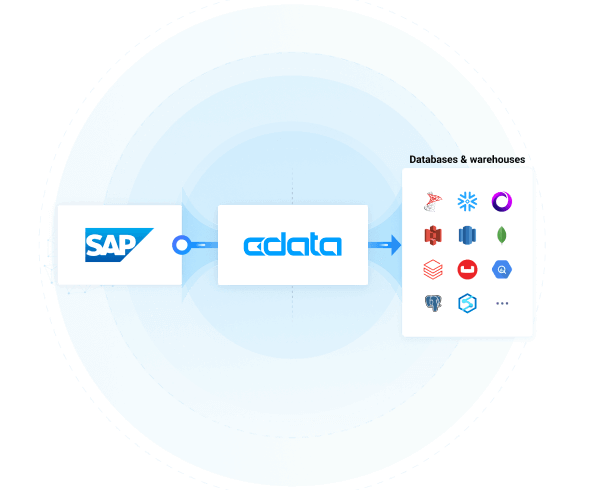

Data pipelines are pathways that make raw data actionable through efficient, scalable data integration. They are often powered by prebuilt connectors that link multiple data sources as needed, without in-house custom coding.

The modern data stack typically uses an extract, load, and transform (ELT) pipeline. It extracts data from various sources, including transactional databases, APIs, applications, social media, and website logs, then loads the raw data into a data lake, database, or data warehouse, where it is transformed on demand into formats compatible with multiple applications.
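The ELT flow above can be sketched in Python with the standard-library `sqlite3` module. This is a minimal illustration, not a production pipeline: an in-memory SQLite database stands in for the cloud warehouse, and the `extract`, `load`, and `transform` functions, the `raw_orders`/`orders` tables, and the sample records are all hypothetical. The point it shows is ordering: raw data lands first, and the transformation runs later, inside the destination, in SQL.

```python
import sqlite3

def extract():
    # Hypothetical raw records, standing in for rows pulled from an API or app.
    return [
        {"order_id": 1, "amount": "19.99", "country": "us"},
        {"order_id": 2, "amount": "5.00", "country": "DE"},
        {"order_id": 3, "amount": None, "country": "us"},
    ]

def load(conn, records):
    # Load step: land the records as-is (raw strings, nulls included) in a staging table.
    conn.execute("CREATE TABLE raw_orders (order_id INTEGER, amount TEXT, country TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :amount, :country)", records
    )

def transform(conn):
    # Transform step: runs inside the destination, in SQL, after loading.
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT order_id,
               CAST(amount AS REAL) AS amount,
               UPPER(country) AS country
        FROM raw_orders
        WHERE amount IS NOT NULL
        """
    )

conn = sqlite3.connect(":memory:")  # stands in for a cloud data warehouse
load(conn, extract())
transform(conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Running it prints the two orders that survive the transformation, with amounts cast to numbers and country codes upper-cased. The raw table still holds all three records, so the data can be re-transformed later if requirements change.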

Cloud Data Lakes and Data Warehouses

Cloud-based data lakes and data warehouses are locations where data ingestion tools send raw data.

Data lakes do not impose a schema when data is written, which lets users flexibly store data from both relational and non-relational sources and apply structure later, when the data is read. In contrast, legacy warehouses enforce a predefined schema on write, so they cannot store data from non-relational sources (i.e., unstructured or semi-structured data).
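A toy contrast between the two storage approaches, using only Python's standard library: a plain list of JSON strings plays the data lake, and an SQLite table with `NOT NULL` columns plays a schema-enforcing warehouse. The `customers` table and the sample records are hypothetical, and real lakes and warehouses are considerably more involved than this.

```python
import json
import sqlite3

# Hypothetical incoming records with inconsistent shapes (semi-structured data).
records = [
    {"id": 1, "name": "Ada", "email": "ada@example.com"},
    {"id": 2, "name": "Lin", "tags": ["vip"]},       # no email, extra field
    {"id": 3, "clicks": [{"page": "/home"}]},        # no name or email at all
]

# "Data lake": keep every record as raw JSON text; nothing is enforced on write.
lake = [json.dumps(r) for r in records]

# "Warehouse": a fixed schema is enforced when data is written.
wh = sqlite3.connect(":memory:")
wh.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT NOT NULL)"
)
rejected = []
for r in records:
    try:
        wh.execute(
            "INSERT INTO customers (id, name, email) VALUES (?, ?, ?)",
            (r.get("id"), r.get("name"), r.get("email")),
        )
    except sqlite3.IntegrityError:
        rejected.append(r["id"])  # record does not fit the predefined schema

# Schema-on-read: the lake's records are given structure only at query time.
names = [json.loads(doc).get("name", "<unknown>") for doc in lake]

print(len(lake), rejected, names)
```

The lake keeps all three records, while the warehouse accepts only the one that matches its predefined columns; the lake's records are interpreted only when a query reads them.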

The cost savings and flexibility of cloud data lakes and warehouses make them immensely popular in the modern data stack. However, they cannot fully replace every relational database and traditional warehouse; these remain popular for data analysis and for running monolithic applications.

Data Transformation

Once a warehouse or data lake receives raw data, the data must be transformed and unified. Data transformation includes cleaning, filtering, and enrichment to enable subsequent analysis. The modern data stack ingests data from multiple sources, and data transformation tools let the various data types work together.
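The three transformation steps named above (cleaning, filtering, and enrichment) can be sketched as small, composable Python functions. The field names, the `REGION_BY_COUNTRY` lookup table, and the sample rows are hypothetical; in practice these steps are often written in SQL or in a dedicated transformation tool rather than in application code.

```python
from datetime import date

# Hypothetical raw rows from two sources with inconsistent formatting.
raw = [
    {"customer": "  Acme Corp ", "country": "us", "revenue": "1200", "signup": "2021-03-04"},
    {"customer": "Globex", "country": "DE", "revenue": "", "signup": "2020-11-30"},
    {"customer": "initech", "country": "US", "revenue": "450.5", "signup": "2022-07-15"},
]

# Hypothetical reference data used for enrichment.
REGION_BY_COUNTRY = {"US": "North America", "DE": "Europe"}

def clean(row):
    # Cleaning: trim whitespace, normalize case, and convert types.
    return {
        "customer": row["customer"].strip().title(),
        "country": row["country"].strip().upper(),
        "revenue": float(row["revenue"]) if row["revenue"] else None,
        "signup": date.fromisoformat(row["signup"]),
    }

def keep(row):
    # Filtering: drop rows missing a value the analysis needs.
    return row["revenue"] is not None

def enrich(row):
    # Enrichment: add a derived field looked up from reference data.
    return {**row, "region": REGION_BY_COUNTRY.get(row["country"], "Unknown")}

transformed = [enrich(r) for r in map(clean, raw) if keep(r)]
for r in transformed:
    print(r["customer"], r["region"], r["revenue"])
```

Keeping each step as a separate function makes it easy to reorder, test, or reuse steps independently as new sources join the stack.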

For instance, standards-based connectivity solutions let analysis tools read incompatible data formats as if they came from natively compatible SQL databases. This standardization allows data to be used in analysis and reporting regardless of where it comes from.
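As a rough illustration of the idea, and not of how any particular connectivity product is implemented, the sketch below exposes a hypothetical CSV export and a hypothetical JSON API payload as SQLite tables so that a single SQL query can join them. Drivers that present non-SQL sources through standards such as ODBC or JDBC generally query the source live rather than copying its data into a local database; copying simply keeps this example short. The `expose_as_table` helper is hypothetical.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sources in two incompatible formats.
CSV_EXPORT = "sku,name\nA1,Widget\nB2,Gadget\n"
JSON_PAYLOAD = '[{"sku": "A1", "qty": 3}, {"sku": "B2", "qty": 5}, {"sku": "A1", "qty": 2}]'

def expose_as_table(conn, table, rows):
    """Register a list of dicts as a SQL table so any SQL client can query it.

    Table and column names are trusted here; real code should validate identifiers.
    """
    cols = list(rows[0].keys())
    conn.execute(f"CREATE TABLE {table} ({', '.join(cols)})")
    placeholders = ", ".join("?" for _ in cols)
    conn.executemany(
        f"INSERT INTO {table} VALUES ({placeholders})",
        [tuple(r[c] for c in cols) for r in rows],
    )

conn = sqlite3.connect(":memory:")
expose_as_table(conn, "products", list(csv.DictReader(io.StringIO(CSV_EXPORT))))
expose_as_table(conn, "orders", json.loads(JSON_PAYLOAD))

# The analysis side speaks only SQL, regardless of each source's native format.
query = """
    SELECT p.name, SUM(o.qty) AS units
    FROM orders o JOIN products p ON p.sku = o.sku
    GROUP BY p.name ORDER BY p.name
"""
print(conn.execute(query).fetchall())
```

The query never sees CSV or JSON; it only sees the `products` and `orders` tables.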

Data Analysis

Data analysis tools enable a variety of visualization and business intelligence solutions. These tools empower users to format clean, aggregated data into visualizations, reports, and dashboards. You can further analyze the information according to your specific needs.
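Aggregation is the step that turns clean rows into something a chart or dashboard can display. Here is a minimal sketch in plain Python, with hypothetical `sales` rows and hypothetical `revenue_by` and `format_report` helpers; a BI tool would typically perform the same grouping and formatting through its own interface or through SQL.

```python
from collections import defaultdict

# Hypothetical clean, transformed rows ready for reporting.
sales = [
    {"region": "Europe", "month": "2024-01", "revenue": 300.0},
    {"region": "Europe", "month": "2024-02", "revenue": 200.0},
    {"region": "North America", "month": "2024-01", "revenue": 400.0},
    {"region": "North America", "month": "2024-02", "revenue": 100.0},
]

def revenue_by(rows, key):
    # Aggregate: total revenue per distinct value of `key` (e.g. region or month).
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row["revenue"]
    return dict(sorted(totals.items()))

def format_report(totals):
    # Present: one line per group with its share of the grand total, then a total line.
    grand = sum(totals.values())
    lines = [f"{k:<15}{v:>10.2f}{v / grand:>8.0%}" for k, v in totals.items()]
    return "\n".join(lines + [f"{'Total':<15}{grand:>10.2f}"])

by_region = revenue_by(sales, "region")
print(format_report(by_region))
```

Passing `"month"` instead of `"region"` regroups the same rows by month without changing the rest of the code.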

Cloud-based reporting and analytics tools offer greater flexibility, easier collaboration, stronger security, and lower costs than their on-premises counterparts, allowing everyone across the organization to build reports and analyze data from wherever they are.

The Evolution of the Modern Data Stack

As it stands today, the modern data stack is a haphazard collection of specialized products that were not originally designed to work together. It therefore needs a modular design that pairs these specialized solutions for ingesting, transforming, storing, and analyzing data while also extending to many other categories.

Using a standards-based approach to connectivity, your organization can unite the modern data stack into a composable architecture whose parts interoperate seamlessly. Three key changes allow connectivity and integration tools to future-proof the modern data stack:

- Composable connectivity reduces in-house coding and maintenance by building prebuilt, fully managed connections around universal data standards like SQL. This “glue” helps disparate on-premises and cloud data platforms become plug-and-play building blocks of the data stack. Unplugging connections is just as easy, which makes the data stack less dependent on older components.

- Self-service data access packages composable connections into scalable tools designed around universally accessible interfaces that plug seamlessly into a broad range of applications. Non-technical data users can bridge incompatibilities across the data stack and build their own custom solutions.

- Location-agnostic deployment allows these connectivity and integration tools to live in and unite both cloud and on-premises environments. As a result, data movement is no longer restricted by location-based silos.
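The plug-and-play idea in the list above can be illustrated with a small interface sketch, assuming a hypothetical `Connector` protocol that every source adapter implements. `LegacyDbConnector`, `CloudAppConnector`, and `DataStack` are illustrative names only, not part of any real product's API. The point is that consumers depend on one shared interface, so connections can be added or removed without changing downstream code.

```python
from typing import Dict, Iterable, List, Protocol

class Connector(Protocol):
    """Hypothetical common interface that every source adapter implements."""
    def rows(self, table: str) -> Iterable[dict]: ...

class LegacyDbConnector:
    # Stand-in for an on-premises system.
    def rows(self, table: str) -> Iterable[dict]:
        return [{"id": 1, "source": "on-prem"}]

class CloudAppConnector:
    # Stand-in for a cloud SaaS application.
    def rows(self, table: str) -> Iterable[dict]:
        return [{"id": 2, "source": "cloud"}]

class DataStack:
    """Consumers depend only on the Connector interface, never on a concrete system."""
    def __init__(self) -> None:
        self._connectors: Dict[str, Connector] = {}

    def plug(self, name: str, connector: Connector) -> None:
        self._connectors[name] = connector

    def unplug(self, name: str) -> None:
        self._connectors.pop(name, None)

    def read_all(self, table: str) -> List[dict]:
        # Same call regardless of where each source lives.
        return [row for c in self._connectors.values() for row in c.rows(table)]

stack = DataStack()
stack.plug("erp", LegacyDbConnector())
stack.plug("crm", CloudAppConnector())
print(stack.read_all("customers"))  # rows from both sources
stack.unplug("erp")                 # retire the legacy part without touching consumers
print(stack.read_all("customers"))
```

In a real stack, the shared interface would more likely be a standard such as SQL over ODBC or JDBC than a Python protocol, but the decoupling principle is similar.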

While the modern data stack’s cloud-based approach provides attractive benefits that traditional monolithic methods lack, it also introduces unique challenges. Your organization must learn to manage a growing roster of sources, destinations, and data delivery tasks across your entire data ecosystem.

Modularizing the Modern Data Stack

With a composable data architecture, the modern data stack can fill its gaps with right-sized tools, keeping costs and complexity under control. Data can be transformed and moved regardless of format or structure, and staff no longer need special skills to design new data pathways.

CData connectivity solutions simplify how you deploy and manage pipelines across your modern data stack. From prebuilt connectors to real-time source-to-warehouse/lake data replication, and even cloud-based data virtualization, you choose the solutions you need — exactly where you need them.

To learn more about CData’s approach to cloud data solutions and discover the benefits an experienced team offers, reach out to a solutions representative today.