Databricks Drivers & Connectors for Data Integration

Connect to Databricks from reporting tools, databases, and custom applications through standards-based drivers. Easily integrate Databricks data with BI, reporting, analytics, and ETL tools, as well as custom solutions.

Connect to Databricks Data from Anywhere!

Easily access live Databricks data from BI, Analytics, Reporting, ETL, & Custom Apps.

BI & Analytics

Our drivers offer the fastest and easiest way to connect real-time Databricks data with BI, analytics, reporting and data visualization technologies. They provide unmatched query performance, comprehensive access to Databricks data and metadata, and seamlessly integrate with your favorite analytics tools.

LEARN MORE: Connectivity for BI & Analytics

Popular BI & Analytics Integrations

ETL, Replication, & Warehousing

Our Databricks integration solutions, from drivers and adapters that add Databricks connectivity to your favorite ETL tools to dedicated ETL/ELT tools for Databricks data integration, provide robust, reliable, and secure data movement.

Connect your RDBMS or data warehouse with Databricks to facilitate operational reporting, offload queries and increase performance, support data governance initiatives, archive data for disaster recovery, and more.

Popular Data Warehousing Integrations

Data Management

Because our drivers support standard database interfaces such as ODBC, JDBC, and ADO.NET, as well as wire-protocol interfaces for SQL Server and MySQL, you can connect to and manage Databricks data from a wide range of database management applications.

Popular Data Management Tool Integrations

Workflow & Automation Tools

Connect to Databricks from popular data migration, ESB, iPaaS, and BPM tools.

Our drivers and adapters provide straightforward access to Databricks data from popular applications like BizTalk, MuleSoft, SQL Server Integration Services (SSIS), Microsoft Flow, Power Apps, Talend, and many more.

Popular Workflow & Automation Tool Integrations

Developer Tools & Technologies

Our Databricks drivers offer the easiest way to integrate with Databricks from anywhere. They provide a data-centric model for Databricks that dramatically simplifies integration, allowing developers to build higher-quality applications faster than ever before. Learn more about the benefits for developers:

- Pragmatic API Integration: from SDKs to Data Drivers

- Data APIs: Gateway to Data Driven Operation & Digital Transformation

Popular Developer Integrations

Data Virtualization

Our drivers provide a virtual database abstraction on top of Databricks data and support popular data virtualization features, such as query federation, through advanced query delegation (predicate pushdown) capabilities.
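To make query federation with pushdown concrete, here is a minimal Python sketch. It is not how CData's drivers are implemented: two in-memory SQLite databases stand in for separate remote sources, and the table and column names (`orders`, `customers`, `total`) are hypothetical. Each source receives its own filter in SQL, so only matching rows leave it, and the join happens in the virtualization layer.

```python
import sqlite3


def make_source(ddl_and_rows: str) -> sqlite3.Connection:
    """Create an in-memory SQLite database that stands in for one remote source."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(ddl_and_rows)
    return conn


def federated_join(orders_db, customers_db, min_total):
    """Join orders and customers held in two separate (hypothetical) sources."""
    # Push the predicate down: only orders meeting the threshold leave the source.
    orders = orders_db.execute(
        "SELECT customer_id, total FROM orders WHERE total >= ?", (min_total,)
    ).fetchall()
    ids = sorted({customer_id for customer_id, _ in orders})
    if not ids:
        return []
    # Push a key filter to the second source so only referenced customers are fetched.
    placeholders = ",".join("?" for _ in ids)
    names = dict(
        customers_db.execute(
            f"SELECT id, name FROM customers WHERE id IN ({placeholders})", ids
        ).fetchall()
    )
    # Hash join in the virtualization layer, keyed on customer id.
    return sorted((names[cid], total) for cid, total in orders if cid in names)


orders_db = make_source(
    "CREATE TABLE orders (customer_id INTEGER, total REAL);"
    "INSERT INTO orders VALUES (1, 50.0), (2, 500.0), (1, 900.0);"
)
customers_db = make_source(
    "CREATE TABLE customers (id INTEGER, name TEXT);"
    "INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex');"
)
print(federated_join(orders_db, customers_db, 100))  # [('Acme', 900.0), ('Globex', 500.0)]
```

Filtering at each source before joining keeps the amount of data moved across the network proportional to the result rather than to the size of the underlying tables.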

Learn more about how our tools can be used in popular data virtualization scenarios below:

Popular Data Virtualization Tool Integrations

When Only the Best Databricks Drivers Will Do

See what customers have to say about our products and support.

Unparalleled support ...

"The support I received was unparalleled to that of any other company I have worked with (and there have been many). Your combination of a first class product with first class support has made this vet [...]"

- M. Wojtowicz

Support Gets Two Thumbs Up ...

"Two thumbs up for your support team in my book. I would like to once again give many thanks to your support team for their speedy responses to my emails and for their vast technical skills."

- Pablo G.

No hesitation in recommending you to anybody. ...

"I have recently been in contact with your support which has been offering support in attempting to get the CData ODBC Sage 50 UK driver to working on our PC with Sage. Support has been fantastic and i [...]"

- Jon Sinkins, Lorna Wiles Textiles

Folks that know what they are doing. ...

"I am very pleased with your ODBC driver for QuickBooks Online and recommended it to another developer down in Florida that purchased both the desktop and server versions of this driver. I am also impr [...]"

- Pete Sass, Griffin Computers

Your support is amazing ...

"You guys are awesome...Really..Support is amazing...Products are fantastic."

- Paul Deraval

Nothing short of fantastic ...

"Your products are nothing short of fantastic. I've been working with them for almost 5 days and I have my enterprise application completely functioning with your tools."

- Tim Scheutzow

It works beautifully ...

"Thank you so much for your help! You understood exactly what I needed and made it easy for me to implement in my own environment. It works beautifully."

- Dwight Thompson, SirsiDynix

WOW!... you helped me in less than 2 minutes ...

"WOW! I called your tech support today, and got a real live person who knew what I was talking about and helped me in less than 2 minutes. Thank you so much!"

- Richard Brown, Data West Corporation

Impressive quality ...

"I am always impressed with the quality of your software and the extremely helpful and professional demeanor of your company."

- P. Catasus

A pleasure to work with ...

"It has been a real pleasure to deal with professionals like you, who care about their product and their clients."

- B. McNeill

Outstanding products ...

"You have got to be the most jacked up company in the USA - this was the fastest response I've ever had from anyone anywhere! Thank you very much for your support - and for your outstanding products."

- R. Gould-King

Makes QuickBooks integration simple ...

"Your QuickBooks Adapter is an awesome product which makes Quickbooks integration a simple process. There is a bit of a learning curve and it helps to know more about QuickBooks than I did when I began [...]"

- Ron Clarke

I love how I can define my own transactions ...

"I spent the majority of yesterday experimenting, and this looks great! I dove deep into the .rsd files, and am pretty comfortable with how things work. Very flexible, and I love how I can define my [...]"

- Gerry Miller

Saved us literally weeks of SharePoint development time ...

"Our demo license has already saved Jennie and I literally weeks of development time over the native SharePoint methods for doing these types of integrations."

- Michael Schenkel

Your product just plain works, and works great! ...

"I've tried a couple other ODBC connectors and they all seem to have some hangups while setting up... except yours. Your product just plain works, and works great at that."

- James Grant, Workflow Masters

Quite possibly the best $ I've ever spent. ...

"1. Did a year's worth of bank transaction data entry for one client in about two hours - last time I did this for the same client, it was over 15 hours of horrible manual 10-keyed data entry. 2. I [...]"

- Joshua Hartley, Guardian Financial Inc.

The CData Google Excel Add-In is a masterpiece ...

"Your Google Excel Add-In is a masterpiece. I work 6 days a week and keep my calendar on Google Calendar. I have an old desktop and no printer. Getting the Google Calendar exported to Excel helps me im [...]"

- Kumar Iyer

Your product performs flawlessly. ...

"I found that the CData Excel Add-In meets my needs the best and I have purchased/licensed that. I’ve already put it to good use in editing and batch-updating Quickbooks tables and it has performed fla [...]"

- Klaus Schmidsrauter

Worked perfectly and was completely hassle free. ...

"I am using this component to import data from multiple MySQL databases into a SQL Server so I can combine data from both sources to create a variety of SSRS reports. I attempted to do this with the b [...]"

- Robert Clancy, Direct Success, Inc.

Good support for NetSuite ...

"We have tried using other SSIS packages for NetSuite, and other SuiteTalk-based ETL tools for NetSuite, and have found most of them to have quite poor support for NetSuite, and many issues with connec [...]"

- Scott VonDuhn, CommerceHub

Connecting SAS to Salesforce worked brilliantly ...

"I have been using the ODBC driver with SAS to connect to Salesforce over the last two days and it has worked brilliantly. It was quick and easy to install. There is very little documentation on the [...]"

- Leonard Scuderi

Your tools are a literal godsend ...

"Your tools are a literal godsend... I was all set to build a FreshBooks-Excel connector this weekend until I found yours. Just awesome. Also kudos for great tech support!"

- Jason Williscroft

You guys have hit the nail on the head with this one ...

"I love the ease of use, the simplicity of your product. Trust me I have test driven almost everything on the market. For native integration I think you guys have hit the nail on the head with this one [...]"

- Christopher Haigood, McKesson

Whatever you do to get the data out so fast using the QB APIs is awesome! ...

"Whatever CDATA do to get the data out so fast using the QB APIs is awesome. It literally takes 3-8 secs to run through the 68K+ orders in our QB file and stage into SQL. It is certainly worth the co [...]"

- Rob Mariani, Aquatap Plumbing Pty Ltd

NetSuite Replication - Great Product and Outstanding Support! ...

"We chose CData Data Sync for NetSuite to replicate our NetSuite cloud data to a local database instance for reporting. Despite our initial inexperience with NetSuite, the CData support people were ext [...]"

- Bill Border, Compassion International

Fastest reads of any NoSQL Driver ...

"I have tested MongoDB drivers from other vendors and so far the [CData ODBC Driver for Mongo] has had the quickest reads."

- Douglas Menger, Yupo Corporation

In a business world void of quality customer service, CData has proven that their customers matter to them! ...

"You quickly addressed my questions and helped provide additional guidance which enabled me to see the full benefit of your product. As an accountant who also happens to be a computer geek, I was able [...]"

- Mathew Fulton

Being able to use standard SQL instead of APIs is awesome! ...

"We liked that any engineers with RDBMS/SQL knowledge could use the CData Drivers without having to learn anything new. Being able to use standard SQL instead of APIs is awesome. As professional engine [...]"

- Takayuki Kobayashi, Fujitsu FSAS

A plug and play experience. ...

"Integrating with the providers turned out to be a breeze. Thanks to the clean class model and the deep integration into .NET it was like a plug and play experience. Our customers will love this!"

- Jochen Bartlau, combit Software

Free or Low-Cost AS2 Connectivity ...

"The availability of free or low-cost AS2 connectivity (such as ArcESB) will introduce a new competitive element to the market. Vendors that charge exorbitant prices for AS2 adapters will have to deal [...]"

- L. Frank Kenny, Gartner

Cost effective solution for small businesses ...

"ArcESB does its job and is easy to work with. It is a cost effective solution for small businesses which would otherwise be excluded from the benefits of B2B messaging."

- Roger Ford, Innovise Ltd.

Setup in under 10 minutes ...

"I'm currently working on a OFTP2-based MFT-platform for the Flemish Government, and I was looking for an alternative to Mendelson to be used as a OFTP2-client by our Partners. I've got extensive exper [...]"

- Mario Peel

We're convinced there is NO finer product or support team! ...

"Without ANY background using AS2 I was able to use the excellent documentation to install it and get it going. When I ran into questions and issues due to my lack of knowledge, the ArcESB support team [...]"

- Emmett Kaericher

Top 1 or 2 percent of best companies to work with. ...

"I’ve been in the business 20+ years and you guys are in the top 1 or 2 percent of best companies to work with. Your support is just outstanding"

- Bill Border, Compassion International

The software is great! ...

"The software is great, BTW. I spent close to 16 hours struggling with OpenAS2, before finding ArcESB, which only took around 10-20 minutes."

- Will Mott, TST Water

Perfect solution for AS2 ...

"The solution works perfectly! It is a great relief to know that I don't need to worry about whether my EMA submissions are being properly and securely handled. Not only is this a great product, but th [...]"

- CEO, PhACT Corporation

Cost efficient, easy to administer, scalable AS2 solution ...

"ArcESB has provided us with a cost efficient, easy to administer, scalable solution. It is easy-to-use, easy to install and doesn't break the bank. We would absolutely recommend this product to compan [...]"

- Brad Dayhuff, Vice President / Co-Owner, Xebec Data Corporation

Frequently Asked Databricks Driver Questions

Learn more about Databricks drivers & connectors for data and analytics integration

How does the Databricks Driver work?

The Databricks driver acts like a bridge that facilitates communication between various applications and Databricks, allowing applications to read Databricks data as if it were a relational database. The Databricks driver abstracts the complexities of Databricks APIs, authentication methods, and data types, making it simple for any application to connect to Databricks data in real time through standard SQL queries.
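Because the driver exposes a standard interface, application code does not need to know what sits behind the connection. The sketch below assumes Python's DB-API 2.0 (PEP 249) as that interface and uses the built-in `sqlite3` module as a stand-in, since no Databricks driver is assumed to be installed; the `customers` table and the commented-out DSN name are hypothetical.

```python
import sqlite3
from typing import Any, Dict, List, Sequence


def run_query(conn: Any, sql: str, params: Sequence[Any] = ()) -> List[Dict[str, Any]]:
    """Run a parameterized query on any PEP 249 (DB-API 2.0) connection.

    The same function works whether `conn` comes from sqlite3, pyodbc, or another
    DB-API module; only the connect() call differs. The placeholder style ('?'
    here) depends on the module's paramstyle.
    """
    cur = conn.cursor()
    try:
        cur.execute(sql, params)
        columns = [d[0] for d in cur.description]
        return [dict(zip(columns, row)) for row in cur.fetchall()]
    finally:
        cur.close()


# Stand-in connection. With an ODBC driver this might instead be
# pyodbc.connect("DSN=MyDatabricksDSN"), where the DSN name is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, country TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Acme", "US"), (2, "Globex", "DE"), (3, "Initech", "US")],
)
print(run_query(conn, "SELECT name FROM customers WHERE country = ? ORDER BY name", ("US",)))
# [{'name': 'Acme'}, {'name': 'Initech'}]
```

Keeping the query logic behind a standard interface means swapping the data source is, in principle, a change to the connection setup rather than to the application code.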

How is using the Databricks Driver different from connecting to the Databricks API?

Working with a Databricks driver differs from connecting to Databricks through its APIs. Databricks API integrations require a software developer or other technical IT resources to build. And because APIs and services evolve constantly, you must keep maintaining your Databricks integration code after it is built.

By comparison, our Databricks Drivers offer codeless access to live Databricks data for technical and non-technical users alike. Any user can install our drivers and begin working with live Databricks data from any client application. Because our drivers conform to standard data interfaces such as ODBC, JDBC, and ADO.NET, they offer a consistent, maintenance-free interface to Databricks data. We manage all of the complexities of Databricks integration within each driver and deploy updated drivers as systems evolve, so your applications continue to run seamlessly.

If you need truly zero-maintenance integration, check out connectivity to Databricks via CData Connect Cloud. With Connect Cloud you can configure all of your data connectivity in one place and connect to Databricks from any of the available Cloud Drivers and Client Applications. Connectivity to Databricks is managed in the cloud, and you never have to worry about installing new drivers when Databricks is updated.

How is a Databricks Driver different from a Databricks connector?

Many organizations draw attention to their library of connectors. After all, data connectivity is a core capability needed for applications to maximize their business value. However, it is essential to understand exactly what you are getting when evaluating connectivity. Some vendors are happy to offer connectors that implement basic proof-of-concept (POC) level connectivity. These connectors may highlight the possibilities of working with Databricks, but often provide only a fraction of the capability. Finding real value from these connectors usually requires additional IT or development resources.

Unlike these POC-quality connectors, every CData Databricks driver offers full-featured Databricks data connectivity. The CData Databricks drivers support extensive Databricks integration, providing access to all of the Databricks data and metadata needed by enterprise integration or analytics projects. Each driver contains a powerful embedded SQL engine that gives applications easy, high-performance access to all Databricks data. In addition, our drivers offer robust authentication and security capabilities, allowing users to connect securely across a wide range of enterprise configurations. Compare drivers and connectors to read more about some of the benefits of CData's driver connectivity.

Is Databricks SQL-based?

With our drivers and connectors, every data source is essentially SQL-based. The CData Databricks driver contains a full SQL-92-compliant engine that dynamically translates standard SQL queries into Databricks API calls. Queries are parsed and optimized for each data source, pushing down as much of the request to Databricks as possible. Any logic that cannot be pushed to Databricks is handled transparently on the client side by the driver/connector engine. Ultimately, this means that Databricks looks and acts exactly like a database to any client application or tool. Users can integrate live Databricks connectivity with any software solution that can talk to a standard database.
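The split between pushed-down and client-side work can be sketched as follows. This is a toy planner, not the CData SQL engine: it assumes a hypothetical remote API that can only filter by equality on `country` and `status`, sends those predicates to the API, and evaluates everything else (here, `amount > 100`) on the client.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Predicate:
    column: str
    op: str  # "=", ">", or "<"
    value: Any


# Hypothetical capability of the remote API: equality filters on these fields only.
PUSHABLE_COLUMNS = {"country", "status"}

OPS: Dict[str, Callable[[Any, Any], bool]] = {
    "=": lambda a, b: a == b,
    ">": lambda a, b: a > b,
    "<": lambda a, b: a < b,
}


def plan(predicates: List[Predicate]) -> Tuple[List[Predicate], List[Predicate]]:
    """Split predicates into those the API can evaluate and those left for the client."""
    pushed = [p for p in predicates if p.op == "=" and p.column in PUSHABLE_COLUMNS]
    residual = [p for p in predicates if p not in pushed]
    return pushed, residual


def fake_api_call(rows, pushed):
    """Stand-in for a remote request: the 'server' applies only the pushed filters."""
    return [r for r in rows if all(r[p.column] == p.value for p in pushed)]


def execute(rows, predicates):
    pushed, residual = plan(predicates)
    fetched = fake_api_call(rows, pushed)  # filtering done remotely
    # Remaining predicates are evaluated client-side on the fetched rows.
    return [r for r in fetched if all(OPS[p.op](r[p.column], p.value) for p in residual)]


rows = [
    {"id": 1, "country": "US", "status": "open", "amount": 120},
    {"id": 2, "country": "US", "status": "closed", "amount": 80},
    {"id": 3, "country": "DE", "status": "open", "amount": 300},
    {"id": 4, "country": "US", "status": "open", "amount": 40},
]
# Equivalent to: WHERE country = 'US' AND amount > 100
preds = [Predicate("country", "=", "US"), Predicate("amount", ">", 100)]
print([r["id"] for r in execute(rows, preds)])  # [1]
```

Here the fake API returns three rows and the client keeps one. Engines of this kind commonly also try to push down projections, sorts, and limits when the source supports them.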

What data can I access with the Databricks driver?

What does Databricks integrate with?

Using the CData Databricks drivers and connectors, Databricks can be easily integrated with almost any application. Any software or technology that can integrate with a database or connect with standards-based drivers like ODBC, JDBC, ADO.NET, etc., can use our drivers for live Databricks data connectivity. Explore some of the more popular Databricks data integrations online.

Additionally, since Databricks is supported by CData Connect Cloud, we enable all kinds of new Databricks cloud integrations.

How can I enable Databricks Analytics?

Databricks analytics and cloud BI integration is supported by virtually any BI or data science tool that can use our standards-based drivers. In addition, CData provides native client connectors for popular analytics applications such as Power BI, Tableau, and Excel that simplify Databricks data integration, as well as native Python connectors for data science and data engineering projects that integrate seamlessly with popular tools like pandas, SQLAlchemy, Dash, and petl.

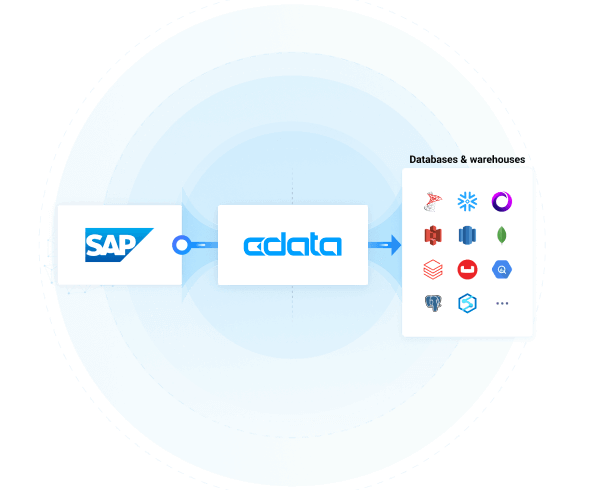

How can I support Databricks Data Integration?

Databricks data integration is typically enabled with CData Sync, a robust any-to-any data pipeline solution that is easy to set up, runs everywhere, and offers comprehensive enterprise-class features for data engineering. CData Sync makes it easy to replicate Databricks data to any database or data warehouse and to maintain parity between systems with automated incremental Databricks replication. In addition, our Databricks drivers and connectors can be easily embedded into a wide range of data integration tools to augment existing solutions.
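Incremental replication is commonly built on a high-water mark: remember the latest modification timestamp already copied, and on each run fetch only rows changed after it. The sketch below illustrates that general idea with two in-memory SQLite databases; it is an assumption about the technique, not a description of how CData Sync works, and the `orders` table with its `modified_at` column is hypothetical.

```python
import sqlite3

DDL = "CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL, modified_at TEXT)"


def incremental_sync(source, target, watermark):
    """Copy rows modified after `watermark` into `target` and return the new watermark.

    Timestamps are ISO-8601 strings, so string comparison matches time order.
    """
    changed = source.execute(
        "SELECT id, total, modified_at FROM orders WHERE modified_at > ? ORDER BY modified_at",
        (watermark,),
    ).fetchall()
    # INSERT OR REPLACE overwrites earlier copies of updated rows (keyed on id).
    target.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", changed)
    target.commit()
    return max((row[2] for row in changed), default=watermark)


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(DDL)
target.execute(DDL)

source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2024-01-01T00:00:00"), (2, 20.0, "2024-01-02T00:00:00")],
)
first_mark = incremental_sync(source, target, "")  # initial full load

# Later: one row is updated and one is added at the source.
source.execute("UPDATE orders SET total = 25.0, modified_at = '2024-01-03T00:00:00' WHERE id = 2")
source.execute("INSERT INTO orders VALUES (3, 30.0, '2024-01-03T12:00:00')")
second_mark = incremental_sync(source, target, first_mark)  # copies only ids 2 and 3

print(second_mark)  # 2024-01-03T12:00:00
print(target.execute("SELECT id, total FROM orders ORDER BY id").fetchall())
# [(1, 10.0), (2, 25.0), (3, 30.0)]
```

A strict `>` comparison can miss rows that share the watermark timestamp but arrive after a run, so pipelines often re-read a small overlap window or track change sequence numbers instead.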

Does Databricks Integrate with Excel?

Absolutely. The best way to integrate Databricks with Excel is by using the CData Connect Cloud Excel Add-In. The Databricks Excel Add-In provides easy Databricks integration directly from Microsoft Excel Desktop, Mac, or Web (Excel 365). Simply configure your connection to Databricks from the easy-to-use cloud interface, and access Databricks just as you would any other native Excel data source.

Industry-Leading Support

Support is not just a part of our business, support is our business. Whenever you need help, please consult the resources below:

Using the Databricks drivers

- Bridge Databricks Connectivity with Apache NiFi

- Create Databricks-Connected Business Apps in AppSheet

- Use the CData Software JDBC Driver for Databricks in MicroStrategy

- Databricks Reporting and Star Schemas in OBIEE

- Visualize Databricks in TIBCO Spotfire through ODBC

- Visualize Databricks in Sisense

- Create Data Visualizations Based On Databricks in Mode

- Use the CData Software ODBC Driver for Databricks in MicroStrategy

Related Blog Articles

- Understanding Databricks ETL: A Quick Guide with Examples

- Catch Up With CData at the Databricks Data + AI Summit 2024

- Free Webinar: Integrate Your Enterprise Data with Databricks & CData Sync

- Key Takeaways from Databricks Data & AI Summit 2024

- What is Middleware? Importance, Types & Use Cases

- 3 Takeaways from Snowflake Summit 2023

- Cloud Migration is Impacting the Future of Business

- Exploiting Your Amazon Advertising Data

- Python is Now Fully Integrated into CData Virtuality’s Code Editor!

- CData Arc V21 Released