A PostgreSQL Interface for Databricks Data

Use the Remoting features of the Databricks JDBC Driver to create a PostgreSQL entry-point for data access.

Many PostgreSQL clients are available, from standard drivers to BI and analytics tools, which makes PostgreSQL a popular interface for data access. Using our JDBC Drivers, you can create PostgreSQL entry-points that any standard client can connect to.

To access Databricks data as a PostgreSQL database, use the CData JDBC Driver for Databricks and a JDBC foreign data wrapper (FDW). In this article, we compile the FDW, install it, and query Databricks data from PostgreSQL Server.

Connect to Databricks Data as a JDBC Data Source

To connect to Databricks as a JDBC data source, you will need the following:

- Driver JAR path: The JAR is located in the lib subfolder of the installation directory.

- Driver class: cdata.jdbc.databricks.DatabricksDriver

- JDBC URL: The URL must start with "jdbc:databricks:" and can include any of the connection properties in name-value pairs separated with semicolons.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find the required values in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
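As a minimal sketch of how the properties above combine into a JDBC URL, the Python helper below joins name-value pairs with semicolons after the "jdbc:databricks:" prefix. The function name `build_jdbc_url`, the sample hostname, and the placeholder token are illustrative assumptions, not part of the driver. Rejecting values that contain a semicolon is also an assumption, since this article does not cover the driver's escaping rules.

```python
JDBC_PREFIX = "jdbc:databricks:"


def build_jdbc_url(properties):
    """Join connection properties into a JDBC URL of name=value pairs."""
    parts = []
    for name, value in properties.items():
        text = str(value)
        # Assumption: reject ';' instead of escaping it, because the driver's
        # escaping rules are not described in this article.
        if ";" in text:
            raise ValueError(f"{name} contains ';', which separates properties")
        parts.append(f"{name}={text}")
    # Each pair is terminated with a semicolon, matching the article's sample URL.
    return JDBC_PREFIX + ";".join(parts) + ";"


url = build_jdbc_url({
    "Server": "dbc-example.cloud.databricks.com",  # hypothetical Server Hostname
    "HTTPPath": "MyHTTPPath",                      # placeholder HTTP Path
    "Token": "MyToken",                            # placeholder access token
})
print(url)
```

Keeping the properties in a dictionary makes it easier to swap in values from a secrets store instead of hard-coding the token in a script.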

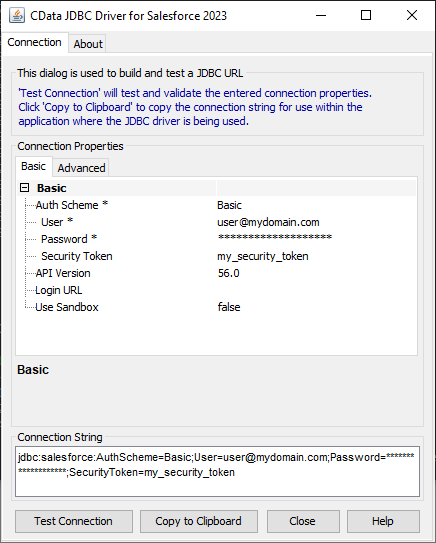

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Databricks JDBC Driver. Either double-click the JAR file or execute it from the command line.

java -jar cdata.jdbc.databricks.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

A typical JDBC URL is below:

jdbc:databricks:Server=127.0.0.1;Port=443;TransportMode=HTTP;HTTPPath=MyHTTPPath;UseSSL=True;User=MyUser;Password=MyPassword;
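To sanity-check a URL before handing it to PostgreSQL, it can help to split it back into its properties. The sketch below is an assumption about how such a check might look in Python (the helper `parse_jdbc_url` is hypothetical and is not the driver's own parser); it only verifies the prefix and the name=value shape described earlier.

```python
JDBC_PREFIX = "jdbc:databricks:"


def parse_jdbc_url(url):
    """Split a JDBC URL into a dict of connection properties."""
    if not url.startswith(JDBC_PREFIX):
        raise ValueError(f"URL must start with {JDBC_PREFIX!r}")
    properties = {}
    for pair in url[len(JDBC_PREFIX):].split(";"):
        if not pair:
            continue  # empty segment left by the trailing semicolon
        name, sep, value = pair.partition("=")
        if not sep:
            raise ValueError(f"expected name=value, got {pair!r}")
        properties[name.strip()] = value
    return properties


props = parse_jdbc_url(
    "jdbc:databricks:Server=127.0.0.1;Port=443;TransportMode=HTTP;"
    "HTTPPath=MyHTTPPath;UseSSL=True;User=MyUser;Password=MyPassword;"
)
for name, value in props.items():
    # Avoid echoing credentials when printing the parsed properties.
    shown = "***" if name in ("Password", "Token") else value
    print(f"{name} = {shown}")
```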

Build the JDBC Foreign Data Wrapper

The Foreign Data Wrapper can be installed as an extension to PostgreSQL, without recompiling PostgreSQL. The jdbc2_fdw extension is used as an example (downloadable here).

- Add a symlink from the shared object for your version of the JRE to /usr/lib/libjvm.so. For example:

ln -s /usr/lib/jvm/java-6-openjdk/jre/lib/amd64/server/libjvm.so /usr/lib/libjvm.so

- Start the build:

make install USE_PGXS=1
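The location of libjvm.so varies by JRE version and distribution, so the path in the symlink example may not match your system. The sketch below is one possible way to locate it; the helper `find_libjvm` is hypothetical, and the `/usr/lib/jvm` search root is an assumption about where the JRE is installed. It prints a suggested `ln -s` command and does not create the link itself.

```python
from pathlib import Path


def find_libjvm(jvm_root):
    """Return libjvm.so paths under jvm_root, with 'server' VMs listed first."""
    root = Path(jvm_root)
    if not root.is_dir():
        return []
    matches = sorted(root.rglob("libjvm.so"))
    # Stable sort: paths whose parent directory is 'server' come first
    # because False sorts before True.
    return sorted(matches, key=lambda p: p.parent.name != "server")


if __name__ == "__main__":
    candidates = find_libjvm("/usr/lib/jvm")  # assumed JRE install root
    if candidates:
        print(f"ln -s {candidates[0]} /usr/lib/libjvm.so")
    else:
        print("No libjvm.so found under /usr/lib/jvm")
```

Review the printed command before running it, since creating the link under /usr/lib typically requires elevated privileges.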

Query Databricks Data as a PostgreSQL Database

After you have installed the extension, follow the steps below to start querying Databricks data:

- Log into your database.

- Load the extension for the database:

CREATE EXTENSION jdbc2_fdw;

- Create a server object for Databricks:

CREATE SERVER Databricks FOREIGN DATA WRAPPER jdbc2_fdw OPTIONS ( drivername 'cdata.jdbc.databricks.DatabricksDriver', url 'jdbc:databricks:Server=127.0.0.1;Port=443;TransportMode=HTTP;HTTPPath=MyHTTPPath;UseSSL=True;User=MyUser;Password=MyPassword;', querytimeout '15', jarfile '/home/MyUser/CData/CData\ JDBC\ Driver\ for\ Databricks MyDriverEdition/lib/cdata.jdbc.databricks.jar');

- Create a user mapping with the username and password of a user known to the remote data source:

CREATE USER MAPPING for postgres SERVER Databricks OPTIONS ( username 'admin', password 'test');

- Create a foreign table in your local database:

postgres=# CREATE FOREIGN TABLE customers ( customers_id text, customers_City text, customers_CompanyName text) SERVER Databricks OPTIONS ( table_name 'customers');

postgres=# SELECT * FROM customers;
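Because the CREATE SERVER statement embeds the JDBC URL and the JAR path as SQL string literals, a stray single quote in either value breaks the statement. The following sketch generates the statement from a dictionary of options and doubles any embedded single quotes. The helpers `sql_literal` and `create_server_sql` and the shortened JAR path are illustrative assumptions; the option names (drivername, url, querytimeout, jarfile) are the ones used in the steps above. The server name is assumed to be a simple identifier and is not quoted.

```python
def sql_literal(value):
    """Quote a value as a SQL string literal, doubling embedded single quotes."""
    return "'" + str(value).replace("'", "''") + "'"


def create_server_sql(server_name, options):
    """Render a CREATE SERVER statement for the jdbc2_fdw wrapper."""
    rendered = ", ".join(
        f"{name} {sql_literal(value)}" for name, value in options.items()
    )
    return (
        f"CREATE SERVER {server_name} "
        f"FOREIGN DATA WRAPPER jdbc2_fdw OPTIONS ({rendered});"
    )


statement = create_server_sql("Databricks", {
    "drivername": "cdata.jdbc.databricks.DatabricksDriver",
    "url": "jdbc:databricks:Server=127.0.0.1;Port=443;HTTPPath=MyHTTPPath;",
    "querytimeout": "15",
    "jarfile": "/path/to/cdata.jdbc.databricks.jar",  # hypothetical location
})
print(statement)
```

The printed statement can be pasted into psql or saved to a script file and run with `psql -f`.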