
Data Replication Basics

Leaders, teams, and line-of-business analysts rely on reports, dashboards, and analytics tools to glean insights from their data, track performance, and make sound, data-driven decisions. Data warehouses (and, by extension, data replication into those warehouses) power these reports, dashboards, and analytics tools by storing data efficiently to minimize input and output (I/O) and deliver query results quickly to hundreds or thousands of concurrent users. Enterprise data replication enables organizations to centralize data in a core data warehouse and share petabytes of data across hundreds or even thousands of users while maintaining critical security, governance, and availability.

In this article, we explain the basics of data replication, how the process works, and the benefits of using enterprise data replication software. Finally, we provide guidance on how to select the best solution for your specific use case. For more on data warehousing, see our enterprise data warehousing article.

What Is Data Replication?

Data replication is the process of copying enterprise data, typically from a number of enterprise data sources, into a central database or data warehouse for storage and on-demand use. Its main purpose is to improve data availability and accessibility, as well as system robustness and consistency. All users who are granted access can share exactly the same data, no matter where they are in the world.

Depending on the organization's requirements, replication can happen once or on an ongoing basis. With ongoing replication, the solution processes your data continuously so that each copy, no matter where it lives, stays accurate and up to date with its original source. The end result is a strategic set of data copies in key locations that users can access without worrying about overwriting their colleagues' data or corrupting the original data source.

The Benefits Data Replication Unlocks

Data replication offers many benefits. The following are the core benefits and the most common reasons organizations take on data replication initiatives.

Data Reliability and Availability

Data replication enables data backup and storage, ensuring easy access to data for future and cross-organizational use. This is particularly crucial for multinational organizations spread across different locations: if a hardware failure occurs at one site, the data is still available at the others.

Disaster Recovery

One of the main benefits of replication is disaster recovery and data protection. Replication maintains a consistent backup copy in the event of a disaster or system breach, so if one system stops working, you can still access the data from a different location.

Server Performance

Data replication can also improve server performance. When companies keep copies of the data on several servers, read requests can be spread across those servers, so users can access data more quickly.

Better Network Performance

Keeping copies of the same data in multiple locations, close to where transactions are executed, lets analysts and clients retrieve data faster because their requests do not have to travel to a distant central server.

Data Centralization for 360 Degree Analytics

Data-driven businesses commonly replicate data from numerous sources into their data stores, such as data warehouses or data lakes, to fuel their business intelligence. This centralization makes it easier for analytics teams in different locations to work on shared projects.

Enhanced Test System Performance

Replication simplifies the distribution and synchronization of data for test systems that require quick access to data for faster testing.

How Data Replication Works: Types of Data Replication

Data replication works by copying data from one location to another, for example, between two on-premises hosts in the same or different locations. You can replicate data on demand, or in bulk or in batches on a schedule. Several replication methods are available, each suited to different needs.

Full Replication

Full replication means the entire data set from a given data source is replicated. This includes new and updated data as well as existing data, all copied from the source to the destination. This method is generally associated with higher costs, since its processing power and network bandwidth requirements are high. However, full table replication is useful for reflecting hard-deleted data (records removed from the source) in the destination, as well as for data that lacks a replication key.
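To make this concrete, here is a minimal Python sketch of full table replication. It uses the standard-library `sqlite3` module, with two in-memory databases standing in for the source and destination; the `customers` table and the `make_db` and `full_replicate` helpers are hypothetical. Because each run recopies the entire table, a row hard-deleted at the source also disappears from the destination.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical customers table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
    return conn


def full_replicate(source: sqlite3.Connection, dest: sqlite3.Connection) -> int:
    """Replace the destination table with a complete copy of the source table."""
    rows = source.execute("SELECT id, name FROM customers").fetchall()
    with dest:  # single transaction: commits on success, rolls back on error
        dest.execute("DELETE FROM customers")
        dest.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)
    return len(rows)


source, dest = make_db(), make_db()
source.executemany(
    "INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace"), (3, "Linus")]
)
source.commit()
print(full_replicate(source, dest))  # 3

source.execute("DELETE FROM customers WHERE id = 2")  # hard delete at the source
source.commit()
full_replicate(source, dest)  # every run recopies the whole table
print(dest.execute("SELECT id FROM customers ORDER BY id").fetchall())  # [(1,), (3,)]
```

Wrapping the delete and reload in one transaction means readers of the destination never see a half-empty table; the trade-off is that every run moves every row, which is the cost described above.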

Partial Replication

Partial replication is an advanced replication process that replicates only specified entries, and a subset of attributes for those entries, within a subtree. In an LDAP directory, for example, the entries and attributes to be replicated are specified by an LDAP administrator with the appropriate authority. With partial replication support, the administrator can allow entries that have certain object class values to be replicated to a consumer server.

Partial replication is faster than full table replication because it deals with a comparatively smaller volume of data, which reduces network load and consistency issues. It is mainly useful for mobile workforces such as insurance adjusters, financial planners, and salespeople.
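Partial replication can be illustrated with a small Python sketch that filters directory-style entries by subtree and object class, then keeps only selected attributes. The entries are plain dictionaries, not data from a real LDAP server, and the DN matching is a simplified string comparison (real LDAP DN matching also handles case and escaping). All names and values are hypothetical.

```python
def in_subtree(dn: str, base: str) -> bool:
    """True if the entry's DN is the base DN or sits anywhere beneath it (simplified)."""
    return dn == base or dn.endswith("," + base)


def partial_replicate(entries: list[dict], base: str, object_class: str,
                      attributes: list[str]) -> list[dict]:
    """Copy only matching entries, and only the listed attributes of each."""
    replica = []
    for entry in entries:
        if in_subtree(entry["dn"], base) and object_class in entry.get("objectClass", []):
            subset = {name: entry[name] for name in attributes if name in entry}
            replica.append({"dn": entry["dn"], **subset})
    return replica


entries = [
    {"dn": "uid=jdoe,ou=sales,dc=example,dc=com", "objectClass": ["person"],
     "cn": "Jane Doe", "mail": "jdoe@example.com", "userPassword": "secret"},
    {"dn": "uid=asmith,ou=eng,dc=example,dc=com", "objectClass": ["person"],
     "cn": "Al Smith", "mail": "asmith@example.com"},
    {"dn": "cn=printer1,ou=sales,dc=example,dc=com", "objectClass": ["device"],
     "cn": "printer1"},
]

replica = partial_replicate(entries, "ou=sales,dc=example,dc=com", "person", ["cn", "mail"])
print(replica)
# [{'dn': 'uid=jdoe,ou=sales,dc=example,dc=com', 'cn': 'Jane Doe', 'mail': 'jdoe@example.com'}]
```

Only one entry survives: the engineering user is outside the subtree, the printer has the wrong object class, and the password attribute is never copied because it is not in the attribute list.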

Continuous, or Incremental, Replication

Continuous, or incremental, data replication is the process of replicating only new or updated data from data sources to a database or data warehouse. Continuous replication typically uses a live connection between the underlying data source(s) and destination(s) and replicates any new data from the source(s) on a schedule.

Incremental replication saves bandwidth and time. If the process isn't incremental, every replication copies the entire data source (a snapshot). By copying only the data that has changed, you avoid waiting hours for syncs to complete. Unfortunately, not every data source supports incremental queries (requests for only the changed data), so incremental replication isn't always possible.
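The Python sketch below contrasts incremental and snapshot syncs under simple assumptions. `InMemorySource` is a hypothetical source whose rows carry an integer modification tick; when the source cannot answer "what changed since X?" requests, the sync falls back to copying a full snapshot.

```python
Row = tuple[str, int]  # (value, last_modified tick)


class InMemorySource:
    """Hypothetical source; `supports_incremental` says whether it can filter by change time."""

    def __init__(self, rows: dict[int, Row], supports_incremental: bool) -> None:
        self.rows = rows
        self.supports_incremental = supports_incremental

    def read_all(self) -> dict[int, Row]:
        return dict(self.rows)

    def read_changed_since(self, tick: int) -> dict[int, Row]:
        if not self.supports_incremental:
            raise NotImplementedError("this source cannot answer incremental requests")
        return {key: row for key, row in self.rows.items() if row[1] > tick}


def replicate(source: InMemorySource, dest: dict[int, Row], last_tick: int) -> tuple[int, int]:
    """Run one sync; return (rows_copied, tick to remember for the next run)."""
    if source.supports_incremental:
        batch = source.read_changed_since(last_tick)  # only new or updated rows
        dest.update(batch)
    else:
        batch = source.read_all()  # fall back to a full snapshot
        dest.clear()
        dest.update(batch)
    new_tick = max([last_tick, *(modified for _, modified in batch.values())])
    return len(batch), new_tick


source = InMemorySource({i: (f"row{i}", 1) for i in range(1, 1001)}, supports_incremental=True)
dest: dict[int, Row] = {}

copied, tick = replicate(source, dest, last_tick=0)
print(copied)  # 1000: the first run has to copy everything

source.rows[42] = ("row42-updated", 2)  # a single change at the source
copied, tick = replicate(source, dest, tick)
print(copied, tick)  # 1 2
```

After the first run, a one-row change costs one row of transfer instead of 1,000. A source created with `supports_incremental=False` would copy all 1,000 rows on every run.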

Log-Based Replication

Log-based incremental replication is a special case of replication that applies only to database sources. It replicates data based on information from the database log file, which records changes to the database. Because it reads changes directly from the log files, it reduces the load on the production system. This method works best if the source database structure is relatively static: if columns are added or removed, or if data types change, the configuration of the log-based system must be updated to reflect those changes. This is a time- and resource-intensive process, so if you anticipate frequent changes to your source structure, full table or key-based replication may be a better fit.
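The following Python sketch shows the core idea of log-based replication: replaying an ordered change log onto a replica and remembering the last applied position. The log here is a hypothetical in-memory list of `LogRecord` objects; real databases expose their logs through engine-specific mechanisms (for example, MySQL's binary log or PostgreSQL's write-ahead log), which this sketch does not read. Unlike key-based replication, deletes are captured because they appear in the log.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LogRecord:
    lsn: int                     # log sequence number: position in the change log
    op: str                      # "insert", "update", or "delete"
    key: int
    value: Optional[str] = None  # new row value; None for deletes


def apply_log(log: list[LogRecord], replica: dict[int, str], last_lsn: int) -> int:
    """Replay records after `last_lsn` (log assumed to be in LSN order); return the new position."""
    for record in log:
        if record.lsn <= last_lsn:
            continue  # already applied during an earlier run
        if record.op in ("insert", "update"):
            replica[record.key] = record.value
        elif record.op == "delete":
            replica.pop(record.key, None)  # deletes appear in the log, so they replicate too
        else:
            raise ValueError(f"unknown operation: {record.op}")
        last_lsn = record.lsn
    return last_lsn


log = [
    LogRecord(1, "insert", 1, "Ada"),
    LogRecord(2, "insert", 2, "Grace"),
    LogRecord(3, "update", 1, "Ada L."),
    LogRecord(4, "delete", 2),
]
replica: dict[int, str] = {}
position = apply_log(log, replica, last_lsn=0)
print(replica, position)  # {1: 'Ada L.'} 4

log.append(LogRecord(5, "insert", 3, "Linus"))  # new change written at the source
position = apply_log(log, replica, position)     # only LSN 5 is applied
print(replica, position)  # {1: 'Ada L.', 3: 'Linus'} 5
```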

Key-Based Replication

Key-based incremental replication, also known as key-based incremental loading, is a replication method that identifies new and updated data in the source using a column called a replication key, such as a last-modified timestamp or an increasing ID. Because fewer rows of data are copied during each update, key-based replication is more efficient than full table replication. However, one major limitation is that it cannot replicate hard-deleted data: when a record is deleted, its key value is deleted with it, so there is nothing left for the next sync to detect.
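Here is a minimal Python sketch of key-based incremental replication using `sqlite3`, assuming a hypothetical `orders` table whose `updated_at` column serves as the replication key. It copies only rows with a key greater than the last value seen, and it also reproduces the limitation described above: a hard-deleted row leaves nothing for the query to find, so it lingers in the destination.

```python
import sqlite3

SCHEMA = "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"


def key_based_sync(source: sqlite3.Connection, dest: sqlite3.Connection, last_key: int) -> int:
    """Copy rows whose replication key (updated_at) exceeds last_key; return the new high-water mark."""
    rows = source.execute(
        "SELECT id, status, updated_at FROM orders WHERE updated_at > ?", (last_key,)
    ).fetchall()
    with dest:
        # Insert new rows and overwrite updated ones (matched on the primary key).
        dest.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    return max([last_key] + [updated_at for _, _, updated_at in rows])


source, dest = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, dest):
    conn.execute(SCHEMA)

source.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 100), (2, "new", 101)])
source.commit()
key = key_based_sync(source, dest, last_key=0)  # copies both rows; key becomes 101

source.execute("UPDATE orders SET status = 'shipped', updated_at = 102 WHERE id = 1")
source.execute("DELETE FROM orders WHERE id = 2")  # hard delete: no row left to carry a key
source.commit()
key = key_based_sync(source, dest, key)  # picks up order 1 only

print(dest.execute("SELECT id, status FROM orders ORDER BY id").fetchall())
# [(1, 'shipped'), (2, 'new')]  (order 2 was deleted at the source but survives here)
```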

Extract, Load, and Transform Processes (ELT/ETL)

ELT stands for "Extract, Load, and Transform." In this process, raw data is loaded into the data warehouse first, and the warehouse's own processing power performs the transformations. That means there's no need for a separate staging area. ELT typically relies on cloud-based data warehouses, which can hold all types of data, including structured, semi-structured, unstructured, and raw data.

ETL, on the other hand, stands for "Extract, Transform, and Load." Your company likely has access to many data sources, but more often than not, that data is not presented in a form that is useful to you. With ETL, data is collected from various sources and then unified, cleansed, and deduplicated to conform to a common model before it is loaded into one data store, where it can later be analyzed. The results of that analysis can then be used to inform your business strategies and decisions.
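To illustrate the difference, the Python sketch below applies the same cleanup (trim and lowercase emails, parse amounts, remove duplicates) in both orders. An in-memory SQLite database stands in for the data warehouse, and the sample rows are hypothetical. In `etl`, the pipeline transforms data before loading it; in `elt`, raw rows are loaded first and the warehouse's SQL engine does the transformation.

```python
import sqlite3

# Hypothetical raw extract: messy emails, amounts as strings, one duplicate row.
RAW = [(" alice@example.com ", "10.50"), ("BOB@example.com", "3"), ("alice@example.com", "10.50")]


def etl(rows: list[tuple[str, str]], warehouse: sqlite3.Connection) -> None:
    # Transform in the pipeline first: trim and lowercase emails, parse amounts, deduplicate.
    clean = sorted({(email.strip().lower(), float(amount)) for email, amount in rows})
    warehouse.execute("CREATE TABLE sales (email TEXT, amount REAL)")
    warehouse.executemany("INSERT INTO sales VALUES (?, ?)", clean)  # then load


def elt(rows: list[tuple[str, str]], warehouse: sqlite3.Connection) -> None:
    # Load the raw data as-is...
    warehouse.execute("CREATE TABLE raw_sales (email TEXT, amount TEXT)")
    warehouse.executemany("INSERT INTO raw_sales VALUES (?, ?)", rows)
    # ...then transform with the warehouse's own SQL engine.
    warehouse.execute(
        "CREATE TABLE sales AS SELECT DISTINCT lower(trim(email)) AS email, "
        "CAST(amount AS REAL) AS amount FROM raw_sales"
    )


etl_db, elt_db = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
etl(RAW, etl_db)
elt(RAW, elt_db)

query = "SELECT email, amount FROM sales ORDER BY email"
print(etl_db.execute(query).fetchall())  # [('alice@example.com', 10.5), ('bob@example.com', 3.0)]
print(elt_db.execute(query).fetchall())  # [('alice@example.com', 10.5), ('bob@example.com', 3.0)]
```

Both paths end with the same clean table; the ELT path also keeps `raw_sales`, so the warehouse can re-run or change the transformation later without re-extracting.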

Common Data Replication Approaches

Synchronous Remote Replication

Synchronous replication copies data to one or more secondary sites immediately as it is created. As a result, the secondary system always holds a current copy, so acknowledged writes are not lost if the primary copy is lost. When data is written to the primary logical unit number (LUN), a write request is also sent to the secondary LUN. Only after both the primary and secondary LUNs return a write success response does the storage system return a write success response to the host. This ensures real-time data synchronization between the primary and secondary LUNs.
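The acknowledgement rule described above can be sketched in a few lines of Python. `Volume` is a hypothetical stand-in for a LUN, not a real storage API; the point is that the host is told "success" only when both the primary and secondary writes succeed.

```python
class Volume:
    """Hypothetical stand-in for a LUN: maps block address -> data."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy
        self.blocks: dict[int, bytes] = {}

    def write(self, address: int, data: bytes) -> bool:
        if not self.healthy:
            return False  # simulate a failed write
        self.blocks[address] = data
        return True


def synchronous_write(primary: Volume, secondary: Volume, address: int, data: bytes) -> bool:
    """Report success to the host only if BOTH volumes confirm the write."""
    primary_ok = primary.write(address, data)
    # A real array sends this over the replication link, typically in parallel with the
    # primary write; it is sequential here for simplicity.
    secondary_ok = secondary.write(address, data)
    return primary_ok and secondary_ok


primary, secondary = Volume("primary"), Volume("secondary")
print(synchronous_write(primary, secondary, 0, b"invoice-1001"))  # True
print(primary.blocks == secondary.blocks)                          # True

broken_secondary = Volume("secondary", healthy=False)
print(synchronous_write(primary, broken_secondary, 1, b"invoice-1002"))  # False: no success reported
```

How a real array reacts when the secondary fails (for example, suspending replication or failing the host write) is vendor-specific and not modeled here.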

Asynchronous Replication

Asynchronous replication copies data after the fact rather than in real time. It is designed to work over long distances and uses less bandwidth. When data is written to the primary LUN, the primary site records the changed data. After the primary LUN returns a write success response, the primary site returns a write success response to the host. Data synchronization is then performed manually or automatically, based on user-defined trigger conditions, to keep the primary and secondary LUNs consistent. Because the secondary copy can lag behind the primary, the most recent writes may be lost if the primary site fails before they are synchronized.
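For contrast, this hypothetical `AsyncReplicator` sketch acknowledges each write as soon as the primary copy is updated and queues the change; `synchronize` stands in for the manual or trigger-based synchronization step described above. Until it runs, the secondary lags behind the primary.

```python
from collections import deque


class AsyncReplicator:
    """Sketch: acknowledge writes after the primary succeeds; ship changes to the secondary later."""

    def __init__(self) -> None:
        self.primary: dict[int, bytes] = {}
        self.secondary: dict[int, bytes] = {}
        self.pending: deque[tuple[int, bytes]] = deque()  # changes recorded at the primary site

    def write(self, address: int, data: bytes) -> bool:
        self.primary[address] = data
        self.pending.append((address, data))  # remember the change for later transfer
        return True  # the host sees success before the secondary has the data

    def synchronize(self) -> int:
        """Run on a trigger (manual, timer, or threshold); return the number of changes shipped."""
        shipped = 0
        while self.pending:
            address, data = self.pending.popleft()  # oldest first, preserving write order
            self.secondary[address] = data
            shipped += 1
        return shipped


replicator = AsyncReplicator()
replicator.write(0, b"order-1")
replicator.write(1, b"order-2")
print(len(replicator.secondary))                   # 0: the secondary lags behind
print(replicator.synchronize())                    # 2
print(replicator.secondary == replicator.primary)  # True
```

Anything still in `pending` when the primary fails is exactly the data-loss window described above.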

SAN Level Replication

SAN-to-SAN replication duplicates a centralized repository of stored or archived data to another centralized data repository in real time. SAN-based replication is a strong option for enterprises looking to back up all or part of their infrastructure. In this case, the source and target are not individual VMs but entire LUNs. Typically, multiple VMs run on a single LUN, which is useful when that group of VMs must stay consistent with one another at the same point in time. VMware Site Recovery Manager (SRM) can use SAN replication to deliver a fully orchestrated disaster recovery (DR) solution, and even physical servers with disks on the SAN can be replicated.

Guest-OS Level Replication

This type of replication duplicates data block by block to a target machine: the OS, applications, and data can all be replicated this way. The OS files are "staged" on the target machine, and when you click the failover button, the target machine "becomes" the source machine.
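One common way to implement block-level replication is changed-block tracking: the source records which fixed-size blocks each write touches, and only those blocks are shipped to the target. The Python sketch below models this with a hypothetical `TrackedDisk` class and an assumed 4 KiB block size; it does not cover OS staging or failover, and it is an illustration rather than how any particular product works.

```python
BLOCK_SIZE = 4096  # assumed block size in bytes


class TrackedDisk:
    """Hypothetical source disk that records which blocks changed since the last sync."""

    def __init__(self, num_blocks: int) -> None:
        self.data = bytearray(num_blocks * BLOCK_SIZE)
        self.dirty: set[int] = set()

    def write(self, offset: int, payload: bytes) -> None:
        if not payload:
            return
        if offset < 0 or offset + len(payload) > len(self.data):
            raise ValueError("write outside the disk")
        self.data[offset:offset + len(payload)] = payload
        first = offset // BLOCK_SIZE
        last = (offset + len(payload) - 1) // BLOCK_SIZE
        self.dirty.update(range(first, last + 1))  # mark every block the write touched


def replicate_dirty_blocks(source: TrackedDisk, target: bytearray) -> list[int]:
    """Copy only the changed blocks to the target; return their indices."""
    sent = sorted(source.dirty)
    for index in sent:
        start = index * BLOCK_SIZE
        target[start:start + BLOCK_SIZE] = source.data[start:start + BLOCK_SIZE]
    source.dirty.clear()
    return sent


disk = TrackedDisk(num_blocks=8)
target = bytearray(len(disk.data))
disk.write(100, b"boot")                  # falls inside block 0
disk.write(5 * BLOCK_SIZE - 2, b"spans")  # crosses from block 4 into block 5
print(replicate_dirty_blocks(disk, target))  # [0, 4, 5]
print(target == disk.data)                   # True
```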

Application Level Replication

Application replication takes place at the transaction level: each transaction is captured and duplicated on multiple systems. This offers a short recovery time objective (RTO), or how long data can be inaccessible, and a short recovery point objective (RPO), or how much data loss is acceptable. However, you must ensure your operating system (OS) is properly maintained at the time of failover. This type of replication works best with SQL databases.
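Transaction-level replication can be sketched in Python by capturing each transaction as an ordered list of SQL statements and applying it atomically, in the same order, on every system. The three in-memory SQLite databases and the `accounts` table are hypothetical, and a production tool would also have to track which systems have applied which transactions and retry failures, which this sketch omits.

```python
import sqlite3

# A captured transaction: ordered (SQL statement, parameters) pairs.
Transaction = list[tuple[str, tuple]]


def apply_transaction(conn: sqlite3.Connection, txn: Transaction) -> None:
    with conn:  # all statements commit together, or all roll back on error
        for sql, params in txn:
            conn.execute(sql, params)


def replicate_transaction(systems: list[sqlite3.Connection], txn: Transaction) -> None:
    """Apply the same captured transaction, in the same order, on every system."""
    for conn in systems:
        apply_transaction(conn, txn)


systems = [sqlite3.connect(":memory:") for _ in range(3)]
for conn in systems:
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER)")

replicate_transaction(systems, [
    ("INSERT INTO accounts VALUES (?, ?)", (1, 100)),
    ("INSERT INTO accounts VALUES (?, ?)", (2, 50)),
])
replicate_transaction(systems, [  # a transfer: both updates belong to one transaction
    ("UPDATE accounts SET balance = balance - ? WHERE id = ?", (30, 1)),
    ("UPDATE accounts SET balance = balance + ? WHERE id = ?", (30, 2)),
])
print([c.execute("SELECT balance FROM accounts ORDER BY id").fetchall() for c in systems])
# [[(70,), (80,)], [(70,), (80,)], [(70,), (80,)]]
```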

Data Replication Challenges to Consider

Data replication is a complex technical process. It provides advantages for decision-making, but only if the process runs smoothly and consistently. Here, we cover the biggest challenges and what you can do about them.

Inconsistent Data

Controlling concurrent updates in a distributed environment is more complex than in a centralized one. Replicating data from a variety of sources at different times can leave some datasets out of sync with others. In a centralized warehouse, each piece of data has only one instance, so it's easier to tell whether a data point is up to date. In a distributed environment, datasets may live on different machines or in different locations, so as you update data, you need to make sure all instances of each dataset stay in sync. The replication process should be well thought out, reviewed, and revised as necessary.
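One simple way to detect out-of-sync copies is to compare a fingerprint of each replica. The Python sketch below hashes every row of a table in primary-key order and flags replicas whose digest differs from the first one; the site names, `products` table, and helper functions are hypothetical. A mismatch shows that copies diverged, not which copy is correct.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> str:
    """Hash all rows in primary-key order, so identical tables give identical digests."""
    digest = hashlib.sha256()
    # `table` and `key` are interpolated directly, so they must be trusted identifiers.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
    return digest.hexdigest()


def out_of_sync(replicas: dict[str, sqlite3.Connection], table: str, key: str) -> list[str]:
    """Return the replicas whose fingerprint differs from the first (reference) replica."""
    names = list(replicas)
    reference = table_fingerprint(replicas[names[0]], table, key)
    return [name for name in names[1:] if table_fingerprint(replicas[name], table, key) != reference]


replicas = {}
for site in ("us-east", "eu-west", "ap-south"):
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, price REAL)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", [(1, 9.99), (2, 19.99)])
    replicas[site] = conn

# Simulate a price change that reached every site except ap-south.
for site in ("us-east", "eu-west"):
    replicas[site].execute("UPDATE products SET price = 17.99 WHERE id = 2")

print(out_of_sync(replicas, "products", "id"))  # ['ap-south']
```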

Increased Storage Costs

Having the same data in more than one place consumes more storage space. It's important to take this cost into consideration when planning a data replication project.

Increased Processing and Network Costs

While reading data from distributed sites may be faster than reading from a more distant central location, writing is slower, because every update must be applied to each copy. Replication updates can consume processing power and slow the network down. Efficient data and database replication can help manage the increased load. One approach to speeding up the process is Extract, Load, Transform (ELT), which uses the processing power of the underlying database to transform the data after it's already loaded.

Choosing a replication process that fits your needs will help smooth out any bumps in the road.

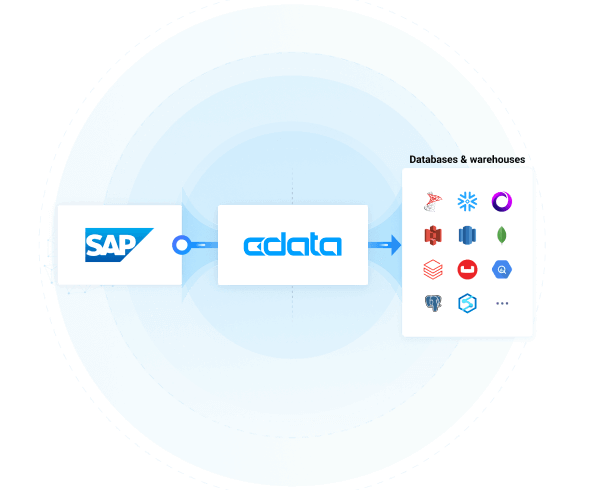

Introducing CData Sync: Seamless Data Replication Anywhere

CData Sync allows you to replicate data from more than 100 enterprise data sources to every popular data warehouse and database destination used across organizations today. With CData Sync, you can unify all your data sources and back up your data without writing any code for your data replication process. Support every data replication and ETL/ELT process, easily automate and schedule replications, and simplify your data integration and management today.

Download a free 30-day trial of CData Sync to get started with your data warehousing and replication initiatives.
