What is Massively Parallel Processing (MPP)? Definition, Components, Pros & Cons

As big data becomes more integral to how organizations run, processing large amounts of information efficiently is crucial. Massively parallel processing (MPP) is a method that processes massive data sets in parallel, allowing faster and more efficient data analysis. But what exactly is MPP, and how does it work? In this article, we explore the architecture of MPP systems, their core components, and the advantages and challenges of implementing this architecture for your business.

Today's organizations manage vast quantities of data that must be processed effectively to gain accurate insights. MPP architecture offers an ideal solution.

What is massively parallel processing (MPP)?

Massively parallel processing speeds up a computational task by dividing it into smaller jobs that run across multiple processors. MPP processors typically communicate through a messaging interface, and an interconnected arrangement of data paths carries the messages between them. In some implementations, 200 or more processors work on the same application. Setting up MPP is complicated: it requires deciding how to partition a shared database among processors and how to assign work to each of them. An MPP system is also called a "loosely coupled" or "shared nothing" system.
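To make the partitioning decision concrete, here is a minimal sketch in Python of hash-based data distribution, one common way to split a table among shared-nothing nodes. The function names, the `customer` distribution key, and the three-node cluster are assumptions made for illustration; they are not taken from any particular MPP product.

```python
import zlib
from collections import defaultdict


def node_for_key(key: str, num_nodes: int) -> int:
    """Map a distribution key to a node index using a stable hash."""
    # zlib.crc32 gives the same value on every run, unlike the built-in
    # hash() for strings, which is randomized per process.
    return zlib.crc32(key.encode("utf-8")) % num_nodes


def partition_rows(rows, key_field, num_nodes):
    """Split rows into one list per node; each node owns its slice alone."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[node_for_key(str(row[key_field]), num_nodes)].append(row)
    # Return a list indexed by node id (empty lists for nodes with no rows).
    return [partitions[i] for i in range(num_nodes)]


# Hypothetical sample data.
rows = [
    {"customer": "alice", "amount": 30},
    {"customer": "bob", "amount": 12},
    {"customer": "alice", "amount": 5},
    {"customer": "carol", "amount": 44},
]

for node_id, part in enumerate(partition_rows(rows, "customer", num_nodes=3)):
    print(node_id, part)
```

Because the hash depends only on the key, every row for a given customer lands on the same node, so work on that customer's data needs no messages to other nodes.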

The term "massively parallel" refers to using many computer processors to carry out coordinated calculations simultaneously. Graphics processing units (GPUs) are one example of a massively parallel architecture, capable of managing tens of thousands of threads at once. Grid computing is another approach: it harnesses the capabilities of many computers across distributed and diverse administrative domains, using them whenever they are available.

MPP distributes workloads across multiple processors to execute tasks simultaneously, which is crucial for large-scale data management. This architecture enhances performance and scalability by allowing complex queries to be processed quickly. It achieves this by breaking down queries into smaller tasks that can be executed concurrently across various nodes.
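The query breakdown described above can be sketched as a scatter-gather aggregation. In this hedged Python example, threads stand in for nodes (a real MPP system would run each step on a separate machine), and the function names and sample data are hypothetical. It mimics a query like "total amount per region": each node aggregates only the rows it owns, and a coordinator merges the partial results.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def local_sum_by_region(partition):
    """Runs on one node: aggregate only the rows that node owns."""
    partial = Counter()
    for region, amount in partition:
        partial[region] += amount
    return partial


def parallel_sum_by_region(partitions):
    """Coordinator: send the sub-query to every node, then merge results."""
    with ThreadPoolExecutor(max_workers=len(partitions)) as pool:
        partials = list(pool.map(local_sum_by_region, partitions))
    total = Counter()
    for partial in partials:
        total.update(partial)  # Counter.update adds counts together
    return dict(total)


# Hypothetical data, already distributed across three nodes.
partitions = [
    [("EU", 10), ("US", 5)],
    [("EU", 7), ("APAC", 3)],
    [("US", 8)],
]

print(parallel_sum_by_region(partitions))
# {'EU': 17, 'US': 13, 'APAC': 3}
```

The merge step is cheap here because sums can be combined from partial sums. Aggregates that cannot be combined this way need more data movement between nodes.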

An MPP system is considered better than a symmetric multiprocessing (SMP) system for applications that allow several databases to be searched in parallel. These include decision support systems and data warehouse applications. With MPP, businesses can leverage data to unlock valuable insights and support better decision-making. From business intelligence to machine learning, MPP architecture provides scalable processing capabilities for managing massive data.

The main advantage of MPP is its ability to distribute data across multiple processors, allowing for concurrent processing. This lets the system scale out: as the amount of data increases, more processors can be added so that processing time remains relatively unaffected. Additionally, MPP systems are typically designed to be fault-tolerant, which allows them to continue operating even if some processors fail.

Core components of an MPP architecture

MPP systems consist of independent nodes, each with its own operating system, so nodes do not compete with one another for the same resources. This allows multiple users within a company to run queries in a data warehouse simultaneously without experiencing lengthy response times. Additionally, MPP databases are widely used to centralize large amounts of data in data warehouses, so IT personnel and other teams can access the same centralized data assets from different locations.

An MPP system has dedicated resources with hundreds of processors, each equipped with its own operating system and memory.

There are two types of MPP database architecture: grid computing and computer clustering. Grid computing enables users to leverage multiple computers across a distributed network. In contrast, computer clustering connects nodes that can handle multiple tasks simultaneously by communicating with each other. Below are the core components of MPP.

Processing nodes are the fundamental components of MPP. Nodes can be desktop PCs, virtual servers, or simple processing cores with one or more processing units installed.

High-speed interconnects, or buses, are the low-latency, high-bandwidth connections between nodes. Examples include Ethernet, Fiber Distributed Data Interface (FDDI), and proprietary methods.

A distributed lock manager (DLM) coordinates resource sharing in MPP architectures where nodes share disk space. It receives resource requests from the nodes and grants them when the resource becomes available. It also handles node failures, which helps prevent data inconsistency and supports recovery.
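As a rough illustration of the lock manager's role, the sketch below models exclusive locks, a wait queue, and cleanup after a node failure. It is a simplified, single-process Python example with hypothetical names (`LockManager`, `node-1`, `block-42`). A production DLM runs across machines and supports multiple lock modes, which this sketch does not attempt.

```python
from collections import defaultdict, deque


class LockManager:
    """Toy lock manager: one exclusive owner per resource, FIFO waiters."""

    def __init__(self):
        self.owner = {}                    # resource -> node holding the lock
        self.waiters = defaultdict(deque)  # resource -> nodes waiting for it

    def request(self, node, resource):
        """Grant the lock if it is free, else queue the node. True if held."""
        if resource not in self.owner:
            self.owner[resource] = node
            return True
        if self.owner[resource] != node and node not in self.waiters[resource]:
            self.waiters[resource].append(node)
        return self.owner[resource] == node

    def release(self, node, resource):
        """Release a held lock and pass it to the next waiter, if any."""
        if self.owner.get(resource) != node:
            return None  # the node does not hold this lock
        queue = self.waiters[resource]
        if queue:
            self.owner[resource] = queue.popleft()
            return self.owner[resource]
        del self.owner[resource]
        return None

    def node_failed(self, node):
        """Remove a failed node from all queues and free the locks it held."""
        for queue in self.waiters.values():
            if node in queue:
                queue.remove(node)
        for resource in [r for r, o in self.owner.items() if o == node]:
            self.release(node, resource)


dlm = LockManager()
print(dlm.request("node-1", "block-42"))  # True: lock was free
print(dlm.request("node-2", "block-42"))  # False: node-2 is queued
dlm.node_failed("node-1")                 # lock passes to the next waiter
print(dlm.owner["block-42"])              # node-2
```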

To learn more about the differences between the data stores that might use MPP, check out our blogs: Azure Synapse vs Azure SQL DB: 8 Crucial Differences and Amazon Redshift vs. DynamoDB: 8 Key Differences & Benefits.

Pros and cons of MPP

Because data processing demands are constantly growing, parallel computing has become a transformative force, allowing multiple tasks or processes to run simultaneously, greatly improving performance and efficiency. However, parallel computing also presents challenges, such as the need for careful design, synchronization overhead, and potential costs related to specialized hardware.

Pros:

Increased performance is one of the main benefits of parallel computing. By distributing tasks across multiple processing units, it can handle complex calculations and data-intensive operations much faster than sequential computing.

Scalability is an advantage of parallel computing, allowing it to manage larger workloads as more processing units are added. With technological advancements and the availability of more powerful processors, parallel computing can effectively utilize these resources. This results in faster and more efficient data processing and task execution.

Resource utilization is a key advantage of parallel computing. It optimizes the use of multiple processing units simultaneously, ensuring that processing power is maximized and that hardware resources are used efficiently.

Cons:

Managing complexity when implementing parallel processing can be difficult. Developing algorithms and programs that are effectively parallelized requires careful design and consideration of task dependencies. Additionally, debugging and testing these programs can be more complicated than sequential ones.

Synchronization and fault tolerance issues can arise in parallel computing, where tasks often need to communicate and synchronize with one another. This can lead to complexities and potential bottlenecks, ultimately impeding overall performance. It is essential to implement efficient synchronization mechanisms and effective load balancing to address these issues.
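One simple way to check load balance is to measure how unevenly data is spread. When every node must finish before results are combined, the most heavily loaded node sets the overall run time. The Python sketch below computes a skew ratio from per-node row counts; the function name and the sample counts are assumptions for illustration.

```python
def skew_ratio(partition_sizes):
    """Largest partition divided by the mean; 1.0 means perfectly balanced."""
    mean = sum(partition_sizes) / len(partition_sizes)
    return max(partition_sizes) / mean


print(skew_ratio([100, 100, 100, 100]))  # 1.0
print(skew_ratio([250, 50, 50, 50]))     # 2.5
```

In the second case one node holds 2.5 times the average load, so the other three nodes sit idle for much of the step while waiting for it to finish.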

Infrastructure costs can be significant. Parallel processing often requires specialized hardware, such as multi-core processors, GPUs, or clusters of interconnected computers. Acquiring and maintaining such infrastructure can be expensive, particularly for smaller organizations or individuals.

Despite its disadvantages, MPP remains a critical and indispensable technique, paving the way for faster, more efficient, and scalable computing for enterprises.

MPP use cases and applications

The ability to efficiently process and analyze large datasets has become essential in the rapidly evolving fields of artificial intelligence, machine learning, and data science. MPP is applicable across various sectors, including finance, healthcare, e-commerce, and more.

These are just a few examples; the applications of MPP are vast and diverse, limited only by the imagination and requirements of the problem at hand.

| Use Case | Description |
| --- | --- |
| Recommendation systems | MPP facilitates quick analysis of user behavior and preferences, enabling personalized recommendations at scale for e-commerce platforms and streaming services. |
| Genomics | Genomic research generates massive amounts of data. MPP accelerates the analysis of DNA sequences, aiding in the discovery of genetic patterns and potential treatments for diseases. |
| Business intelligence | MPP databases excel in processing large datasets for complex business intelligence queries. They can analyze vast amounts of data, enabling organizations to gain valuable insights for decision-making. |
| Large datasets | MPP databases are ideal for processing large datasets efficiently. They distribute the data across multiple processing nodes, enabling parallel processing and reducing response times for data-intensive tasks. |
| Complex searches | MPP databases excel at handling complex searches due to their parallel processing capabilities. They can process large amounts of data in parallel, allowing for faster search results. |
| Data warehousing | MPP systems are ideal for data warehousing solutions, where they can manage and analyze vast amounts of historical data, enabling organizations to generate insights and reports rapidly. |

Massively parallel processing with CData Virtuality

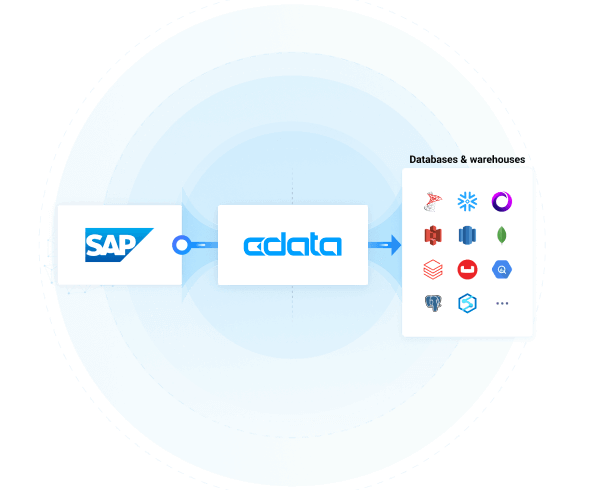

CData Virtuality enables businesses to fully harness the power of massively parallel processing, optimizing data performance and scalability. Virtuality gives your enterprise the power to process large datasets by employing parallel algorithms, efficient data partitioning, and smooth load balancing—boosting analysis, decision-making, and overall efficiency. CData Virtuality provides your organization with the tools to turn big data into actionable insights, maximizing your processing capabilities for competitive advantage.
