Data Streaming: A Complete Overview with Benefits, Challenges, Use Cases & Examples

Organizations rely on data to keep their business running smoothly. Traditional ETL (extract, transform, load) processes remain important for everyday operations, but some data must be processed quickly if the business is to act on it effectively. The faster data can be processed, the more agile the business response can be.

Data streaming is nothing new. Most people know it from watching movies over the internet or playing online games with friends in other countries. Applied to business data, streaming is no less transformative: it lets organizations handle massive flows of data in real time, driving instant insights, enhancing customer experiences, and enabling swift responses to changing conditions. Far beyond entertainment, data streaming is becoming a cornerstone of modern business strategy.

In this blog post, we'll go into the details of data streaming: what it entails, how it differs from batch processing, its benefits, use cases, and more. We hope this explains how data streaming is reshaping industries and how you can use it to stay ahead.

What is data streaming?

Data streaming is the continuous, real-time flow of data from sources such as sensors, social media feeds, financial transactions, and IoT (Internet of Things) devices. Unlike traditional batch processing, where data is collected over a period and then processed in bulk, data streaming processes each piece of data as it arrives, often within milliseconds. A data stream is a continuous sequence of data elements that is processed in motion rather than at rest, and this ability to handle data on the fly is what sets streaming apart from traditional data processing methods.
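
To make the batch/stream distinction concrete, here is a minimal Python sketch, assuming events are plain numbers (for example, sensor readings) arriving one at a time. The batch version waits for the full dataset before computing an average; the streaming version updates a running average as each event arrives, using the incremental update `mean += (value - mean) / count`, so a current result is available after every event. The function names are illustrative, not part of any library.

```python
from typing import Iterable, Iterator


def batch_average(readings: list[float]) -> float:
    """Batch style: data is collected first, then processed in bulk."""
    return sum(readings) / len(readings)


def streaming_average(readings: Iterable[float]) -> Iterator[float]:
    """Stream style: update the result incrementally as each reading arrives."""
    count = 0
    mean = 0.0
    for value in readings:
        count += 1
        # Incremental mean update: earlier readings need not be kept in memory.
        mean += (value - mean) / count
        yield mean


readings = [20.0, 22.0, 24.0, 26.0]
print(batch_average(readings))            # 23.0 (one answer, after all data is in)
print(list(streaming_average(readings)))  # [20.0, 21.0, 22.0, 23.0]
```

Both approaches reach the same final answer; the streaming version simply has an answer at every step, which is what makes acting on data in motion possible.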

Key components of data streaming

In a typical streaming setup, information flows through three main stages:

- Data ingestion: Raw data is collected from sources such as sensors, social media platforms, and transaction logs, and funneled into the streaming system. Effective data ingestion tools are designed to handle high volumes of data with minimal latency, minimizing the risk that information is lost or delayed on its way in.

- Data storage: While streaming focuses on real-time processing, data often needs to be stored for later analysis, recordkeeping, or compliance purposes. Data storage in a streaming context must be scalable and efficient, capable of handling the ongoing data flow without bottlenecks. Storage systems should be optimized for speed and capacity to accommodate continuous data streams.

- Data processing: Data processing is the heart of the streaming architecture. This is where incoming data is analyzed, filtered, and transformed immediately. Depending on the use case, this can involve simple operations like aggregating data or complex analytics like predictive modeling. The goal is to extract actionable insights from data as it arrives, accelerating decision-making.
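
The three stages above can be sketched as a chain of Python generators, assuming a hypothetical source that emits JSON-encoded temperature readings. Ingestion parses raw input, storage appends each record to an archive (a plain list standing in for a real storage system), and processing filters and transforms records into alerts. The field names, the 30 °C threshold, and the helper functions are all illustrative assumptions. Because generators are lazy, each record passes through all three stages before the next one is read.

```python
import json
from typing import Iterable, Iterator


def ingest(raw_lines: Iterable[str]) -> Iterator[dict]:
    """Ingestion: parse raw records from a source as they arrive."""
    for line in raw_lines:
        yield json.loads(line)


def store(records: Iterable[dict], archive: list[dict]) -> Iterator[dict]:
    """Storage: keep a copy of every record for later analysis or compliance."""
    for record in records:
        archive.append(record)
        yield record


def process(records: Iterable[dict], threshold: float) -> Iterator[str]:
    """Processing: filter and transform records into actionable alerts."""
    for record in records:
        if record["temp_c"] > threshold:
            yield f"ALERT: sensor {record['sensor']} at {record['temp_c']} C"


# Hypothetical source: in practice this would be a socket, queue, or log.
source = [
    '{"sensor": "a1", "temp_c": 21.5}',
    '{"sensor": "b7", "temp_c": 38.2}',
    '{"sensor": "a1", "temp_c": 22.0}',
]
archive: list[dict] = []
for alert in process(store(ingest(source), archive), threshold=30.0):
    print(alert)  # ALERT: sensor b7 at 38.2 C
print(len(archive))  # 3 (every record is stored, not just the alerts)
```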

Batch processing vs. stream processing: Key differences

Understanding the differences between batch and stream processing can help you choose the right approach for your data needs. While both methods have their merits, they serve different purposes and excel in different scenarios.

Here are the key factors that distinguish them:

| Factor | Batch Processing | Stream Processing |
|---|---|---|
| Data volume | Processes large volumes of data collected over time. | Handles continuous streams of data in small, real-time increments. |
| Data processing | Processes data in batches at scheduled intervals. | Processes data as soon as it arrives. |
| Latency | Higher, because data must accumulate before it is processed. | Low, providing near-instant results. |
| Implementation complexity | Generally simpler to implement, but requires more storage and processing time. | More complex, due to the need for real-time processing infrastructure. |
| Analytics complexity | Suited to historical analysis and complex queries on large datasets. | Suited to real-time analytics and immediate decision-making. |
| Cost | Can be cost-effective for data that isn't time-sensitive. | May cost more because of real-time processing requirements. |
| Use cases | Reporting, data warehousing, and offline analytics. | Real-time monitoring, fraud detection, and personalized experiences. |

Batch processing is traditionally used where timing isn't critical, such as generating end-of-day reports or performing large-scale data migrations. Stream processing, by contrast, excels in environments where immediate insights and actions are required.

5 Benefits of data streaming

Data streaming offers a range of powerful benefits that can transform how businesses operate. Processing and analyzing data in real time makes organizations more agile, allowing them to adapt to rapid changes, fine-tune offerings, and respond to market trends before the competition does.

- Real-time insights and decision-making: One of the most significant benefits of data streaming is gaining real-time insights. Whether monitoring user activity on a website or tracking financial transactions, businesses can act on data as it happens, leading to faster, better-informed decisions.

- Improved operational efficiency: Data streaming reduces the lag between data collection and action, streamlining operations. Potential issues can be identified and resolved as soon as they surface, leading to smoother, more responsive operations.

- Enhanced customer experience: Data streaming allows businesses to offer personalized experiences by analyzing customer behavior in real time. For example, streaming data can be used to tailor recommendations, provide real-time support, or deliver targeted promotions, all contributing to higher customer satisfaction.

- Increased revenue and profitability: Instant analysis from real-time data enables businesses to capitalize on opportunities as they arise, whether it’s optimizing pricing strategies, reducing waste in supply chains, or detecting fraud as soon as it happens.

- Reduced data storage costs: Because streaming systems can filter, aggregate, and act on data in flight, organizations can retain only the results they need rather than staging large volumes of raw data for later batch processing. Some storage is still required for archival and compliance purposes, but overall storage costs can be lower than with traditional processing.

Challenges of data streaming

Along with its benefits, data streaming presents challenges that deserve serious consideration before implementation. A solid plan can head off most of these issues and keep your data streaming architecture performing well.

- Data quality and consistency issues: In a streaming environment, data is ingested and processed in real time, which can lead to inconsistencies or errors if the incoming data is incomplete or malformed. Ensuring data quality in a continuous stream is more complex than in batch processing, where data can be validated and cleaned before analysis. Prepare for this by implementing real-time data validation and error-handling mechanisms to catch and correct issues as the data is processed.

- Scalability concerns: As the volume of streaming data grows, streaming systems must be able to scale horizontally to manage spikes in data flow without compromising performance or data integrity. This often requires careful planning and investment in robust infrastructure. For many organizations, cloud-based services offer an answer by providing flexible, on-demand resources, allowing them to scale their infrastructure as needed.

- Infrastructure and cost considerations: Real-time data processing demands significant investment in modern technology, such as powerful servers, fast network connections, and in some cases artificial intelligence (AI), which can quickly exceed budgets without meticulous planning. Consider managed services to reduce the need for in-house infrastructure and expertise.

- Latency and performance management: Low latency is one of the main advantages of data streaming, but achieving it requires careful performance management. Processing delays erode the value of real-time insights, so continuous monitoring and optimization are needed to keep latency low. Performance monitoring tools and real-time optimization strategies help keep things running smoothly.

- Data security and privacy concerns: Data in constant flow increases the potential attack surface, making it more susceptible to breaches and presenting a greater challenge than securing static data. Stringent security measures for managing sensitive information, including encryption, access controls, and real-time data monitoring, will help prevent, detect, and respond quickly to potential threats.
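
For the data quality challenge, one common pattern is to validate each record as it arrives and divert anything malformed to a separate "dead-letter" collection for later inspection, so a single bad record never halts the stream. The sketch below assumes a hypothetical transaction schema with `id`, `amount`, and `currency` fields; `validate` and `clean_stream` are illustrative names, and real systems usually rely on a schema registry or a validation library rather than hand-written checks.

```python
from typing import Iterable, Iterator

# Hypothetical schema: field name -> accepted type(s).
REQUIRED_FIELDS = {"id": str, "amount": (int, float), "currency": str}


def validate(record: dict) -> list[str]:
    """Return a list of problems with the record (empty if it is valid)."""
    problems = []
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing {name}")
        elif not isinstance(record[name], expected_type):
            problems.append(f"bad type for {name}")
    # Business rule, checked only once the structure is known to be sound.
    if not problems and record["amount"] < 0:
        problems.append("negative amount")
    return problems


def clean_stream(records: Iterable[dict], dead_letters: list[dict]) -> Iterator[dict]:
    """Pass valid records downstream; divert invalid ones without stopping."""
    for record in records:
        problems = validate(record)
        if problems:
            dead_letters.append({"record": record, "errors": problems})
        else:
            yield record


incoming = [
    {"id": "t1", "amount": 42.5, "currency": "USD"},
    {"id": "t2", "amount": "oops", "currency": "USD"},
    {"id": "t3", "currency": "EUR"},
]
dead: list[dict] = []
valid = list(clean_stream(incoming, dead))
print([r["id"] for r in valid])     # ['t1']
print([d["errors"] for d in dead])  # [['bad type for amount'], ['missing amount']]
```

Keeping the rejected record together with its error list makes it possible to fix and replay it later instead of silently dropping it.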

Data streaming use cases and examples

Data streaming is a versatile technology that can be applied across various industries to drive real-time insights and actions. Here are just a few use cases that illustrate how data streaming is being used today:

- Real-time fraud detection: Monitoring transactions as they happen allows organizations to identify suspicious activity and respond instantly to prevent fraud. For example, streaming data can help banks detect unusual spending patterns and block transactions before they're completed.

- IoT data processing: Massive amounts of data from connected devices, including sensors, wearables, and smart appliances, are processed in real time. This permits businesses to monitor and control devices, optimize energy usage, or provide instant feedback to users. Smart cities use streaming data from traffic sensors to manage congestion and improve public safety.

- Customer behavior analysis: Data streamed from websites, apps, and social media provides insights into what customers are doing at any given moment. This helps organizations adjust marketing strategies, personalize recommendations, or optimize content delivery. Streaming data enables immediate responses to customer needs, improving overall satisfaction and loyalty.

- Financial market analysis: Financial markets are highly dynamic, with prices and trends changing rapidly throughout the day. Data streaming gives traders and analysts access to real-time market data, enabling them to make informed decisions on buying or selling assets. This can make the difference between profit and loss in fast-paced trading environments.

- Supply chain optimization: Supply chains are complex networks that require constant monitoring to ensure efficiency. Organizations can track the movement of goods, monitor inventory levels, and predict potential disruptions before they emerge, optimizing their operations, reducing costs, and improving delivery times.
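
As an illustration of the fraud detection use case, a common streaming technique is a sliding time window: keep only the recent events for each card and flag a transaction when too many occur within the window (a so-called velocity check). The sketch below is hypothetical; the `VelocityCheck` class, the 60-second window, and the limit of three transactions are arbitrary illustrative choices, not recommended thresholds, and production systems typically combine many signals.

```python
from collections import defaultdict, deque


class VelocityCheck:
    """Flag a card that makes more than `max_txns` transactions within `window_s` seconds.

    Assumes transactions for each card arrive in timestamp order.
    """

    def __init__(self, window_s: float = 60.0, max_txns: int = 3) -> None:
        self.window_s = window_s
        self.max_txns = max_txns
        self.recent: dict[str, deque] = defaultdict(deque)  # card -> recent timestamps

    def observe(self, card: str, ts: float) -> bool:
        """Record a transaction and return True if it looks suspicious."""
        times = self.recent[card]
        times.append(ts)
        # Evict timestamps that have slid out of the window.
        while times and times[0] <= ts - self.window_s:
            times.popleft()
        return len(times) > self.max_txns


check = VelocityCheck()
events = [("card-1", 0), ("card-1", 10), ("card-1", 20),
          ("card-1", 30), ("card-2", 30), ("card-1", 95)]
flags = [check.observe(card, ts) for card, ts in events]
print(flags)  # [False, False, False, True, False, False]
```

Only a small amount of state per card is kept in memory, which is what lets a check like this run on every transaction as it happens.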

Popular data streaming technologies and platforms

Many data streaming tools have emerged over the years, and the field continues to evolve as new approaches address different business needs. Below is a short list of some of the more widely used data streaming technologies and how they can support your organization's real-time streaming needs:

Amazon Kinesis

Offered by AWS, Amazon Kinesis is a powerful service for collecting, processing, and analyzing real-time data streams at scale. It’s well-suited for use cases like real-time analytics, log and event data processing, and live application monitoring. Kinesis integrates seamlessly with other AWS services, making it a smart choice for organizations already using the AWS ecosystem.
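
Kinesis Data Streams scales horizontally by spreading records across shards. Per the AWS documentation, each record's partition key is hashed with MD5 into a 128-bit integer, and the record goes to the shard whose hash key range contains that value. The local simulation below illustrates that mapping only: it does not call AWS, the helper names are hypothetical, and it assumes the shards divide the hash key space into equal, contiguous ranges. Because the same key always hashes to the same shard, records for one customer keep their relative order.

```python
import hashlib

HASH_SPACE = 2**128  # Kinesis hash keys are 128-bit integers


def hash_key(partition_key: str) -> int:
    """MD5 of the partition key, read as an unsigned 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def shard_for(partition_key: str, shard_count: int) -> int:
    """Pick a shard index, assuming shards own equal, contiguous hash key ranges."""
    return hash_key(partition_key) * shard_count // HASH_SPACE


for key in ["customer-17", "customer-42", "customer-17"]:
    print(key, "-> shard", shard_for(key, shard_count=4))
```

Adding shards gives each one a narrower slice of the key space, which is how throughput grows without changing how producers choose partition keys.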

Apache Kafka

Apache Kafka is an open-source distributed event streaming platform known for its high throughput, scalability, and durability. Originally developed by LinkedIn, Kafka is now widely used across industries for building real-time data pipelines, event sourcing, and stream processing applications. Kafka can handle millions of events per second, making it a prime choice for large-scale, mission-critical data streaming projects.
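
Kafka's core abstraction is the append-only log: producers append records to a topic partition, each record receives a sequential offset, and each consumer group tracks its own position in that log. That is why several applications can read the same data independently, and why a consumer can rewind to replay history. The toy in-memory model below illustrates the idea only; `PartitionLog`, `poll`, and `seek` here are hypothetical names, not the Kafka client API, and for brevity this version commits a group's offset as soon as it reads.

```python
from dataclasses import dataclass, field


@dataclass
class PartitionLog:
    """Toy model of a single Kafka-style partition: an append-only list of records."""

    records: list[str] = field(default_factory=list)
    committed: dict[str, int] = field(default_factory=dict)  # consumer group -> next offset

    def append(self, value: str) -> int:
        """Append a record and return its offset (its position in the log)."""
        self.records.append(value)
        return len(self.records) - 1

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        """Read from the group's committed offset onward, then advance it."""
        start = self.committed.get(group, 0)
        batch = self.records[start:start + max_records]
        self.committed[group] = start + len(batch)
        return batch

    def seek(self, group: str, offset: int) -> None:
        """Move the group's position so it can replay (or skip) records."""
        self.committed[group] = offset


log = PartitionLog()
for event in ["login", "add-to-cart", "checkout"]:
    log.append(event)

print(log.poll("analytics"))   # ['login', 'add-to-cart', 'checkout']
print(log.poll("billing", 1))  # ['login']  (each group keeps its own position)
print(log.poll("analytics"))   # []  (nothing new yet)
log.seek("analytics", 0)
print(log.poll("analytics"))   # ['login', 'add-to-cart', 'checkout']
```

Reading never removes records from the log, which is the key difference from a traditional message queue.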

Apache Flink

Designed specifically for stream processing, Apache Flink is an open-source platform capable of complex event processing and real-time analytics. Flink is often used where low-latency, fault-tolerant processing is critical, such as in IoT applications, fraud detection, and network monitoring.
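
Windowing is central to the kind of real-time analytics Flink performs: an unbounded stream is grouped into finite windows so aggregates can be computed over it. The plain-Python sketch below shows the idea behind a tumbling (fixed-size, non-overlapping) event-time window that counts events per key. It is not Flink's API, and it assumes events arrive in timestamp order; real Flink jobs handle late and out-of-order events with watermarks.

```python
from collections import Counter
from typing import Iterable, Iterator


def tumbling_counts(
    events: Iterable[tuple[float, str]], size_s: float
) -> Iterator[tuple[float, dict[str, int]]]:
    """Count events per key in fixed, non-overlapping windows of `size_s` seconds.

    Assumes (timestamp, key) events arrive in timestamp order.
    Yields (window_start, counts) each time a window closes; empty windows are skipped.
    """
    current_start = None
    counts: Counter[str] = Counter()
    for ts, key in events:
        start = ts - (ts % size_s)  # align the timestamp to its window boundary
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # previous window is complete
            counts = Counter()
        current_start = start
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final window


readings = [(1, "sensor-a"), (4, "sensor-b"), (7, "sensor-a"), (12, "sensor-a"), (25, "sensor-b")]
for window_start, window_counts in tumbling_counts(readings, size_s=10):
    print(window_start, window_counts)
# 0 {'sensor-a': 2, 'sensor-b': 1}
# 10 {'sensor-a': 1}
# 20 {'sensor-b': 1}
```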

Google Cloud Dataflow

Built on Apache Beam, Google Cloud Dataflow is a fully managed service that supports both batch and stream processing. Dataflow is particularly useful for organizations leveraging Google Cloud, as it integrates well with services like BigQuery and Cloud Pub/Sub, offering a comprehensive solution for processing large datasets in real time.

Spark Streaming

An extension of Apache Spark, Spark Streaming enables real-time data processing within the same framework used for batch data. Known for its scalability and fault tolerance, Spark Streaming is commonly used for real-time analytics, machine learning, and ETL pipelines, making it a popular choice for organizations looking to unify their data processing workflows.
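
Spark Streaming achieves this unification by dividing the incoming stream into micro-batches, one per fixed batch interval, and processing each micro-batch with the Spark engine, so ordinary batch logic carries over to streaming. The sketch below mimics that model in plain Python; it is not the Spark API. The input is assumed to be already grouped into intervals, the helper names are hypothetical, and the running total is a simplified stand-in for Spark's stateful operations such as `updateStateByKey`.

```python
from collections import Counter
from typing import Iterable, Iterator


def count_words(lines: list[str]) -> Counter:
    """Plain batch logic: count words in a finite collection of lines."""
    return Counter(word for line in lines for word in line.split())


def run_micro_batches(batches: Iterable[list[str]]) -> Iterator[Counter]:
    """Apply the same batch logic to each micro-batch and keep a running total."""
    totals: Counter = Counter()
    for batch in batches:
        totals += count_words(batch)  # merge this interval's result into the state
        yield Counter(totals)         # snapshot of the running totals


# Hypothetical input: each inner list is what arrived during one batch interval.
stream = [["error disk full", "ok"], [], ["error timeout"]]
historical = ["error disk full", "ok", "error timeout"]

for snapshot in run_micro_batches(stream):
    print(dict(snapshot))
print(dict(count_words(historical)))  # a batch run over the same data
```

After the last micro-batch, the streaming totals match the batch result over the same data, which is the practical benefit of reusing one processing framework for both.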

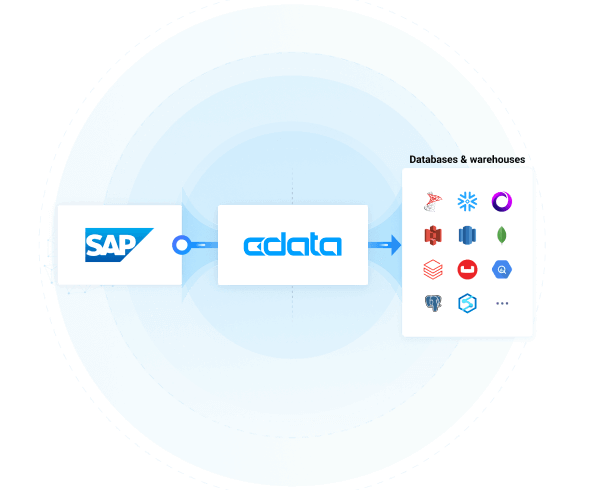

Boost your data streaming capabilities with CData Virtuality

CData Virtuality offers comprehensive data streaming support, enabling seamless virtualized connectivity to over 200 enterprise data sources, including popular streaming services like Amazon Kinesis, Apache Kafka, and Google Cloud Dataflow. CData Virtuality handles the complexities of data connectivity and integration so you don’t have to, ensuring your data streams are always accessible and actionable.

Explore CData Virtuality today

Get an interactive product tour to experience how to uplevel your enterprise data management strategy with powerful data virtualization and integration.