Prepare, Blend, and Analyze Spark Data in Alteryx Designer

Build workflows to access live Spark data for self-service data analytics.

The CData ODBC Driver for Spark enables access to live data from Spark under the ODBC standard, allowing you to work with Spark data in a wide variety of BI, reporting, and ETL tools, or directly using familiar SQL queries. This article shows how to connect to Spark data using an ODBC connection in Alteryx Designer to perform self-service BI, data preparation, data blending, and advanced analytics.

The CData ODBC drivers deliver high performance when interacting with live Spark data in Alteryx Designer, thanks to the optimized data processing built into the driver. When you issue complex SQL queries from Alteryx Designer to Spark, the driver pushes supported SQL operations, such as filters and aggregations, directly to Spark and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. With built-in dynamic metadata querying, you can visualize and analyze Spark data using native Alteryx data field types.
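To make the client-side fallback more concrete, here is a minimal Python sketch of an in-memory hash join, one common way an engine can join two result sets locally when the data source cannot perform the join itself. It is a conceptual illustration under that assumption, not the CData SQL engine's actual implementation; the `hash_join` function and the sample rows are hypothetical.

```python
# Conceptual sketch: a client-side inner hash join over two lists of rows.
# Hypothetical illustration only; not the CData SQL engine's implementation.
from collections import defaultdict
from typing import Any, Dict, Iterable, List

Row = Dict[str, Any]


def hash_join(left: Iterable[Row], right: Iterable[Row], key: str) -> List[Row]:
    """Inner-join `left` and `right` on `key`, as an engine might do locally."""
    # Build phase: index the right-hand rows by their join key.
    index: Dict[Any, List[Row]] = defaultdict(list)
    for row in right:
        if row[key] is not None:  # SQL NULL never matches in an inner join
            index[row[key]].append(row)

    # Probe phase: emit one merged row for every matching pair.
    joined: List[Row] = []
    for row in left:
        if row[key] is None:
            continue
        for match in index.get(row[key], []):
            joined.append({**row, **match})
    return joined


# Hypothetical rows standing in for two separate query results.
orders = [{"CustomerId": 1, "Total": 25.0}, {"CustomerId": 2, "Total": 10.0}]
customers = [{"CustomerId": 1, "City": "Raleigh"}]
print(hash_join(orders, customers, "CustomerId"))
# [{'CustomerId': 1, 'Total': 25.0, 'City': 'Raleigh'}]
```

Building the index on one side and probing with the other keeps the join linear in the total number of rows. For brevity, same-named columns are overwritten by the right-hand row; a real engine would keep them apart with table-qualified names.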

Connect to Spark Data

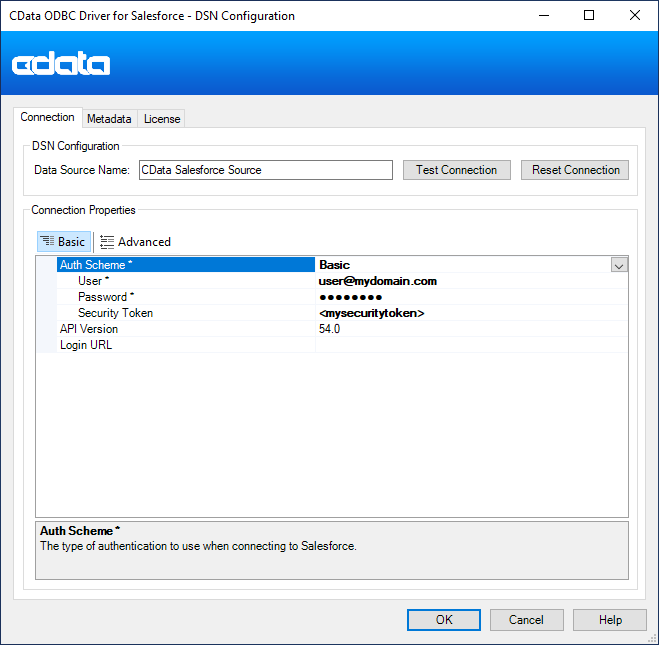

- If you have not already done so, provide values for the required connection properties in the data source name (DSN). You can configure the DSN using the built-in Microsoft ODBC Data Source Administrator. This is also the last step of the driver installation. See the "Getting Started" chapter in the Help documentation for a guide to using the Microsoft ODBC Data Source Administrator to create and configure a DSN.

Set the Server, Database, User, and Password connection properties to connect to SparkSQL.

When you configure the DSN, you may also want to set the Max Rows connection property. This will limit the number of rows returned, which is especially helpful for improving performance when designing reports and visualizations.

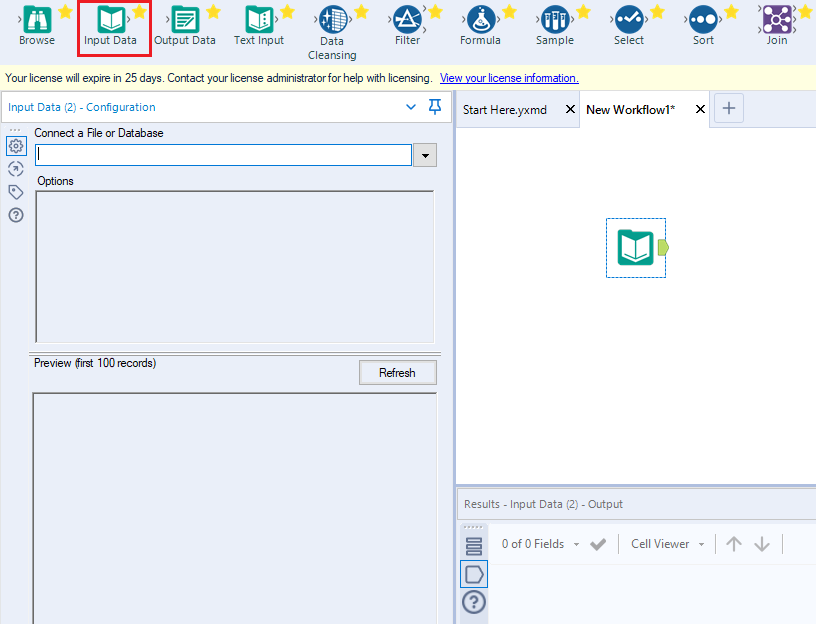

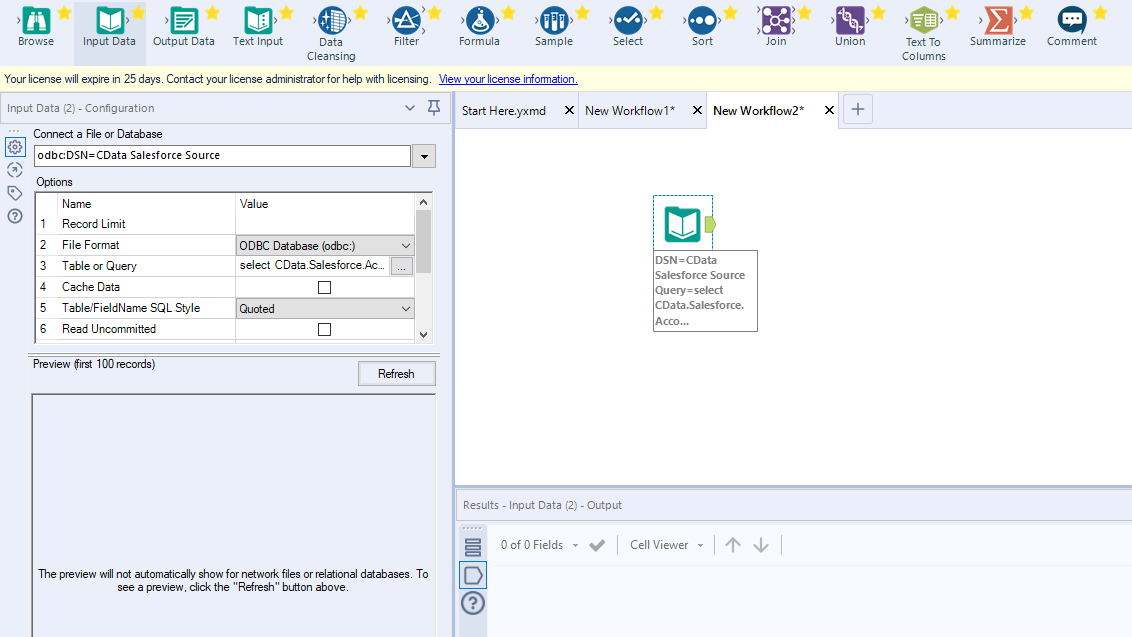

- Open Alteryx Designer and create a new workflow.

- Drag a new Input Data tool onto the workflow canvas.

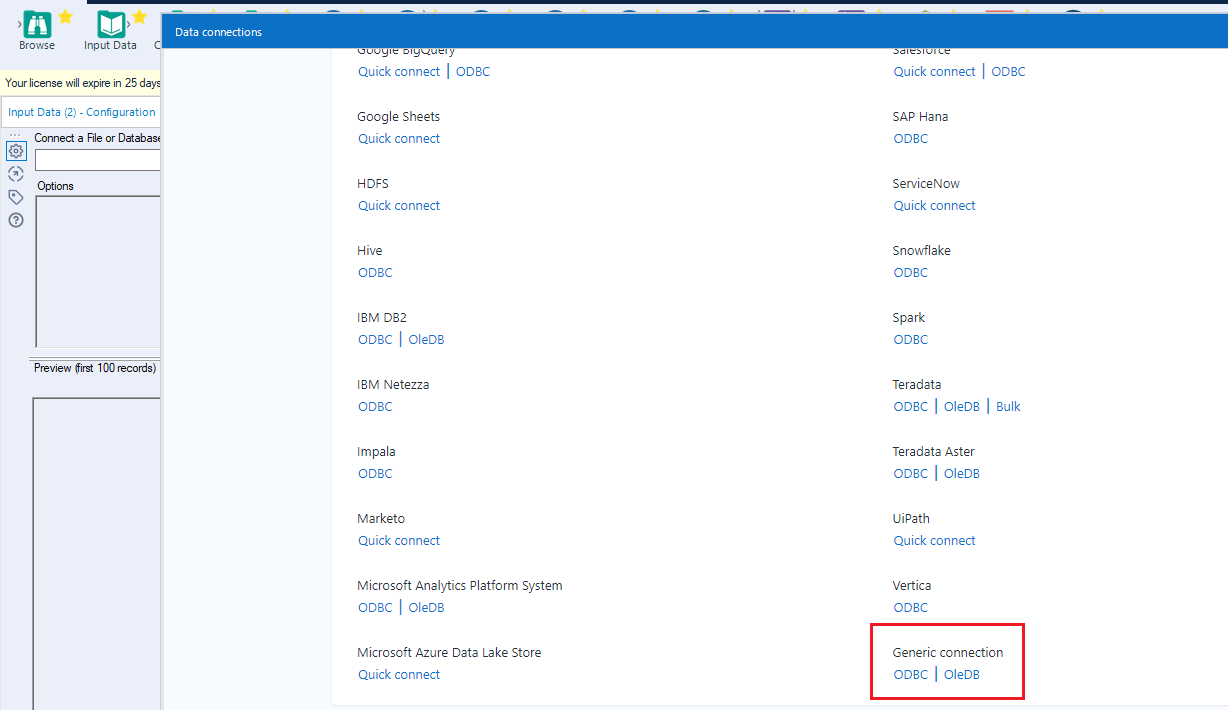

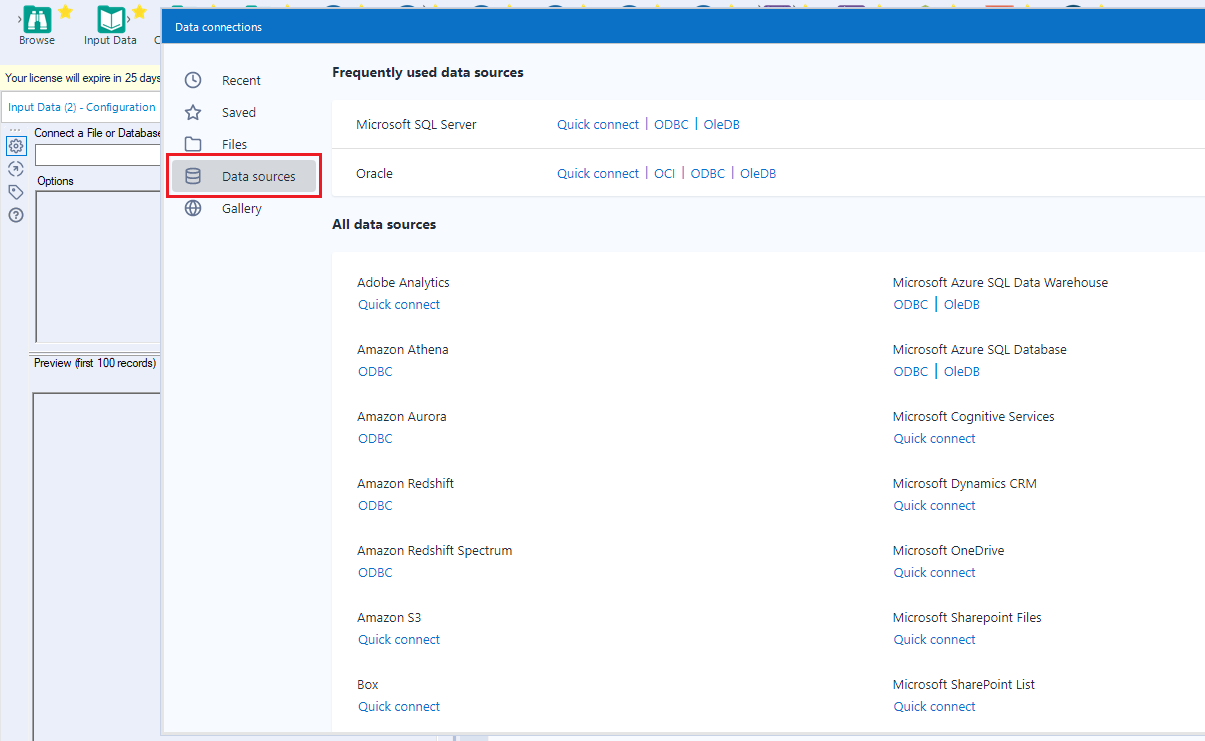

- Click the drop-down under Connect a File or Database and select the Data sources tab.

- Scroll to the end of the page and click ODBC under Generic connection.


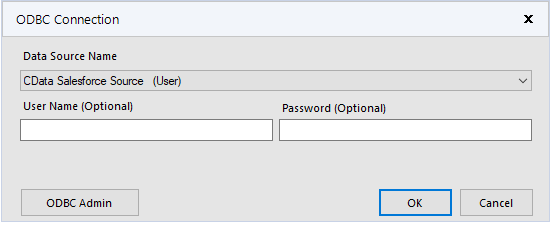

- Select the DSN (CData Spark Source) that you configured for use in Alteryx.

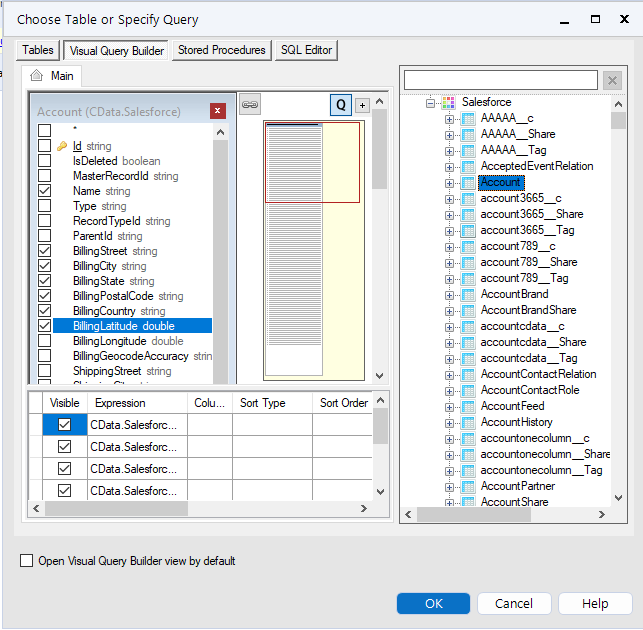

- In the wizard that opens, drag the table you want to query into the Query Builder box, then check the boxes for the fields you want to include in your query. Where possible, filters and aggregations in the generated query are pushed down to Spark, while any unsupported operations (which can include SQL functions and JOIN operations) are handled client-side by the CData SQL engine embedded in the driver.

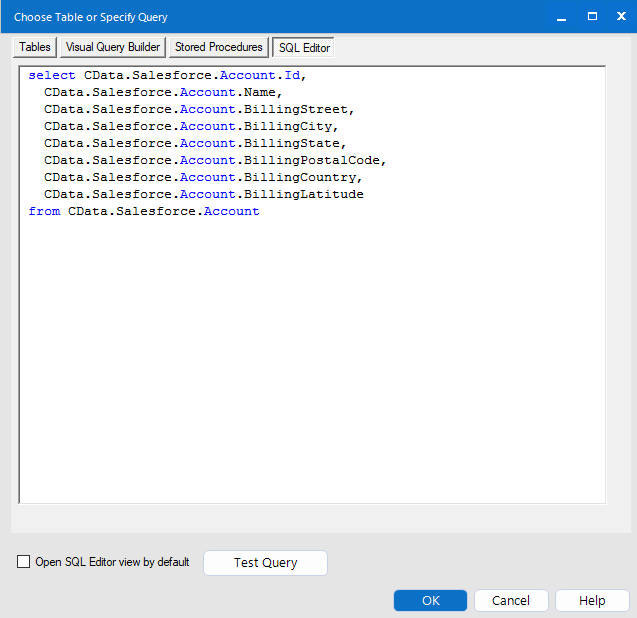
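Conceptually, checking fields in the Query Builder produces a plain SELECT statement over the chosen table. The Python sketch below mimics that step. The `build_select` helper and the `Customers` table with `City` and `Balance` columns are hypothetical, and the sketch assumes simple unquoted identifiers rather than the quoting rules of any particular SQL dialect.

```python
# Sketch of what checking fields in a query builder amounts to.
# Table and column names are hypothetical; only simple identifiers are accepted.
import re
from typing import Sequence

_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def build_select(table: str, fields: Sequence[str]) -> str:
    """Build 'SELECT <fields> FROM <table>', or SELECT * if no fields are checked."""
    for name in (table, *fields):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    columns = ", ".join(fields) if fields else "*"
    return f"SELECT {columns} FROM {table}"


print(build_select("Customers", ["City", "Balance"]))
# SELECT City, Balance FROM Customers
```

Rejecting anything other than simple identifiers keeps the sketch short and avoids building SQL from arbitrary text; a production tool would quote identifiers instead.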

If you want to customize the dataset further, open the SQL Editor and modify the query manually, adding clauses, aggregations, and other operations so that you retrieve exactly the Spark data you want.
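As an illustration of a manual edit in the SQL Editor, the Python sketch below appends a filter, a grouping, and an ordering to a generated SELECT. The `customize_query` helper, the `Customers` table, and its `City`, `Balance`, and `Country` columns are hypothetical. Filters and aggregations like these are the kinds of operations the driver may push down to Spark. Because the clause arguments are inserted as raw SQL, they should never come from untrusted input.

```python
# Sketch of hand-editing a generated query; all names are hypothetical.
# Clause arguments are raw SQL text: never build them from untrusted input.
from typing import Optional, Sequence


def customize_query(
    base_sql: str,
    where: Optional[str] = None,
    group_by: Sequence[str] = (),
    order_by: Optional[str] = None,
) -> str:
    """Append WHERE, GROUP BY, and ORDER BY clauses (in that order) to a SELECT."""
    parts = [base_sql.strip().rstrip(";")]
    if where:
        parts.append(f"WHERE {where}")
    if group_by:
        parts.append("GROUP BY " + ", ".join(group_by))
    if order_by:
        parts.append(f"ORDER BY {order_by}")
    return " ".join(parts)


query = customize_query(
    "SELECT City, SUM(Balance) AS TotalBalance FROM Customers",
    where="Country = 'US'",
    group_by=["City"],
    order_by="TotalBalance DESC",
)
print(query)
# SELECT City, SUM(Balance) AS TotalBalance FROM Customers WHERE Country = 'US' GROUP BY City ORDER BY TotalBalance DESC
```

The sketch assumes the base query has no WHERE, GROUP BY, or ORDER BY clause of its own.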

With the query defined, you are ready to work with Spark data in Alteryx Designer.

Perform Self-Service Analytics on Spark Data

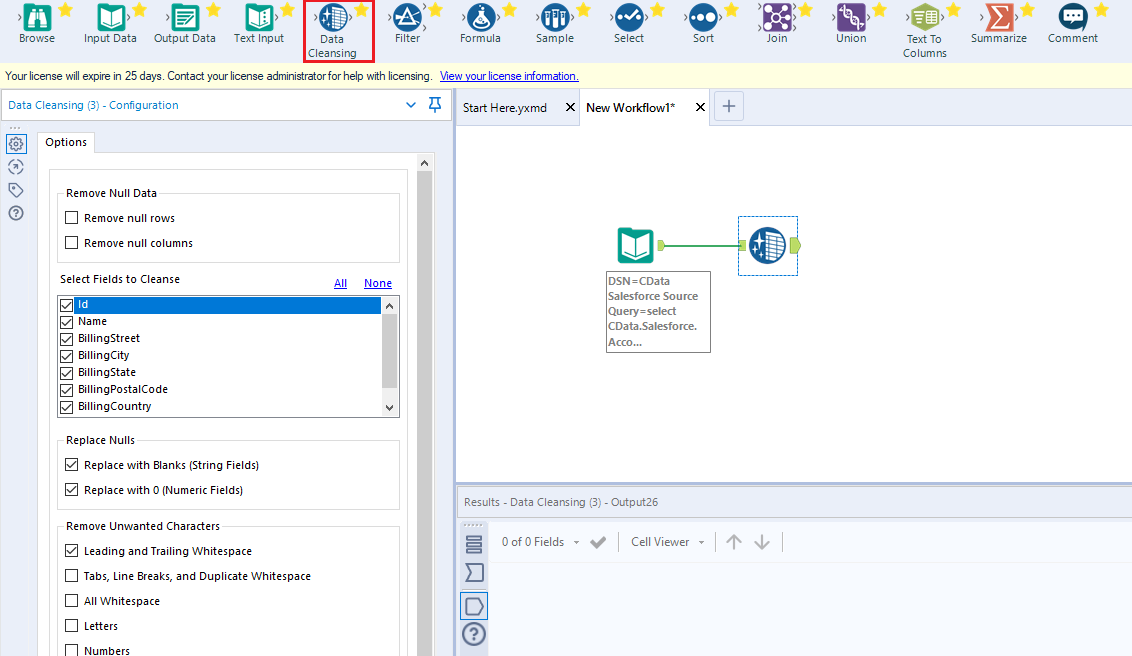

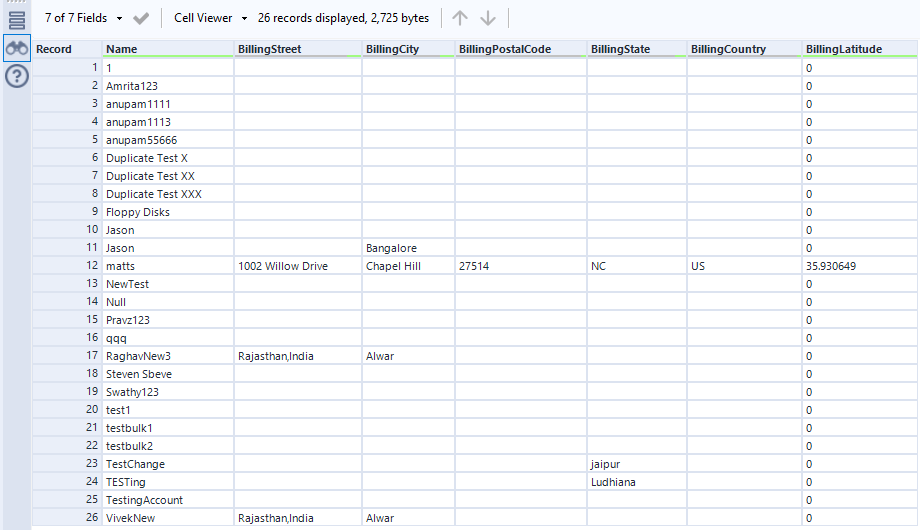

You are now ready to create a workflow to prepare, blend, and analyze Spark data. The CData ODBC Driver performs dynamic metadata discovery, presenting data using Alteryx data field types and allowing you to leverage the Designer's tools to manipulate data as needed and build meaningful datasets. In the example below, you will cleanse and browse data.
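To illustrate what presenting data using Alteryx field types involves, the Python sketch below maps common Spark SQL type names to Alteryx field type names. The mapping is a hypothetical example chosen for illustration; the driver reports its own metadata, and the types it actually exposes may differ. Unknown or complex types (such as arrays) fall back to `V_WString` here, which is also an assumption.

```python
# Hypothetical SQL-to-Alteryx type mapping, for illustration only.
# The driver reports its own metadata; this table is an assumption.
SQL_TO_ALTERYX = {
    "BOOLEAN": "Bool",
    "TINYINT": "Int16",  # Spark TINYINT is signed; Alteryx Byte is unsigned
    "SMALLINT": "Int16",
    "INT": "Int32",
    "INTEGER": "Int32",
    "BIGINT": "Int64",
    "FLOAT": "Float",
    "DOUBLE": "Double",
    "DECIMAL": "FixedDecimal",
    "STRING": "V_WString",
    "VARCHAR": "V_WString",
    "DATE": "Date",
    "TIMESTAMP": "DateTime",
    "BINARY": "Blob",
}


def alteryx_type(sql_type: str, default: str = "V_WString") -> str:
    """Map a SQL type name such as 'DECIMAL(10,2)' to an Alteryx field type."""
    # Strip precision/scale or element-type suffixes: 'DECIMAL(10,2)' -> 'DECIMAL'.
    base = sql_type.strip().upper().split("(", 1)[0].split("<", 1)[0].strip()
    return SQL_TO_ALTERYX.get(base, default)


for t in ["bigint", "DECIMAL(10,2)", "timestamp", "ARRAY<INT>"]:
    print(t, "->", alteryx_type(t))
```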

- Add a Data Cleansing tool to the workflow. Under Replace Nulls, check the boxes to replace null text fields with blanks and null numeric fields with 0. You can also check the box under Remove Unwanted Characters to remove leading and trailing whitespace.

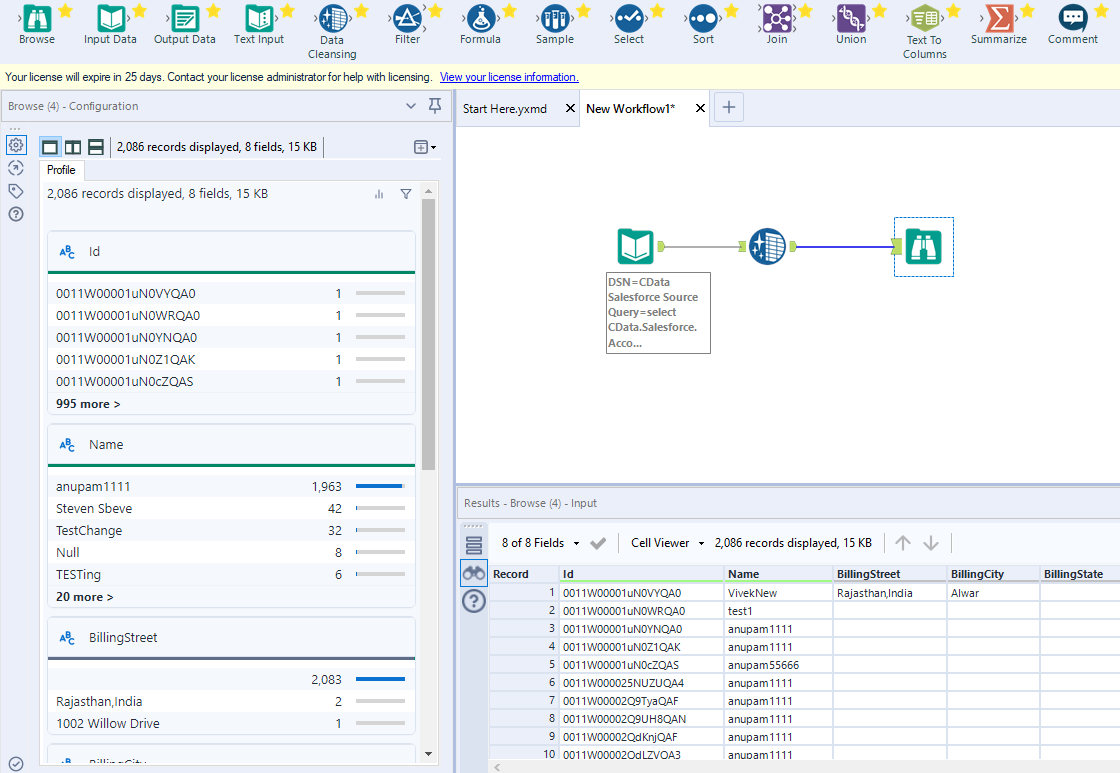

- Add a Browse tool to the workflow.

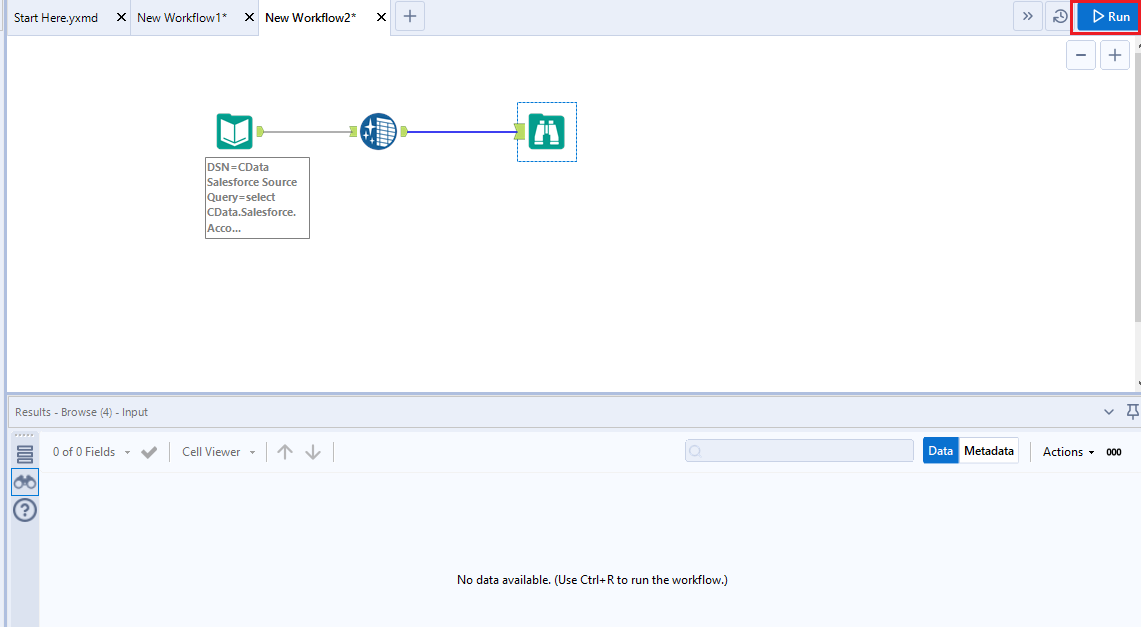

- Click Run (or press CTRL+R) to run the workflow.

- Browse your cleansed Spark data in the results view.
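The Replace Nulls and Remove Unwanted Characters options used in these steps can be approximated in a few lines of Python, which may help when spot-checking the results in the Browse output. This is a minimal sketch, not the Data Cleansing tool's implementation: the `cleanse` helper is hypothetical, rows are plain dictionaries, and the caller states which fields are numeric instead of relying on Alteryx field types.

```python
# Rough approximation of two Data Cleansing options; helper name is hypothetical.
from typing import AbstractSet, Any, Dict, Iterable, List

Row = Dict[str, Any]


def cleanse(
    rows: Iterable[Row],
    numeric_fields: AbstractSet[str],
    trim_whitespace: bool = True,
) -> List[Row]:
    """Replace nulls (blank for text, 0 for numeric) and optionally trim strings."""
    cleaned: List[Row] = []
    for row in rows:
        out: Row = {}
        for name, value in row.items():
            if value is None:
                # Replace Nulls: numeric fields get 0, text fields get a blank.
                value = 0 if name in numeric_fields else ""
            elif trim_whitespace and isinstance(value, str):
                # Remove Unwanted Characters: leading and trailing whitespace.
                value = value.strip()
            out[name] = value
        cleaned.append(out)
    return cleaned


sample = [{"City": "  Raleigh ", "Balance": None}, {"City": None, "Balance": 42.5}]
print(cleanse(sample, numeric_fields={"Balance"}))
# [{'City': 'Raleigh', 'Balance': 0}, {'City': '', 'Balance': 42.5}]
```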

Thanks to built-in, high-performance data processing, you will be able to quickly cleanse, transform, and/or analyze your Spark data with Alteryx.