Access Live Kafka Data in AWS Lambda

Connect to live Kafka data in AWS Lambda using the CData JDBC Driver.

AWS Lambda is a compute service that lets you build applications that respond quickly to new information and events. AWS Lambda functions can work with live Kafka data when paired with the CData JDBC Driver for Apache Kafka. This article describes how to connect to and query Kafka data from an AWS Lambda function built in Eclipse.

At the time this article was written (June 2022), Eclipse version 2019-12 and Java 8 were the highest versions supported by the AWS Toolkit for Eclipse.

With built-in optimized data processing, the CData JDBC Driver is designed for high-performance access to live Kafka data. When you issue complex SQL queries to Kafka, the driver pushes supported SQL operations, such as filters and aggregations, directly to Kafka and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. In addition, its built-in dynamic metadata querying lets you work with and analyze Kafka data using native data types.

Gather Connection Properties and Build a Connection String

Set BootstrapServers and the Topic properties to specify the address of your Apache Kafka server, as well as the topic you would like to interact with.

Authorization Mechanisms

- SASL Plain: The User and Password properties should be specified. AuthScheme should be set to 'Plain'.

- SASL SSL: The User and Password properties should be specified. AuthScheme should be set to 'Scram'. UseSSL should be set to true.

- SSL: The SSLCert and SSLCertPassword properties should be specified. UseSSL should be set to true.

- Kerberos: The User and Password properties should be specified. AuthScheme should be set to 'Kerberos'.

You may be required to trust the server certificate. In such cases, specify the TrustStorePath and the TrustStorePassword if necessary.
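As a rough illustration of how the properties above fit together, the sketch below assembles a JDBC URL for the SASL Plain case. The `KafkaJdbcUrl` class and its methods are hypothetical helpers written for this article, not part of the CData driver, and the server, topic, and credential values are placeholders. A Lambda deployment would also need the RTK property added to the map.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper for illustration only; not part of the CData driver. */
final class KafkaJdbcUrl {
    static final String PREFIX = "jdbc:cdata:apachekafka:";

    /**
     * Joins the properties as Name=Value; pairs after the driver prefix,
     * in insertion order. Values containing ';' are not handled here.
     */
    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> e : props.entrySet()) {
            url.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return url.toString();
    }

    /** Collects the properties listed above for the SASL Plain mechanism. */
    static Map<String, String> saslPlain(String servers, String topic,
                                         String user, String password) {
        Map<String, String> p = new LinkedHashMap<>();
        p.put("BootstrapServers", servers);
        p.put("Topic", topic);
        p.put("AuthScheme", "Plain");
        p.put("User", user);
        p.put("Password", password);
        return p;
    }

    public static void main(String[] args) {
        // Placeholder values; substitute your own server, topic, and credentials.
        System.out.println(build(saslPlain("localhost:9092", "MyTopic", "admin", "pass")));
    }
}
```

The other mechanisms would follow the same pattern with their own properties, for example adding `UseSSL` for the SSL-based options.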

NOTE: To use the JDBC driver in an AWS Lambda function, you will need a license (full or trial) and a Runtime Key (RTK). For more information on obtaining this license (or a trial), contact our sales team.

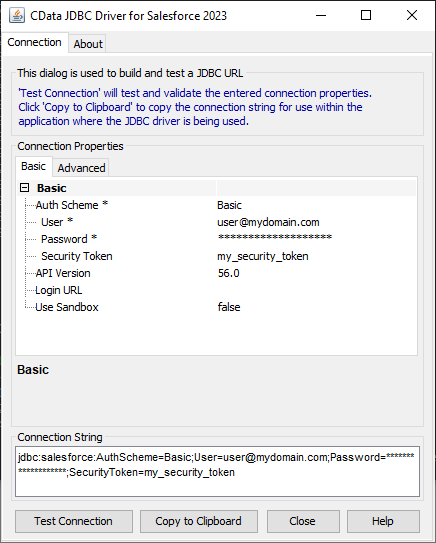

Built-in Connection String Designer

For assistance constructing the JDBC URL, use the connection string designer built into the Kafka JDBC Driver. Either double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.apachekafka.jar

Fill in the connection properties (including the RTK) and copy the connection string to the clipboard.
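Because the copied connection string contains credentials and the RTK, you may prefer to keep it out of source code. The sketch below reads it from an environment variable, which AWS Lambda lets you configure per function. The variable name `KAFKA_JDBC_URL` and the `ConnectionStringSource` class are assumptions made for this example, not something the driver or AWS defines.

```java
import java.util.Map;

/** Hypothetical helper for illustration only. */
final class ConnectionStringSource {
    // Assumed variable name; choose whatever fits your deployment.
    static final String ENV_NAME = "KAFKA_JDBC_URL";
    static final String PREFIX = "jdbc:cdata:apachekafka:";

    /**
     * Returns the trimmed connection string from the given environment map,
     * or throws if it is missing or does not start with the driver prefix.
     */
    static String resolve(Map<String, String> env) {
        String url = env.get(ENV_NAME);
        if (url == null || url.trim().isEmpty()) {
            throw new IllegalStateException(ENV_NAME + " is not set");
        }
        url = url.trim();
        if (!url.startsWith(PREFIX)) {
            throw new IllegalStateException(ENV_NAME + " does not start with " + PREFIX);
        }
        return url;
    }

    public static void main(String[] args) {
        try {
            String url = resolve(System.getenv());
            // Avoid printing the string itself, since it contains secrets.
            System.out.println("Connection string found, length " + url.length());
        } catch (IllegalStateException ex) {
            System.out.println("Not configured: " + ex.getMessage());
        }
    }
}
```

Passing the environment in as a map keeps the method easy to exercise locally without touching the real process environment.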

Create an AWS Lambda Function

- Download the CData JDBC Driver for Apache Kafka installer, unzip the package, and run the JAR file to install the driver.

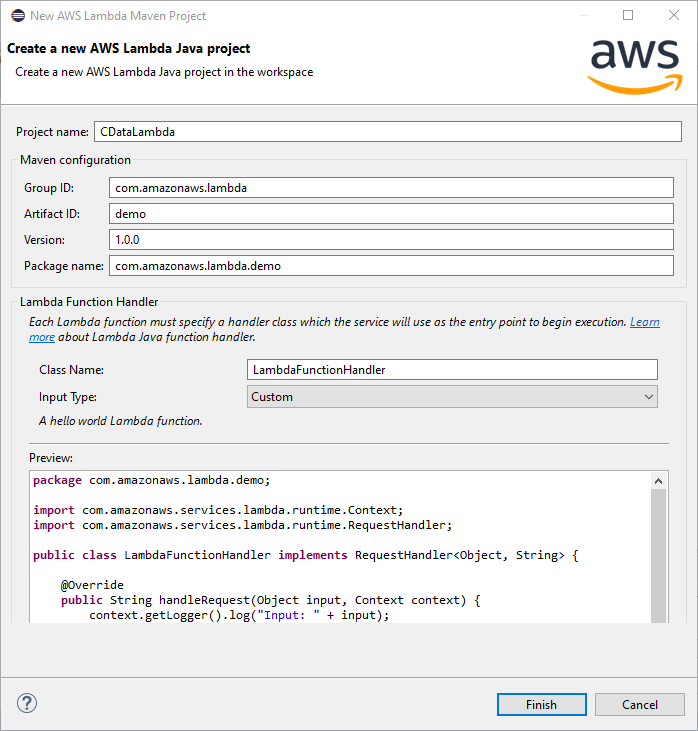

- Create a new AWS Lambda Java Project in Eclipse using the AWS Toolkit for Eclipse. You can follow the tutorial from AWS (amazon.com).

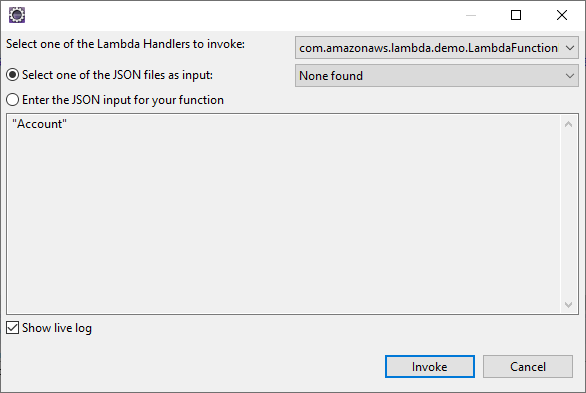

For this article, set the Input Type for the project to "Custom" so we can enter a table name as the input.

![Creating a new AWS Lambda Java project]()

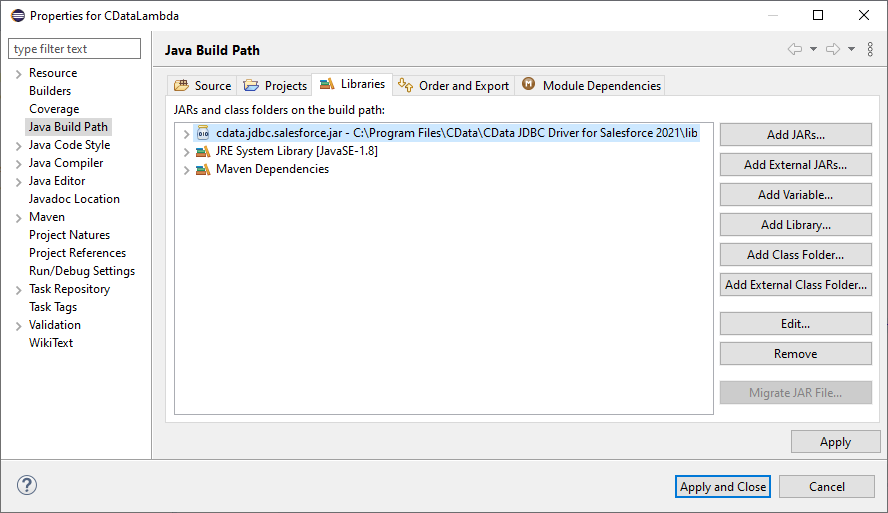

- Add the CData JDBC Driver for Apache Kafka JAR file (cdata.jdbc.apachekafka.jar) to the build path. The file is found in INSTALL_PATH\lib\.

![Adding the JDBC Driver JAR file]()

- Add the following import statements to the Java class:

    import java.sql.Connection;
    import java.sql.DriverManager;
    import java.sql.ResultSet;
    import java.sql.ResultSetMetaData;
    import java.sql.SQLException;
    import java.sql.Statement;

- Replace the body of the handleRequest method with the code below. Be sure to fill in the connection string in the DriverManager.getConnection method call.

    String query = "SELECT * FROM " + input;

    // Load the driver class so that a missing JAR shows up in the logs
    try {
        Class.forName("cdata.jdbc.apachekafka.ApacheKafkaDriver");
    } catch (ClassNotFoundException ex) {
        context.getLogger().log("Error: class not found");
    }

    // Open the connection; replace the sample connection string with your own
    Connection connection = null;
    try {
        connection = DriverManager.getConnection("jdbc:cdata:apachekafka:RTK=52465...;User=admin;Password=pass;BootStrapServers=https://localhost:9091;Topic=MyTopic;");
    } catch (SQLException ex) {
        context.getLogger().log("Error getting connection: " + ex.getMessage());
    } catch (Exception ex) {
        context.getLogger().log("Error: " + ex.getMessage());
    }

    // Stop here if the connection failed, rather than failing on a null connection below
    if (connection == null) {
        return "query: " + query + " failed: no connection";
    }
    context.getLogger().log("Connected Successfully!\n");

    // Execute the query and print each row; the resources are closed when the block exits
    try (Connection conn = connection;
         Statement stmt = conn.createStatement();
         ResultSet resultSet = stmt.executeQuery(query)) {
        ResultSetMetaData metaData = resultSet.getMetaData();
        int numCols = metaData.getColumnCount();
        while (resultSet.next()) {
            for (int i = 1; i <= numCols; i++) {
                Object value = resultSet.getObject(i);
                System.out.printf("%-25s", (value != null) ? value.toString().replaceAll("\n", "") : null);
            }
            System.out.print("\n");
        }
    } catch (SQLException ex) {
        System.out.println("SQL Exception: " + ex.getMessage());
    } catch (Exception ex) {
        System.out.println("General exception: " + ex.getMessage());
    }

    String output = "query: " + query + " complete";
    return output;
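The handler code concatenates the function input directly into the SQL text, so it may be worth checking that the input looks like a plain table name before using it. The sketch below is one possible guard. The `TableNameGuard` class is hypothetical, and the allowed pattern (letters, digits, and underscores) is an assumption you would widen if your topic names contain other characters.

```java
import java.util.regex.Pattern;

/** Hypothetical helper for illustration only. */
final class TableNameGuard {
    // Assumed naming rule: starts with a letter or underscore,
    // followed by letters, digits, or underscores.
    private static final Pattern IDENTIFIER = Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    /** Builds the SELECT statement only if the input is a simple identifier. */
    static String selectAllFrom(Object input) {
        String name = String.valueOf(input).trim();
        if (!IDENTIFIER.matcher(name).matches()) {
            throw new IllegalArgumentException("Unexpected table name: " + name);
        }
        return "SELECT * FROM " + name;
    }

    public static void main(String[] args) {
        System.out.println(selectAllFrom("SampleTable_1"));
        try {
            selectAllFrom("SampleTable_1; DROP TABLE x");
        } catch (IllegalArgumentException ex) {
            System.out.println("Rejected: " + ex.getMessage());
        }
    }
}
```

In the handler, the first line could then call `TableNameGuard.selectAllFrom(input)` and return an error message when the input is rejected.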

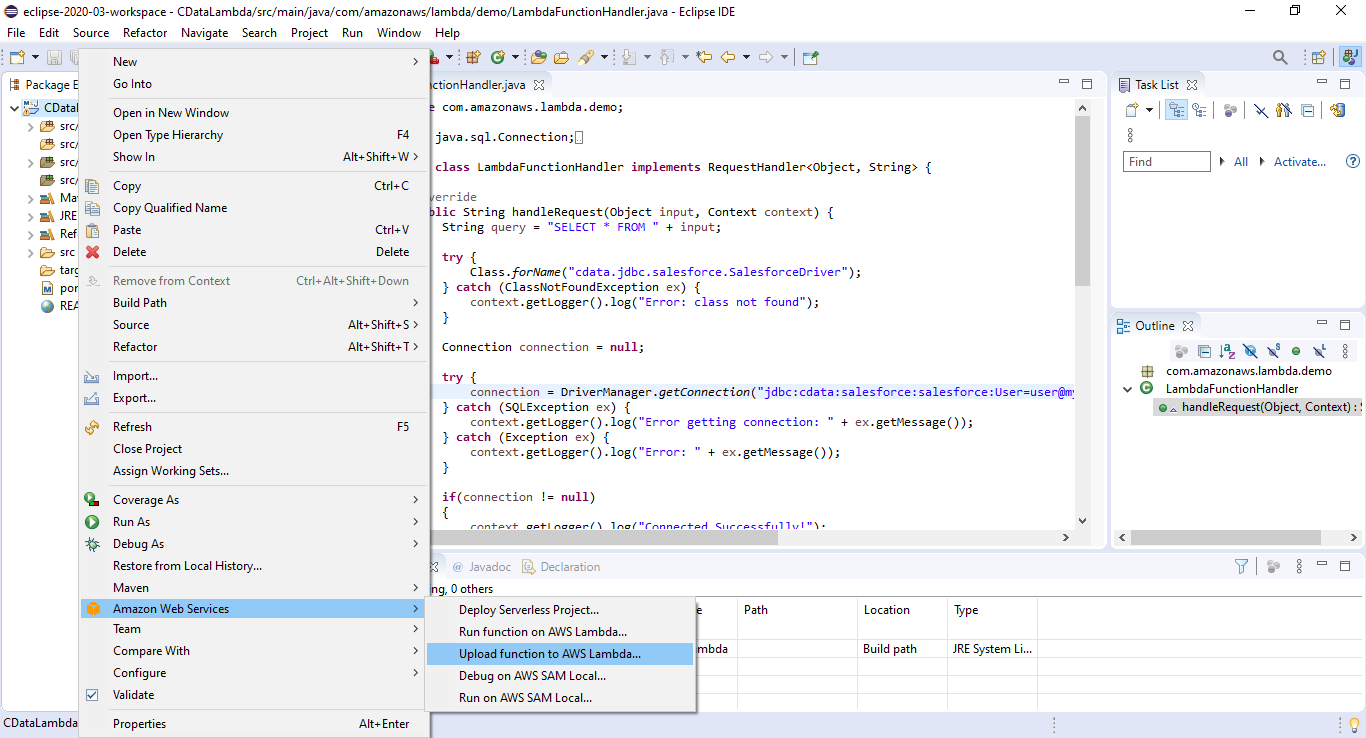

Deploy and Run the Lambda Function

Once you build the function in Eclipse, you are ready to upload and run it. In this article, the output is written to the AWS logs, but you can use this as a template to implement your own custom business logic for working with Kafka data in AWS Lambda functions.

- Right-click the Package and select Amazon Web Services -> Upload function to AWS Lambda.

![Uploading the function to AWS Lambda]()

- Name the function, select an IAM role, and set the timeout high enough for the function to complete (the time needed depends on the result size of your query).

- Right-click the Package and select Amazon Web Services -> Run function on AWS Lambda, then set the input to the name of the Kafka object you wish to query (for example, "SampleTable_1").

![Entering the table name as input]()

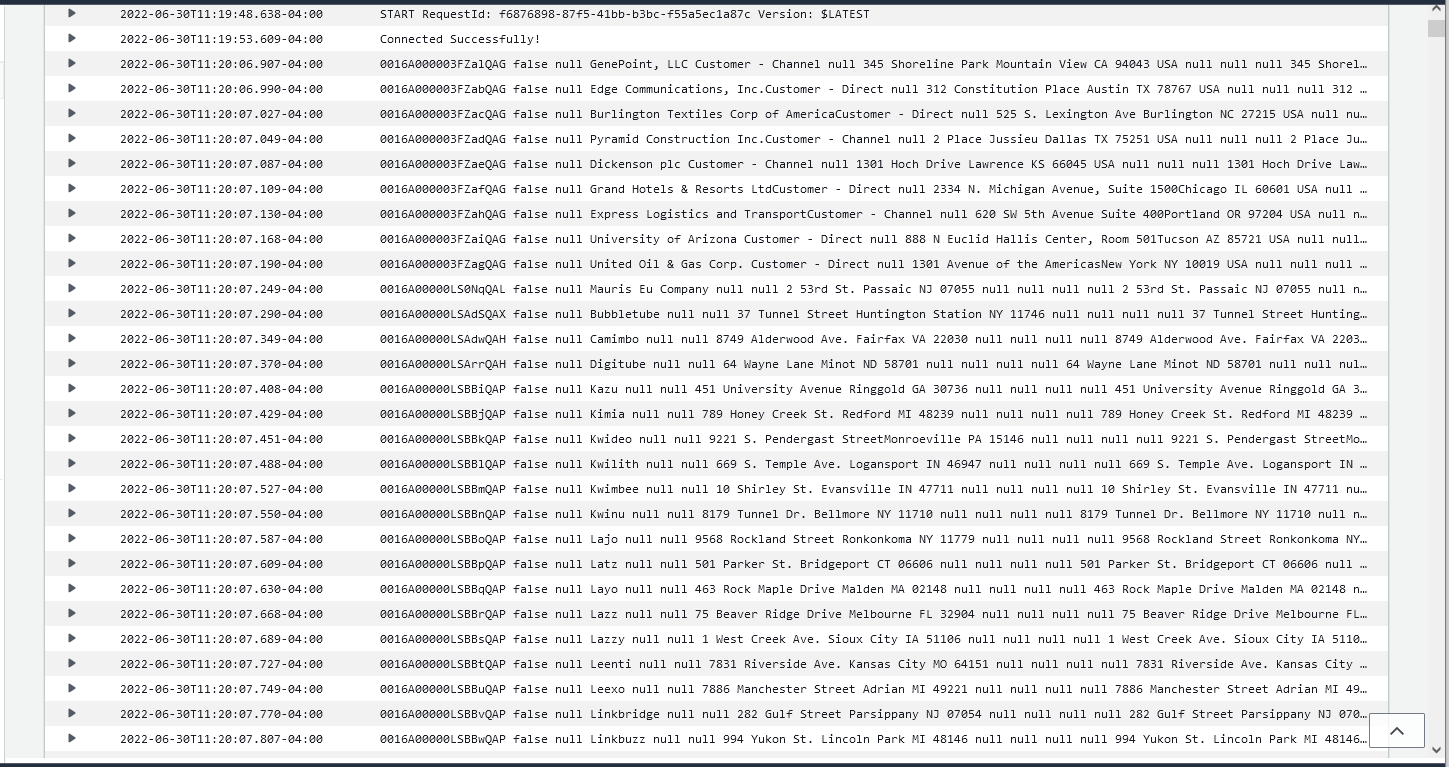

- After the job runs, you can view the output in the CloudWatch logs.

![The data in AWS CloudWatch (Salesforce is shown).]()

Free Trial & More Information

Download a free, 30-day trial of the CData JDBC Driver for Apache Kafka and start working with your live Kafka data in AWS Lambda. Reach out to our Support Team if you have any questions.