Discover how a bimodal integration strategy can address the major data management challenges facing your organization today.

Get the Report →Google BigQuery: Million Row Challenge

Use the CData JDBC Driver to upload one million rows into Google BigQuery in just over twenty minutes --

a task that is not possible with the Google-supported drivers.

The metrics in this article were found using the most up-to-date drivers available as of October 2017. Find new performance metrics in our updated article.

Unmatched Performance With BigQuery Data Uploads

As outlined in several other articles, our drivers are industry leaders when it comes to performance. Our developers have spent countless hours optimizing the performance in processing data for reads and writes, ensuring that our drivers are not the bottleneck that limits your ability to succeed.

In the context of Google BigQuery, we are able to upload one million rows into a table in just over twenty minutes. According to the Google BigQuery documentation, the drivers available from Google "leverage the query interface for BigQuery" and do not "leverage BigQuery's large scale ingestion mechanisms or export functionality." While you can "issue small volumes of INSERT requests", any attempts to load large data sets cause you to hit the rate limits for the BigQuery Data Manipulation Language.

The Test

To reproduce our results, you need only download a trial of the CData JDBC Driver for Google BigQuery and configure a BigQuery table. From there, you are prepared to experience the CData difference by loading data faster than with any other driver. For this article, we used a simple, custom Java application. Thanks to the breadth of our offerings, you have connectivity to many other tools, like Excel, SSIS, and PowerShell, and including any tools that offer native support for JDBC, ODBC, and ADO.NET.

- Configure a BigQuery table. For our sample, we modeled data after the trips table from the yellow dataset in the public nyc-tlc project (https://cloud.google.com/bigquery/public-data/nyc-tlc-trips).

- Download a free trial of the CData JDBC Driver for Google BigQuery.

- Download a CSV file with sample data.

- Create a simple application to batch the data from the CSV file and load each batch into Google BigQuery. Our application made use of the addBatch() method for a PreparedStatement to maximize the size of each batch (and therefore minimize the number of calls made to Google BigQuery).

Sample Code

//one batch Connection connection = DriverManager.getConnection("jdbc:googlebigquery:InitiateOAuth=GETANDREFRESH;QueryPassthrough=false;ProjectId=" + projectId + ";DatasetId=" + datasetId + ";Timeout=240;UseLegacySQL=false;"); String cmd = "INSERT INTO TestDataset2.nyc_yellow_trips_copy (vendor_id, pickup_datetime, dropoff_datetime, pickup_longitude, pickup_latitude, dropoff_longitude, dropoff_latitude, rate_code, passenger_count, trip_distance, payment_type, fare_amount, extra, mta_tax, imp_surcharge, tip_amount, tolls_amount, total_amount, store_and_fwd_flag) VALUES (?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?,?)"; PreparedStatement pstmt = connection.prepareStatement(cmd); for (int row = 0; row < rows.length; row++){ String[] line = rows[row].split(","); for (int i = 0 ; i < line.length; i++) { //add parameters based on datatype if ( (i < 3) || (i ==7) || (i==10) || (i==18) ) { pstmt.setString(i + 1, line[i]); } else if (i == 8) { pstmt.setInt(i+1, Integer.parseInt(line[i])); } else { pstmt.setDouble(i+1, Double.parseDouble(line[i])); } } pstmt.addBatch(); } int[] affected = pstmt.executeBatch(); - Execute the application/workflow.

The Results

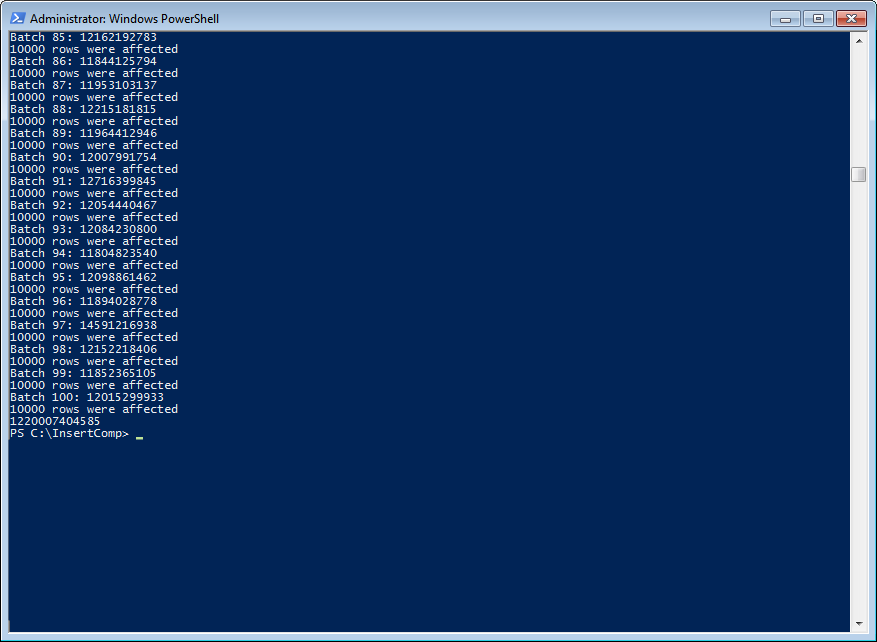

Below, you can see the results of loading one million rows in 100 batches (the maximum number of rows per batch is 10,000), printing the number of nanoseconds for each batch, along with the total number of nanoseconds required to insert the entire set (approximately 20 minutes, 20 seconds):

More Information & Free Trials

The ability to bulk upload of millions of records of data to Google BigQuery (and do so quickly!) is just one of the benefits of using the CData drivers. With our richly featured, high-performance drivers, you get a single, uniform experience with all of your data, no matter where it is (cloud-based or on-premises, SaaS or application-based, NoSQL or RDBMS), backed by a world-class Support Team. Download a free, 30 day trial of any of our Google BigQuery drivers and experience the CData difference today.