
Access Live Spark Data in Coginiti Pro

Connect to and query live Spark data from the GUI in the Coginiti Pro: SQL Analytics Tool.

Coginiti Pro is a single tool for all your SQL data and analytics needs, designed specifically for data engineers, analysts, and data scientists. When paired with the CData JDBC Driver for Apache Spark, Coginiti Pro can access and query live Spark data. This article describes how to connect to and query Spark data from Coginiti Pro.

With built-in optimized data processing, the CData JDBC Driver for Apache Spark is designed for fast interaction with live Spark data. When you issue complex SQL queries to Spark, the driver pushes supported SQL operations, such as filters and aggregations, directly to Spark and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. In addition, built-in dynamic metadata querying lets you work with and analyze Spark data using native data types.

Gather Connection Properties and Build a Connection String

Download the CData JDBC Driver for Apache Spark installer, unzip the package, and run the JAR file to install the driver. Then gather the required connection properties.

Set the Server, Database, User, and Password connection properties to connect to SparkSQL.

NOTE: To use the JDBC driver in Coginiti Pro, you may need a license (full or trial) and a Runtime Key (RTK). For more information on obtaining this license (or a trial), contact our sales team.

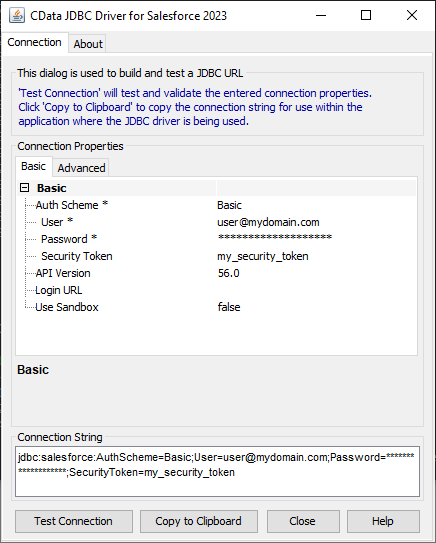

Built-in Connection String Designer

For assistance constructing the JDBC URL, use the connection string designer built into the Spark JDBC Driver. Either double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.sparksql.jar

Fill in the connection properties (including the RTK) and copy the connection string to the clipboard.
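If you would rather assemble the URL in code than copy it from the designer, the following is a minimal sketch. It assumes the `Key=Value;` layout seen in the example URL used in this article (`jdbc:sparksql:Server=127.0.0.1;`). The class name `SparkJdbcUrl` and every property value are placeholders, and values that themselves contain a semicolon are not handled.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper (not part of the CData driver): joins connection
// properties into a JDBC URL of the form jdbc:sparksql:Key=Value;Key=Value;
class SparkJdbcUrl {
    static final String PREFIX = "jdbc:sparksql:";

    static String build(Map<String, String> props) {
        StringBuilder url = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> e : props.entrySet()) {
            // Assumption: values contain no ';' and need no escaping.
            url.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the properties in insertion order.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Server", "127.0.0.1");
        props.put("Database", "default");   // placeholder value
        props.put("User", "spark_user");    // placeholder value
        props.put("Password", "change-me"); // placeholder value
        System.out.println(build(props));
    }
}
```

Any additional property, such as the Runtime Key, could be added to the map in the same way; check the driver documentation for the exact property names.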

Create a JDBC Data Source for Spark Data

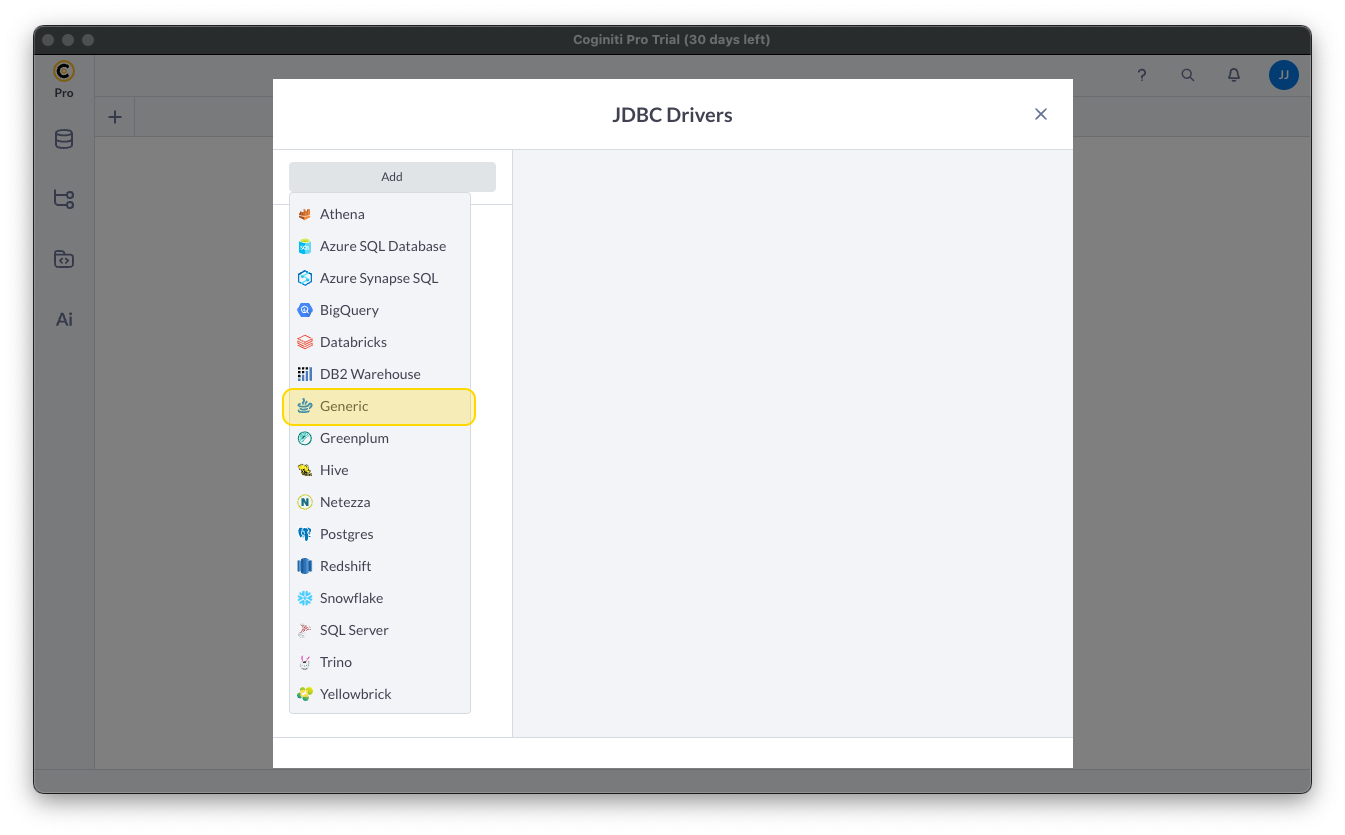

- Open Coginiti Pro and in the File menu, select "Edit Drivers."

- In the newly opened wizard, click "Add" and select "Generic."

![Adding a Generic JDBC Driver.]()

In the "JDBC Drivers" wizard, set the driver properties (below) and click "Create Driver."

- Set JDBC Driver Name to a useful name, like CData JDBC Driver for Spark.

- Click "Add Files" to add the JAR file from the "lib" folder in the installation directory (e.g., cdata.jdbc.sparksql.jar).

- Select the Class Name: cdata.jdbc.sparksql.SparkSQLDriver.

![Creating a new Generic data source (Salesforce is shown).]()
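If Coginiti Pro does not list the class name, it can help to confirm outside the tool that the class is actually present in the JAR. The sketch below is one way to do that, assuming you run it with the driver JAR on the classpath; `DriverCheck` is a hypothetical helper name, and only the class name `cdata.jdbc.sparksql.SparkSQLDriver` comes from this article.

```java
// Hypothetical helper: reports whether a class can be loaded from the classpath.
class DriverCheck {
    static boolean isOnClasspath(String className) {
        try {
            Class.forName(className);
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String driver = "cdata.jdbc.sparksql.SparkSQLDriver";
        if (isOnClasspath(driver)) {
            System.out.println(driver + " was found on the classpath.");
        } else {
            System.out.println(driver + " was not found; check that the JAR is on the classpath.");
        }
    }
}
```

For example, something like `java -cp cdata.jdbc.sparksql.jar:. DriverCheck` on Linux or macOS (use `;` as the separator on Windows), adjusting the path to wherever the JAR was installed.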

Create a Connection using the CData JDBC Driver for Apache Spark

- In the File menu, click "Edit Connections."

- In the newly opened wizard, click "Add" and select "Generic."

![Adding a Generic JDBC Connection.]()

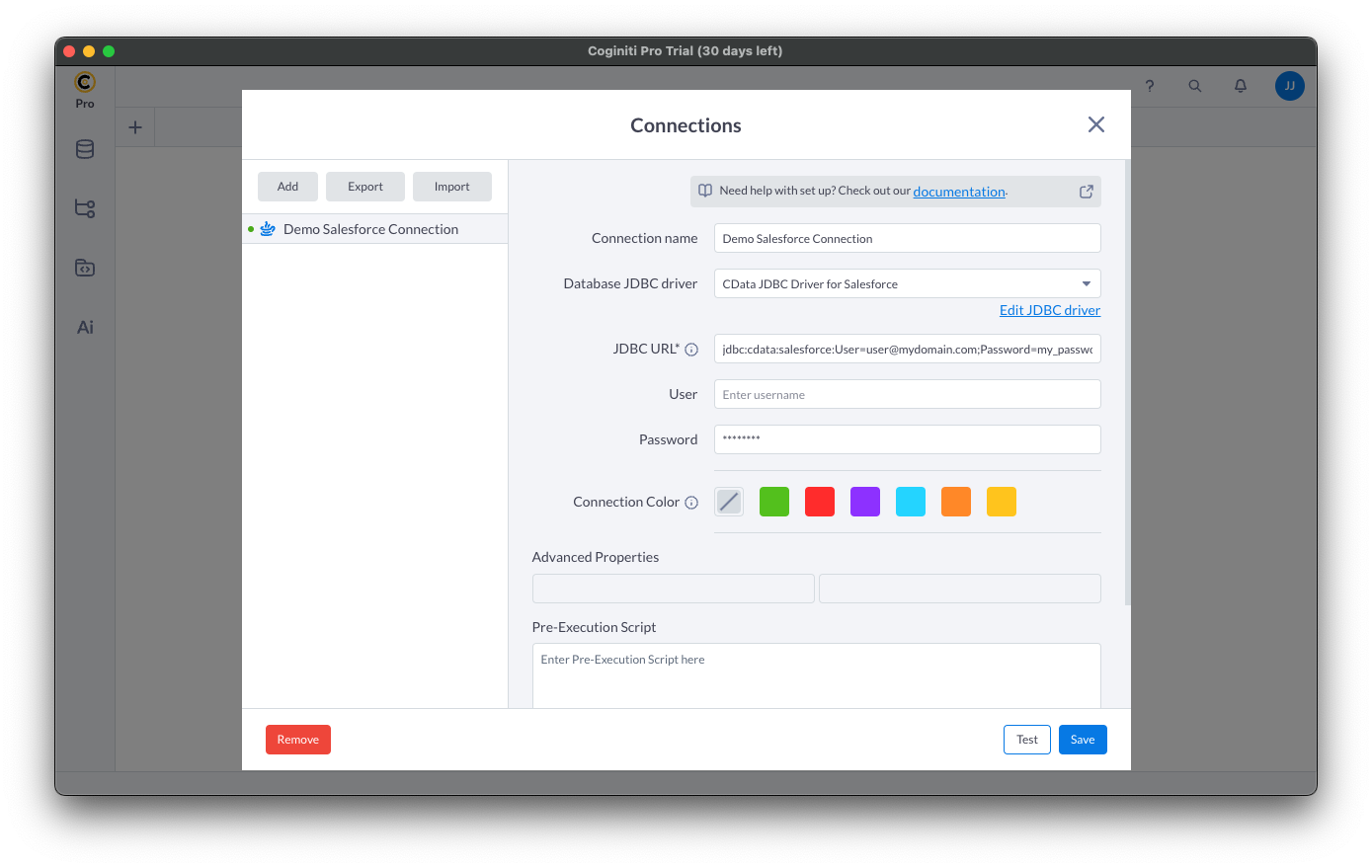

- In the "Connections" wizard, set the connection properties.

- Set Connection name to an identifying name.

- Set Database JDBC driver to the Driver you configured earlier.

- Set JDBC URL to the JDBC URL configured using the built-in connection string designer (e.g. jdbc:sparksql:Server=127.0.0.1;

![Creating a connection (Salesforce is shown).]()

- Click "Test" to ensure the connection is configured properly. Click "Save."

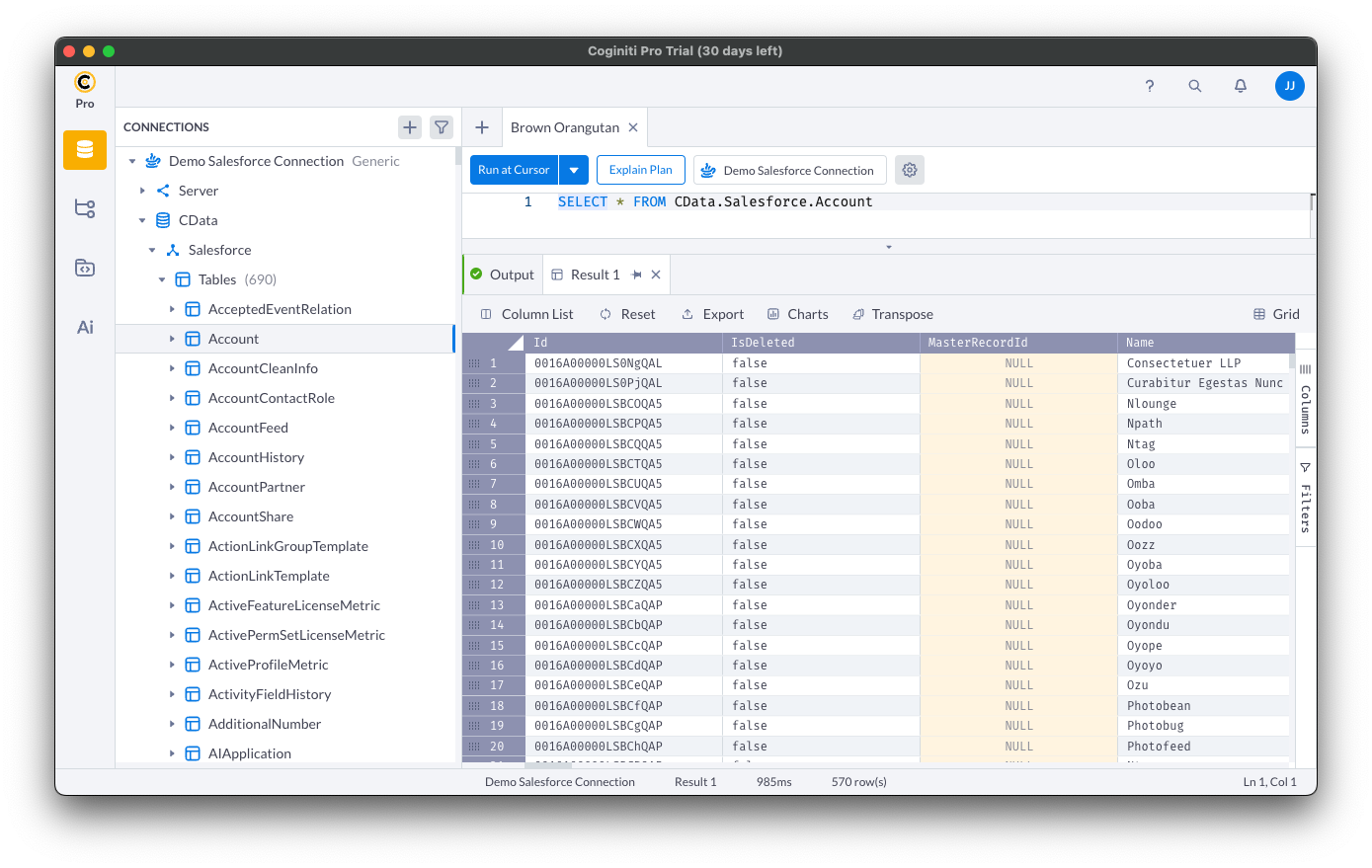
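The same kind of check that the "Test" button performs can be approximated from plain JDBC, which is useful when diagnosing whether a failure comes from the URL or from the tool configuration. This is a rough sketch, not a description of what Coginiti Pro does internally; `ConnectionCheck` is a hypothetical name, and the default URL is the example fragment from this article, which will need your own properties appended.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

// Hypothetical helper: opens a connection and asks the driver whether it is valid.
class ConnectionCheck {
    static boolean canConnect(String jdbcUrl, int timeoutSeconds) {
        try (Connection conn = DriverManager.getConnection(jdbcUrl)) {
            return conn.isValid(timeoutSeconds);
        } catch (SQLException e) {
            // Covers a missing driver, a malformed URL, and server-side failures.
            System.err.println("Connection failed: " + e.getMessage());
            return false;
        }
    }

    public static void main(String[] args) {
        // Pass your full JDBC URL as the first argument.
        String url = args.length > 0 ? args[0] : "jdbc:sparksql:Server=127.0.0.1;";
        System.out.println(canConnect(url, 5) ? "Connection OK" : "Connection not available");
    }
}
```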

Query Spark Using SQL

- Open the Connections tab by clicking the database icon.

- Click the plus sign to add a new query tab.

Once the query console is open, write the SQL script you wish to execute and click "Run at Cursor".

NOTE: You can use the explorer on the left to determine table/view names and column names.
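For comparison, the query you type into the console could also be run through the driver from a small Java program. The following is a minimal sketch under stated assumptions: `SparkQuery` is a hypothetical class, and the `Customers` table with its `City` and `Balance` columns is invented for illustration, so substitute names from your own Spark catalog.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.ArrayList;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical helper: runs a query and returns each row as a "a | b | c" string.
class SparkQuery {
    static List<String> fetchRows(String jdbcUrl, String sql) throws SQLException {
        List<String> rows = new ArrayList<>();
        try (Connection conn = DriverManager.getConnection(jdbcUrl);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(sql)) {
            int columnCount = rs.getMetaData().getColumnCount();
            while (rs.next()) {
                StringJoiner row = new StringJoiner(" | ");
                for (int i = 1; i <= columnCount; i++) { // JDBC columns are 1-based
                    row.add(String.valueOf(rs.getObject(i)));
                }
                rows.add(row.toString());
            }
        }
        return rows;
    }

    public static void main(String[] args) {
        String url = args.length > 0 ? args[0] : "jdbc:sparksql:Server=127.0.0.1;";
        // Hypothetical table and column names.
        String sql = "SELECT City, Balance FROM Customers WHERE Balance > 1000";
        try {
            fetchRows(url, sql).forEach(System.out::println);
        } catch (SQLException e) {
            System.err.println("Query failed: " + e.getMessage());
        }
    }
}
```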

Using the explorer

- In the "Select connection" field, select the connection you wish to query.

- Expand your newly created connection, expand the "CData" catalog, and expand the Spark catalog.

- Expand "Tables" or "Views" to find the entity you wish to query.

- Expand your selected entity to explore the fields (columns).

![Querying data (Salesforce is shown).]()
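The table and column names shown in the explorer can also be read through the standard JDBC metadata API. This sketch assumes the driver exposes tables and views through `DatabaseMetaData` in the usual way; `SparkExplorer` is a hypothetical class name, and which catalogs and schemas appear depends on your Spark instance.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists tables, views, and columns via JDBC metadata.
class SparkExplorer {
    static List<String> listTables(Connection conn) throws SQLException {
        List<String> names = new ArrayList<>();
        DatabaseMetaData meta = conn.getMetaData();
        // null catalog/schema means "do not filter"; "%" matches any table name.
        try (ResultSet rs = meta.getTables(null, null, "%", new String[] {"TABLE", "VIEW"})) {
            while (rs.next()) {
                names.add(rs.getString("TABLE_NAME"));
            }
        }
        return names;
    }

    static List<String> listColumns(Connection conn, String table) throws SQLException {
        List<String> columns = new ArrayList<>();
        try (ResultSet rs = conn.getMetaData().getColumns(null, null, table, "%")) {
            while (rs.next()) {
                columns.add(rs.getString("COLUMN_NAME") + " (" + rs.getString("TYPE_NAME") + ")");
            }
        }
        return columns;
    }

    public static void main(String[] args) {
        String url = args.length > 0 ? args[0] : "jdbc:sparksql:Server=127.0.0.1;";
        try (Connection conn = DriverManager.getConnection(url)) {
            for (String table : listTables(conn)) {
                System.out.println(table + ": " + listColumns(conn, table));
            }
        } catch (SQLException e) {
            System.err.println("Could not read metadata: " + e.getMessage());
        }
    }
}
```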

Free Trial & More Information

Download a free, 30-day trial of the CData JDBC Driver for Apache Spark and start working with your live Spark data in Coginiti Pro. Reach out to our Support Team if you have any questions.