Parquet to Databricks Data Integration

The leading hybrid-cloud solution for Databricks integration. Automated continuous ETL/ELT data replication from Parquet to Databricks.

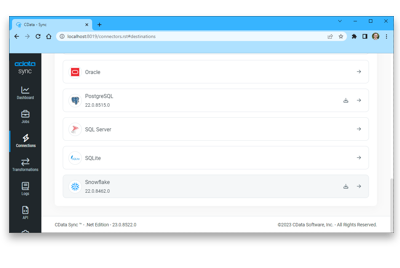

Whether you're managing operational reporting, connecting data for analytics, or ensuring disaster recovery, CData Sync's no-code approach to data integration simplifies the process of putting Parquet data to work.

Parquet:

Apache Parquet is a columnar storage file format designed for efficient data storage and processing in big data environments. It supports complex nested data structures and provides high compression ratios, making it ideal for analytics workloads. Parquet is widely used in Apache Hadoop and Spark ecosystems for fast and scalable data processing.
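To make the columnar idea concrete, here is a minimal Python sketch. It is a toy illustration, not Parquet's actual on-disk format or encoding scheme. The sample records and the helper names `to_columns` and `run_length_encode` are hypothetical. It shows two reasons a column layout suits analytics: a query can read just the column it needs, and repeated values within a column compress well.

```python
from itertools import groupby

# Hypothetical sample records in a row-oriented layout.
rows = [
    {"id": 1, "country": "US", "amount": 10.0},
    {"id": 2, "country": "US", "amount": 12.5},
    {"id": 3, "country": "US", "amount": 7.0},
    {"id": 4, "country": "DE", "amount": 3.0},
]


def to_columns(records):
    """Pivot a list of row dicts into a dict of column lists."""
    return {name: [r[name] for r in records] for name in records[0]}


def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


columns = to_columns(rows)

# An aggregate touches only the "amount" column, not whole rows.
total = sum(columns["amount"])

# Repeated values in a single column shrink to a few pairs.
encoded = run_length_encode(columns["country"])
```

Real Parquet files add row groups, column chunks, statistics, and more sophisticated encodings on top of this basic idea.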

Databricks:

Databricks is a unified data analytics platform that allows organizations to easily process, analyze, and visualize large amounts of data. It combines data engineering, data science, and machine learning capabilities in a single platform, making it easier for teams to collaborate and derive insights from their data.

Integrate Parquet and Databricks with CData Sync

CData Sync provides a straightforward way to continuously move your Apache Parquet data to any database, data lake, or data warehouse, making it readily available for analytics, reporting, AI, and machine learning.

- Synchronize data with a wide range of traditional and emerging databases, including Databricks.

- Replicate Parquet data to databases and data warehouse systems to facilitate operational reporting, BI, and analytics.

- Offload queries from Parquet to reduce load and increase performance.

- Connect Parquet to business analytics for BI and decision support.

- Archive Apache Parquet data for disaster recovery.

Parquet Data Integration Features

Simple no-code Parquet data integration

Ditch the code and complex setups to move more data in less time. Connect Parquet to any destination with drag-and-drop ease.

Hassle-free data pipelines in minutes

Incremental updates and automatic schema replication eliminate the headaches of Parquet data integration, ensuring Databricks always has the latest data.
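For readers curious what incremental updates and schema replication mean in practice, the following Python sketch shows one common pattern: a high-water mark on a change column plus upserts by key. It is an illustration under stated assumptions, not CData Sync's implementation. The function `sync_incremental`, the `updated_at` cursor column, and the in-memory `target` and `state` dictionaries are all hypothetical stand-ins for a real source and destination.

```python
def sync_incremental(source_rows, target, state, key="id", cursor="updated_at"):
    """Copy only rows changed since the last run, then advance the watermark."""
    watermark = state.get("watermark")
    changed = [
        row for row in source_rows
        if watermark is None or row[cursor] > watermark
    ]
    for row in changed:
        # Schema replication: register any column the target has not seen yet.
        for column in row:
            if column not in target["columns"]:
                target["columns"].append(column)
        # Upsert by key so a re-sent row overwrites the older version.
        target["rows"][row[key]] = dict(row)
    if changed:
        state["watermark"] = max(row[cursor] for row in changed)
    return len(changed)


# Hypothetical in-memory stand-ins for the destination table and job state.
target = {"columns": [], "rows": {}}
state = {}
source = [
    {"id": 1, "updated_at": 1, "name": "a"},
    {"id": 2, "updated_at": 2, "name": "b"},
]

first = sync_incremental(source, target, state)   # initial load copies both rows

# Later, one row is updated and a new row arrives with an extra column.
source[0] = {"id": 1, "updated_at": 4, "name": "a2"}
source.append({"id": 3, "updated_at": 3, "name": "c", "region": "EU"})

second = sync_incremental(source, target, state)  # only the two changed rows
third = sync_incremental(source, target, state)   # nothing new to copy
```

The design choice here is that each run copies only rows newer than the stored watermark, so the cost of a sync tracks the volume of changes rather than the size of the full dataset.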

Don't pay for every row

Replicate all the data that matters with predictable, transparent pricing. Unlimited replication between Parquet and Databricks.