Analyze Azure Data Lake Storage Data in R

Use standard R functions and the development environment of your choice to analyze Azure Data Lake Storage data with the CData JDBC Driver for Azure Data Lake Storage.

Access Azure Data Lake Storage data with pure R script and standard SQL on any machine where R and Java can be installed. You can use the CData JDBC Driver for Azure Data Lake Storage and the RJDBC package to work with remote Azure Data Lake Storage data in R. Because the CData driver is built on the JDBC standard, you can reach your data from the open-source R language through the same interfaces you would use for any other JDBC source. This article shows how to use the driver to execute SQL queries against Azure Data Lake Storage and visualize the results by calling standard R functions.

Install R

To complement the driver's performance gains from multithreading and managed code, run the multithreaded Microsoft R Open, or run open-source R linked with optimized BLAS/LAPACK libraries. This article uses Microsoft R Open 3.2.3, which is preconfigured to install packages from the Jan. 1, 2016 snapshot of the CRAN repository. Pinning packages to a fixed snapshot ensures reproducibility.

Load the RJDBC Package

To use the driver, install the RJDBC package from CRAN, for example with install.packages("RJDBC"). After the package is installed, the following line loads it:

library(RJDBC)

Connect to Azure Data Lake Storage as a JDBC Data Source

You will need the following information to connect to Azure Data Lake Storage as a JDBC data source:

- Driver Class: Set this to cdata.jdbc.adls.ADLSDriver

- Classpath: Set this to the location of the driver JAR. By default this is the lib subfolder of the installation folder.

The DBI functions, such as dbConnect and dbSendQuery, provide a unified interface for writing data access code in R. Use the following line to initialize a DBI driver that can make JDBC requests to the CData JDBC Driver for Azure Data Lake Storage:

driver <- JDBC(driverClass = "cdata.jdbc.adls.ADLSDriver", classPath = "MyInstallationDir/lib/cdata.jdbc.adls.jar", identifier.quote = "'")
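Windows-style backslashes inside an R string literal start escape sequences, so a path such as "MyInstallationDir\lib\cdata.jdbc.adls.jar" fails to parse. The sketch below is a hypothetical helper, adls_jar_path() (not part of RJDBC or the CData driver), that builds the classpath with base R's file.path() and stops early if the JAR is missing. It assumes the default lib subfolder layout described above.

```r
# Hypothetical helper (not part of RJDBC or the CData driver): locate the
# ADLS JDBC JAR under an installation directory, assuming the default lib/ layout.
adls_jar_path <- function(install_dir, jar_name = "cdata.jdbc.adls.jar") {
  # file.path() joins components with "/", which R also accepts on Windows
  jar <- file.path(install_dir, "lib", jar_name)
  if (!file.exists(jar)) {
    stop("JDBC driver JAR not found: ", jar, call. = FALSE)
  }
  normalizePath(jar)
}

# Usage (requires RJDBC and the installed driver):
# driver <- JDBC(driverClass = "cdata.jdbc.adls.ADLSDriver",
#                classPath = adls_jar_path("MyInstallationDir"),
#                identifier.quote = "'")
```

Failing early with a clear message is easier to diagnose than the class-loading error JDBC() reports when the classpath points at a file that does not exist.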

You can now use DBI functions to connect to Azure Data Lake Storage and execute SQL queries. Initialize the JDBC connection with the dbConnect function.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

For this, an Azure Active Directory web application is required. The application Id and the key generated for that application are used in the properties below.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
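The connection properties listed above are combined into a single JDBC URL of the form jdbc:adls:Key=Value;Key=Value, as in the sample dbConnect call later in this article. The sketch below is a hypothetical helper, build_adls_url(), that assembles such a URL from a named R list and drops the optional Directory when it is left empty. The property values are placeholders, and the helper does not escape values, so it rejects any that contain a semicolon.

```r
# Hypothetical helper (not part of the CData driver): assemble a JDBC URL of
# the form "jdbc:adls:Key1=Value1;Key2=Value2" from a named list of properties.
build_adls_url <- function(props) {
  stopifnot(is.list(props), !is.null(names(props)), all(nzchar(names(props))))
  # Drop properties left as NULL or "" (e.g., the optional Directory, which
  # falls back to the root directory when not specified)
  keep <- vapply(props, function(v) !is.null(v) && nzchar(v), logical(1))
  vals <- vapply(props[keep], as.character, character(1))
  # This sketch does not escape values, so reject ones containing ';'
  if (any(grepl(";", vals, fixed = TRUE))) {
    stop("property values containing ';' are not supported by this sketch")
  }
  paste0("jdbc:adls:", paste0(names(vals), "=", vals, collapse = ";"))
}

# Example with placeholder Gen 1 values (replace with your own)
url <- build_adls_url(list(
  Schema = "ADLSGen1",
  Account = "myAccount",
  OAuthClientId = "myClientId",
  OAuthClientSecret = "myClientSecret",
  TenantId = "myTenantId",
  Directory = NULL
))
```

The resulting string can be passed as the second argument to dbConnect(driver, url).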

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key used to authenticate calls to the API. See the AccessKey property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

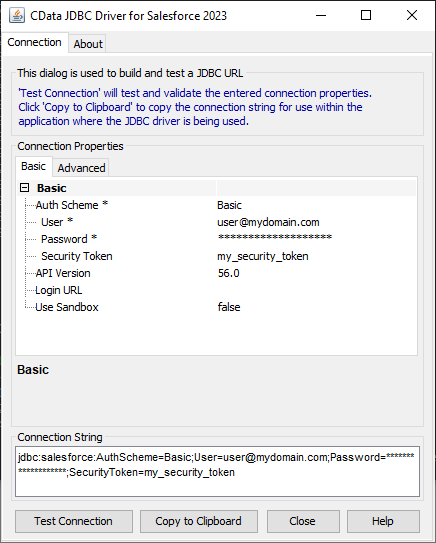
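Because Gen 1 and Gen 2 accounts need different properties, it can help to check a property set before calling dbConnect. The sketch below is a hypothetical helper, missing_adls_props(), whose required-property sets are taken from the two lists above. Directory is left out because it is optional, and treating empty strings as missing is an assumption of this sketch.

```r
# Required properties per Schema, taken from the Gen 1 and Gen 2 lists
# (Directory is optional and therefore not listed)
adls_required <- list(
  ADLSGen1 = c("Account", "OAuthClientId", "OAuthClientSecret", "TenantId"),
  ADLSGen2 = c("Account", "FileSystem", "AccessKey")
)

# Hypothetical helper (not part of the CData driver): return the names of
# required properties that are absent, NULL, or empty for the given Schema.
missing_adls_props <- function(props) {
  schema <- props[["Schema"]]
  if (is.null(schema) || !schema %in% names(adls_required)) {
    stop("Schema must be one of: ", paste(names(adls_required), collapse = ", "))
  }
  required <- adls_required[[schema]]
  present <- vapply(required, function(k) {
    v <- props[[k]]
    !is.null(v) && nzchar(v)
  }, logical(1))
  required[!present]
}
```

An empty result means every required property for the chosen Schema has a value.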

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Azure Data Lake Storage JDBC Driver. Either double-click the JAR file or execute it from the command line:

java -jar cdata.jdbc.adls.jar

Fill in the connection properties and copy the connection string to the clipboard.

Below is a sample dbConnect call, including a typical JDBC connection string:

conn <- dbConnect(driver,"jdbc:adls:Schema=ADLSGen2;Account=myAccount;FileSystem=myFileSystem;AccessKey=myAccessKey;InitiateOAuth=GETANDREFRESH")
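Writing the access key directly into a script makes it easy to leak. The sketch below reads the Gen 2 properties from environment variables instead. The helper name adls_gen2_url_from_env() and the variable names ADLS_ACCOUNT, ADLS_FILESYSTEM, and ADLS_ACCESS_KEY are hypothetical choices. The sketch emits only the Gen 2 properties listed earlier; append other properties from the sample above, such as InitiateOAuth, if your setup needs them.

```r
# Hypothetical helper: build the Gen 2 connection string from environment
# variables so the access key is not stored in the script itself.
# The environment variable names are assumptions; use any names you prefer.
adls_gen2_url_from_env <- function(account_var = "ADLS_ACCOUNT",
                                   filesystem_var = "ADLS_FILESYSTEM",
                                   key_var = "ADLS_ACCESS_KEY") {
  # Map each connection property to the environment variable holding it
  vars <- c(Account = account_var, FileSystem = filesystem_var,
            AccessKey = key_var)
  vals <- Sys.getenv(unname(vars), unset = "")
  if (any(!nzchar(vals))) {
    stop("Unset environment variable(s): ",
         paste(vars[!nzchar(vals)], collapse = ", "), call. = FALSE)
  }
  paste0("jdbc:adls:Schema=ADLSGen2;",
         paste0(names(vars), "=", vals, collapse = ";"))
}

# Usage (requires the driver object created earlier):
# conn <- dbConnect(driver, adls_gen2_url_from_env())
```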

Schema Discovery

The driver models Azure Data Lake Storage APIs as relational tables, views, and stored procedures. Use the following line to retrieve the list of tables:

dbListTables(conn)

Execute SQL Queries

You can use the dbGetQuery function to execute any SQL query supported by the driver:

resources <- dbGetQuery(conn,"SELECT FullPath, Permission FROM Resources WHERE Type = 'FILE'")

You can view the results in a data viewer window with the following command:

View(resources)

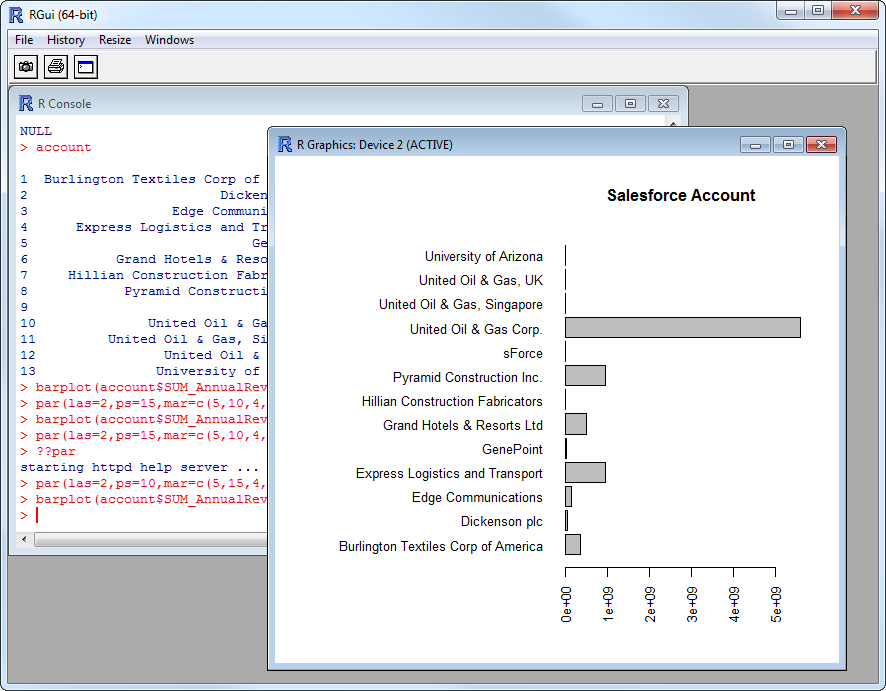
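dbGetQuery returns an ordinary R data frame, so base R can summarize the results before plotting. The sketch below is a hypothetical helper, count_by_permission(), that counts how many returned resources share each Permission value and sorts the counts in descending order. It assumes only that the data frame has a Permission column, as in the query above.

```r
# Hypothetical helper: count resources per Permission value in a data frame
# shaped like the query result above, most common permission first.
count_by_permission <- function(df) {
  stopifnot(is.data.frame(df), "Permission" %in% names(df))
  counts <- table(df$Permission, useNA = "ifany")
  out <- data.frame(Permission = names(counts),
                    Files = as.integer(counts),
                    stringsAsFactors = FALSE)
  # Sort by count (descending), breaking ties by permission string
  out[order(-out$Files, out$Permission), , drop = FALSE]
}

# Usage with the query result from above:
# perm_counts <- count_by_permission(resources)
# barplot(setNames(perm_counts$Files, perm_counts$Permission), horiz = TRUE)
```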

Plot Azure Data Lake Storage Data

You can now analyze Azure Data Lake Storage data with any of the data visualization packages available in the CRAN repository. You can create simple bar plots with R's built-in barplot function:

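# Rotate axis labels, use a 10-point font, and widen the left margin for long labels
par(las=2,ps=10,mar=c(5,15,4,2))

# barplot needs numeric heights, so plot the number of files for each Permission value
barplot(table(resources$Permission), main="Azure Data Lake Storage Resources", horiz=TRUE)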