
How to work with AlloyDB Data in Apache Spark using SQL

Access and process AlloyDB Data in Apache Spark using the CData JDBC Driver.

Apache Spark is a fast and general engine for large-scale data processing. When paired with the CData JDBC Driver for AlloyDB, Spark can work with live AlloyDB data. This article describes how to connect to and query AlloyDB data from a Spark shell.

The CData JDBC Driver is built for performance with live AlloyDB data, thanks to optimized data processing inside the driver. When you issue complex SQL queries to AlloyDB, the driver pushes supported SQL operations, such as filters and aggregations, directly to AlloyDB and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. Built-in dynamic metadata querying lets you work with and analyze AlloyDB data using native data types.

Install the CData JDBC Driver for AlloyDB

Download the CData JDBC Driver for AlloyDB installer, unzip the package, and run the JAR file to install the driver.

Start a Spark Shell and Connect to AlloyDB Data

- Open a terminal and start the Spark shell, passing the CData JDBC Driver for AlloyDB JAR file as the --jars parameter. Quote the path, because it contains spaces:

```
$ spark-shell --jars "/CData/CData JDBC Driver for AlloyDB/lib/cdata.jdbc.alloydb.jar"
```

- With the shell running, you can connect to AlloyDB with a JDBC URL and use the DataFrameReader load() function to read a table.

The following connection properties are usually required in order to connect to AlloyDB.

- Server: The host name or IP address of the server hosting the AlloyDB database.

- User: The user account used to authenticate with the AlloyDB server.

- Password: The password for that user account.

You can also optionally set the following:

- Database: The database to connect to on the AlloyDB server. If this is not set, the user's default database is used.

- Port: The port of the server hosting the AlloyDB database. Defaults to 5432.
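These properties are combined into a single semicolon-separated JDBC URL, in the same `jdbc:alloydb:Key=Value;...` form used in the Spark example later in this article. The Scala sketch below shows one way to assemble that URL in code instead of by hand. `AlloyDBUrl` and its `build` method are hypothetical helpers, not part of the CData driver, and the sketch assumes no property value contains a semicolon: it rejects such values rather than guessing at the driver's quoting rules.

```scala
// Hypothetical helper (not part of the CData driver): assembles a
// "jdbc:alloydb:Key=Value;..." URL in the format used in this article.
object AlloyDBUrl {
  // Properties the article lists as usually required.
  private val required = Seq("Server", "User", "Password")

  def build(props: Map[String, String]): String = {
    // Fail fast if a required property is absent or empty.
    val missing = required.filterNot(k => props.get(k).exists(_.nonEmpty))
    require(missing.isEmpty, s"Missing required properties: ${missing.mkString(", ")}")

    // Assumption: values containing ';' would need quoting; this sketch rejects them.
    props.foreach { case (k, v) =>
      require(!v.contains(";"), s"Value for $k contains ';', which this sketch does not escape")
    }

    // Port defaults to 5432; writing it out just makes the default visible.
    val withDefaults = if (props.contains("Port")) props else props + ("Port" -> "5432")

    // Stable order: required keys first, then the remaining keys alphabetically.
    val ordered = required ++ (withDefaults.keySet -- required).toSeq.sorted
    ordered.map(k => s"$k=${withDefaults(k)}").mkString("jdbc:alloydb:", ";", "")
  }
}
```

For example, `AlloyDBUrl.build(Map("Server" -> "127.0.0.1", "User" -> "alloydb", "Password" -> "admin", "Database" -> "alloydb"))` yields `jdbc:alloydb:Server=127.0.0.1;User=alloydb;Password=admin;Database=alloydb;Port=5432`, which can be passed as the `url` option when reading from Spark. The stable key order only keeps the output predictable; it is not a driver requirement that this sketch relies on.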

Authenticating with Standard Authentication

Standard authentication (using the user/password combination supplied earlier) is the default form of authentication.

No further configuration is required to connect using standard authentication.

Authenticating with pg_hba.conf Auth Schemes

There are additional methods of authentication available which must be enabled in the pg_hba.conf file on the AlloyDB server.

Refer to the AlloyDB server documentation for instructions on setting up authentication.

Authenticating with MD5 Authentication

This authentication method must be enabled by setting the auth-method in the pg_hba.conf file to md5.

Authenticating with SASL Authentication

This authentication method must be enabled by setting the auth-method in the pg_hba.conf file to scram-sha-256.

Authenticating with Kerberos

Kerberos authentication is initiated by the AlloyDB server when the driver attempts to connect to it. To use this method, set up Kerberos on the AlloyDB server. Once Kerberos authentication is set up on the server, see the Kerberos section of the help documentation for details on how to authenticate with Kerberos.

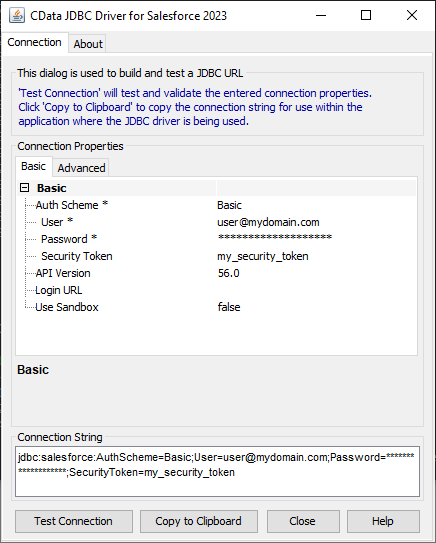

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the AlloyDB JDBC Driver. Either double-click the JAR file or execute it from the command line:

```
java -jar cdata.jdbc.alloydb.jar
```

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

Configure the connection to AlloyDB using the connection string generated above:

```
scala> val alloydb_df = spark.sqlContext.read.format("jdbc").option("url", "jdbc:alloydb:User=alloydb;Password=admin;Database=alloydb;Server=127.0.0.1;Port=5432").option("dbtable","Orders").option("driver","cdata.jdbc.alloydb.AlloyDBDriver").load()
```

Once you connect and the data is loaded, you will see the table schema displayed.
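The `url`, `dbtable`, and `driver` values above are ordinary options of Spark's built-in JDBC data source. As a sketch, assuming Spark 2.4 or later (where the JDBC source also accepts a `query` option in place of `dbtable`), the options could be built in one place as shown below. `AlloyDBReadOptions`, `Table`, and `Query` are hypothetical names for illustration only. Spark does not allow `dbtable` and `query` to be set together, so the helper emits exactly one of them.

```scala
// Hypothetical helper (not part of Spark or the CData driver) that builds the
// option map for spark.sqlContext.read.format("jdbc").options(...).
object AlloyDBReadOptions {
  // Driver class name as used in this article's example.
  val DriverClass: String = "cdata.jdbc.alloydb.AlloyDBDriver"

  // What to read: a whole table, or a SQL query sent through the driver.
  sealed trait Source
  final case class Table(name: String) extends Source
  final case class Query(sql: String) extends Source

  def apply(url: String, source: Source): Map[String, String] = {
    val base = Map("url" -> url, "driver" -> DriverClass)
    source match {
      // Equivalent to .option("dbtable", name) in the example above.
      case Table(name) => base + ("dbtable" -> name)
      // Spark rejects "dbtable" and "query" together, so only one key is emitted.
      case Query(sql) => base + ("query" -> sql)
    }
  }
}
```

In the shell, the map would be passed with `spark.sqlContext.read.format("jdbc").options(AlloyDBReadOptions(url, AlloyDBReadOptions.Query("SELECT ShipName, ShipCity FROM Orders WHERE ShipCountry = 'USA'"))).load()`. Reading through `Query` hands that SQL to the driver, which, per the description at the start of this article, can push supported filters down to AlloyDB.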

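Register the AlloyDB data as a temporary view. (DataFrame has no registerTable method; createOrReplaceTempView is the current API.)

```
scala> alloydb_df.createOrReplaceTempView("orders")
```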

Perform custom SQL queries against the data using commands like the one below. Note that string literals such as 'USA' must be quoted:

```
scala> alloydb_df.sqlContext.sql("SELECT ShipName, ShipCity FROM orders WHERE ShipCountry = 'USA'").collect.foreach(println)
```

You will see the results displayed in the console, similar to the following:

![Data in Apache Spark (Salesforce is shown)]()

Using the CData JDBC Driver for AlloyDB in Apache Spark, you can run fast, complex analytics on live AlloyDB data with the full power of Spark. Download a free, 30-day trial of any of the 200+ CData JDBC Drivers and get started today.