
How to work with JDBC-ODBC Bridge Data in Apache Spark using SQL

Access and process JDBC-ODBC Bridge Data in Apache Spark using the CData JDBC Driver.

Apache Spark is a fast and general engine for large-scale data processing. When paired with the CData JDBC Driver for JDBC-ODBC Bridge, Spark can work with live JDBC-ODBC Bridge data. This article describes how to connect to and query JDBC-ODBC Bridge data from a Spark shell.

The CData JDBC Driver is designed for fast interaction with live JDBC-ODBC Bridge data, thanks to optimized data processing built into the driver. When you issue complex SQL queries to JDBC-ODBC Bridge, the driver pushes supported SQL operations, like filters and aggregations, directly to JDBC-ODBC Bridge and uses the embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. With built-in dynamic metadata querying, you can work with and analyze JDBC-ODBC Bridge data using native data types.

Install the CData JDBC Driver for JDBC-ODBC Bridge

Download the CData JDBC Driver for JDBC-ODBC Bridge installer, unzip the package, and run the JAR file to install the driver.

Start a Spark Shell and Connect to JDBC-ODBC Bridge Data

- Open a terminal and start the Spark shell, passing the CData JDBC Driver for JDBC-ODBC Bridge JAR file as the jars parameter. Quote the path, since it contains spaces:

$ spark-shell --jars "/CData/CData JDBC Driver for JDBC-ODBC Bridge/lib/cdata.jdbc.jdbcodbc.jar"

- With the shell running, you can connect to JDBC-ODBC Bridge with a JDBC URL and use the SQL Context load() function to read a table.

To connect to an ODBC data source, specify either the DSN (data source name) or an ODBC connection string. For a connection string, set Driver and the connection properties for your ODBC driver.
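The two connection styles can be sketched as a small URL-building helper. This is a minimal illustration rather than part of the driver: `jdbcOdbcUrl` is a hypothetical function, `MyDSN` is a placeholder name, and the `DSN` property spelling and the trailing semicolon are assumptions. Only the `jdbc:jdbcodbc:` prefix and the `Driver` placeholder properties come from the connection example in this article.

```scala
// Hypothetical helper (not part of the CData driver): joins key=value pairs
// into a JDBC URL that starts with the "jdbc:jdbcodbc:" prefix.
def jdbcOdbcUrl(props: Seq[(String, String)]): String =
  props.map { case (key, value) => s"$key=$value" }
    .mkString("jdbc:jdbcodbc:", ";", ";")

// Option 1: point at a DSN already configured on the machine.
// "MyDSN" is a placeholder, and the "DSN" property name is an assumption.
val dsnUrl = jdbcOdbcUrl(Seq("DSN" -> "MyDSN"))

// Option 2: name the ODBC driver and pass its own connection properties.
// The names and values below are the placeholders used in this article.
val driverUrl = jdbcOdbcUrl(Seq(
  "Driver" -> "{ODBC_Driver_Name}",
  "Driver_Property1" -> "Driver_Value1"
))

println(dsnUrl)
println(driverUrl)
```

Either string could then be passed as the "url" option when reading a table. The connection string designer described next remains the safer way to get the exact property names for your ODBC driver.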

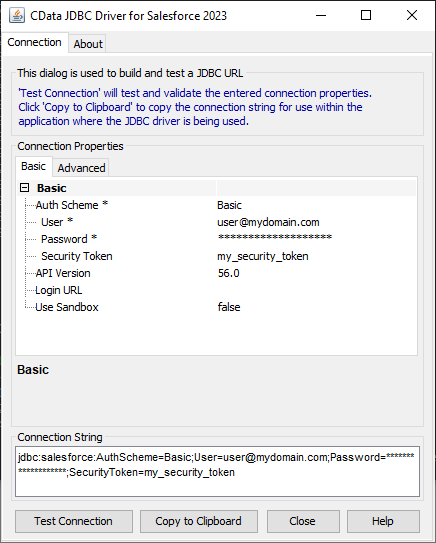

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the JDBC-ODBC Bridge JDBC Driver. Either double-click the JAR file or execute it from the command line.

java -jar cdata.jdbc.jdbcodbc.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

Configure the connection to JDBC-ODBC Bridge, using the connection string generated above.

scala> val jdbcodbc_df = spark.sqlContext.read.format("jdbc").option("url", "jdbc:jdbcodbc:Driver={ODBC_Driver_Name};Driver_Property1=Driver_Value1;Driver_Property2=Driver_Value2;...").option("dbtable","Account").option("driver","cdata.jdbc.jdbcodbc.JDBCODBCDriver").load()

- Once you connect and the data is loaded, you will see the table schema displayed.

Register the JDBC-ODBC Bridge data as a temporary view:

scala> jdbcodbc_df.createOrReplaceTempView("account")

- Perform custom SQL queries against the data using commands like the one below:

scala> jdbcodbc_df.sqlContext.sql("SELECT Id, Name FROM Account WHERE Id = 1").collect.foreach(println)

You will see the results displayed in the console, similar to the following:

![Data in Apache Spark (Salesforce is shown)]()
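If no ODBC source is at hand, the register-and-query steps can be tried against a stand-in. The sketch below swaps the JDBC read for a small in-memory DataFrame. The two rows and the names `session`, `accounts`, and `rows` are invented for illustration and do not come from any real Account table. It uses `createOrReplaceTempView`, the DataFrame method for registering a temporary view in Spark 2.0 and later.

```scala
import org.apache.spark.sql.SparkSession

// Inside spark-shell, getOrCreate() reuses the session the shell already started.
val session = SparkSession.builder()
  .appName("temp-view-sketch")
  .master("local[*]")
  .getOrCreate()

// Stand-in for the DataFrame loaded over JDBC; the rows are made-up sample data.
val accounts = session
  .createDataFrame(Seq((1, "Acme"), (2, "Globex")))
  .toDF("Id", "Name")

// Register the DataFrame under a name that SQL queries can reference.
accounts.createOrReplaceTempView("account")

// Run the same style of query as in the article and bring the rows to the driver.
val rows = session
  .sql("SELECT Id, Name FROM account WHERE Id = 1")
  .collect()

rows.foreach(println)
```

With a real connection, `accounts` would be the DataFrame returned by the JDBC load() call, and the rest of the steps would stay the same.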

Using the CData JDBC Driver for JDBC-ODBC Bridge in Apache Spark, you can perform fast and complex analytics on JDBC-ODBC Bridge data, combining the power and utility of Spark with your data. Download a free, 30-day trial of any of the 200+ CData JDBC Drivers and get started today.