ETL Azure Data Lake Storage in Oracle Data Integrator

This article shows how to transfer Azure Data Lake Storage data into a data warehouse using Oracle Data Integrator.

Leverage existing skills by using the JDBC standard to connect to Azure Data Lake Storage: Through drop-in integration into ETL tools like Oracle Data Integrator (ODI), the CData JDBC Driver for Azure Data Lake Storage connects real-time Azure Data Lake Storage data to your data warehouse, business intelligence, and Big Data technologies.

JDBC connectivity enables you to work with Azure Data Lake Storage just as you would any other database in ODI. As with an RDBMS, you can use the driver to connect directly to the Azure Data Lake Storage APIs in real time instead of working with flat files.

This article walks through a JDBC-based ETL -- Azure Data Lake Storage to Oracle. After reverse engineering a data model of Azure Data Lake Storage entities, you will create a mapping and select a data loading strategy -- since the driver supports SQL-92, this last step can easily be accomplished by selecting the built-in SQL to SQL Loading Knowledge Module.

Install the Driver

To install the driver, copy the driver JAR (cdata.jdbc.adls.jar) and .lic file (cdata.jdbc.adls.lic), located in the installation folder, into the appropriate ODI directory:

- UNIX/Linux without Agent: ~/.odi/oracledi/userlib

- UNIX/Linux with Agent: ~/.odi/oracledi/userlib and $ODI_HOME/odi/agent/lib

- Windows without Agent: %APPDATA%\odi\oracledi\userlib

- Windows with Agent: %APPDATA%\odi\oracledi\userlib and %APPDATA%\odi\agent\lib

Restart ODI to complete the installation.
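As a rough illustration of the directory choice above, the following Java sketch resolves the userlib path for a given platform and copies the two driver files into it. The class name OdiUserlib, its method names, and the installDir argument are hypothetical; only the file names and directory layouts come from the list above. The sketch assumes %APPDATA% already resolves to the user's AppData\Roaming folder and does not handle the additional agent directories.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.Locale;

// Hypothetical helper; not part of ODI or the CData driver.
final class OdiUserlib {

    /** Returns the ODI userlib directory (no agent) for the given OS name. */
    static Path userlibDir(String osName, String userHome, String appData) {
        boolean windows = osName.toLowerCase(Locale.ROOT).startsWith("windows");
        // Assumption: on Windows, appData is the value of %APPDATA%.
        Path base = windows ? Paths.get(appData, "odi") : Paths.get(userHome, ".odi");
        return base.resolve("oracledi").resolve("userlib");
    }

    /** Copies the driver JAR and license file from installDir into targetDir. */
    static void installDriver(Path installDir, Path targetDir) throws IOException {
        Files.createDirectories(targetDir);
        for (String name : new String[] {"cdata.jdbc.adls.jar", "cdata.jdbc.adls.lic"}) {
            Files.copy(installDir.resolve(name), targetDir.resolve(name),
                       StandardCopyOption.REPLACE_EXISTING);
        }
    }
}
```

A caller would typically pass System.getProperty("os.name"), System.getProperty("user.home"), and System.getenv("APPDATA") to userlibDir, then hand the result to installDriver together with the driver's installation folder.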

Reverse Engineer a Model

Reverse engineering the model retrieves metadata about the driver's relational view of Azure Data Lake Storage data. After reverse engineering, you can query real-time Azure Data Lake Storage data and create mappings based on Azure Data Lake Storage tables.

- In ODI, connect to your repository and click New -> Model and Topology Objects.

![Create a New Model]()

- On the Model screen of the resulting dialog, enter the following information:

- Name: Enter ADLS.

- Technology: Select Generic SQL (for ODI Version 12.2+, select Microsoft SQL Server).

- Logical Schema: Enter ADLS.

- Context: Select Global.

![Configuring the Model]()

- On the Data Server screen of the resulting dialog, enter the following information:

- Name: Enter ADLS.

- Driver List: Select Oracle JDBC Driver.

- Driver: Enter cdata.jdbc.adls.ADLSDriver

- URL: Enter the JDBC URL containing the connection string.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

For this, an Active Directory web application is required. You can create one as follows:

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the property for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key which will be used to authenticate the calls to the API. See the property for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
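Before pasting a URL into ODI, it can help to check that every property needed for the chosen schema is present. The sketch below is one way to do that in Java. The class AdlsRequiredProps and its missing method are hypothetical names, not part of the driver; the property names are taken from the two lists above. Directory is treated as optional here because the root directory is used when it is not specified.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical pre-flight check; the driver performs its own validation.
final class AdlsRequiredProps {

    static final List<String> GEN1 =
        List.of("Schema", "Account", "OAuthClientId", "OAuthClientSecret", "TenantId");
    static final List<String> GEN2 =
        List.of("Schema", "Account", "FileSystem", "AccessKey");

    /** Returns the required property names that are absent or blank. */
    static List<String> missing(Map<String, String> props) {
        String schema = props.get("Schema");
        List<String> required;
        if ("ADLSGen1".equals(schema)) {
            required = GEN1;
        } else if ("ADLSGen2".equals(schema)) {
            required = GEN2;
        } else {
            throw new IllegalArgumentException("Schema must be ADLSGen1 or ADLSGen2");
        }
        List<String> result = new ArrayList<>();
        for (String key : required) {
            String value = props.get(key);
            if (value == null || value.trim().isEmpty()) {
                result.add(key);
            }
        }
        return result;
    }
}
```

An empty result means all properties listed for that schema were supplied; it says nothing about whether the values themselves are valid.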

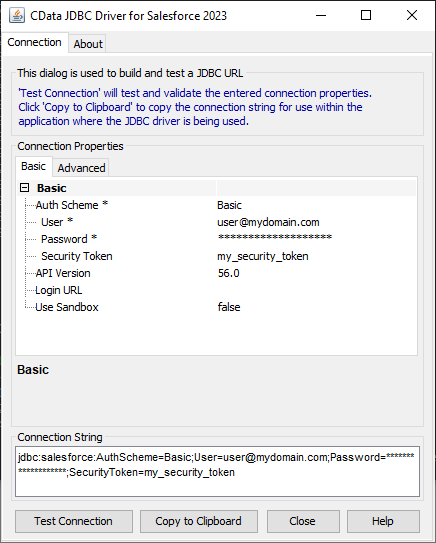

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Azure Data Lake Storage JDBC Driver. Either double-click the JAR file or execute the JAR file from the command line.

java -jar cdata.jdbc.adls.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

Below is a typical connection string:

jdbc:adls:Schema=ADLSGen2;Account=myAccount;FileSystem=myFileSystem;AccessKey=myAccessKey;InitiateOAuth=GETANDREFRESH
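A connection string like the one above can also be assembled and tried outside ODI. The following Java sketch builds a Gen 2 URL from its parts and opens a connection with the standard java.sql.DriverManager API. The class AdlsJdbcUrl and its methods are hypothetical helpers; the jdbc:adls: prefix, the property names, and the driver class cdata.jdbc.adls.ADLSDriver come from this article. The sketch assumes property values contain no semicolons, and the open method only works when the driver JAR is on the classpath.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper for assembling the JDBC URL shown in this article.
final class AdlsJdbcUrl {

    /** Joins properties as key=value pairs separated by semicolons. */
    static String build(Map<String, String> props) {
        StringJoiner joiner = new StringJoiner(";", "jdbc:adls:", "");
        for (Map.Entry<String, String> entry : props.entrySet()) {
            joiner.add(entry.getKey() + "=" + entry.getValue());
        }
        return joiner.toString();
    }

    /** Returns the Gen 2 properties in the order used by the sample string. */
    static Map<String, String> gen2(String account, String fileSystem, String accessKey) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Schema", "ADLSGen2");
        props.put("Account", account);
        props.put("FileSystem", fileSystem);
        props.put("AccessKey", accessKey);
        return props;
    }

    /** Loads the driver class named in this article and opens a connection. */
    static Connection open(String url) throws SQLException, ClassNotFoundException {
        Class.forName("cdata.jdbc.adls.ADLSDriver");
        return DriverManager.getConnection(url);
    }
}
```

Further properties, such as the InitiateOAuth setting in the sample string, can be added to the map with put before calling build.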

![Configuring the Data Server]()

- On the Physical Schema screen, enter the following information:

- Name: Select from the drop-down menu.

- Database (Catalog): Enter CData.

- Owner (Schema): If you select a Schema for Azure Data Lake Storage, enter the Schema selected; otherwise, enter ADLS.

- Database (Work Catalog): Enter CData.

- Owner (Work Schema): If you select a Schema for Azure Data Lake Storage, enter the Schema selected; otherwise, enter ADLS.

![Configuring the Physical Schema]()

- In the opened model, click Reverse Engineer to retrieve the metadata for Azure Data Lake Storage tables.

![Reverse Engineer the Model]()

Edit and Save Azure Data Lake Storage Data

After reverse engineering, you can work with Azure Data Lake Storage data in ODI.

To view Azure Data Lake Storage data, expand the Models accordion in the Designer navigator, right-click a table, and click View data.

Create an ETL Project

Follow the steps below to create an ETL from Azure Data Lake Storage. You will load Resources entities into the sample data warehouse included in the ODI Getting Started VM.

- Open SQL Developer and connect to your Oracle database. Right-click the node for your database in the Connections pane and click New SQL Worksheet.

Alternatively, you can use SQL*Plus. From a command prompt, enter the following:

sqlplus / as sysdba

- Enter the following query to create a new target table in the sample data warehouse, which is in the ODI_DEMO schema. The following query defines a few columns that match the Resources table in Azure Data Lake Storage:

CREATE TABLE ODI_DEMO.TRG_RESOURCES (PERMISSION NUMBER(20,0), FullPath VARCHAR2(255));

- In ODI, expand the Models accordion in the Designer navigator and double-click the Sales Administration node in the ODI_DEMO folder. The model is opened in the Model Editor.

- Click Reverse Engineer. The TRG_RESOURCES table is added to the model.

- Right-click the Mappings node in your project and click New Mapping. Enter a name for the mapping and clear the Create Empty Dataset option. The Mapping Editor is displayed.

- Drag the TRG_RESOURCES table from the Sales Administration model onto the mapping.

- Drag the Resources table from the Azure Data Lake Storage model onto the mapping.

- Click the source connector point and drag to the target connector point. The Attribute Matching dialog is displayed. For this example, use the default options. The target expressions are then displayed in the properties for the target columns.

- Open the Physical tab of the Mapping Editor and click RESOURCES_AP in TARGET_GROUP.

- In the RESOURCES_AP properties, select LKM SQL to SQL (Built-In) on the Loading Knowledge Module tab.

![SQL-based access to Azure Data Lake Storage enables you to use standard database-to-database knowledge modules.]()

You can then run the mapping to load Azure Data Lake Storage data into Oracle.
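To make the idea of SQL-to-SQL loading more concrete, the Java sketch below shows the general pattern of reading rows from one JDBC connection and writing them to another with batched inserts. It is not the code that ODI's knowledge module generates; the class SqlToSqlSketch, its methods, and the batch size of 500 are illustrative assumptions, and it presumes the listed columns exist under the same names in both the source and target tables.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Collections;
import java.util.List;

// Illustrative only; ODI generates and runs its own loading code.
final class SqlToSqlSketch {

    static String selectSql(String table, List<String> columns) {
        return "SELECT " + String.join(", ", columns) + " FROM " + table;
    }

    static String insertSql(String table, List<String> columns) {
        String marks = String.join(", ", Collections.nCopies(columns.size(), "?"));
        return "INSERT INTO " + table + " (" + String.join(", ", columns)
            + ") VALUES (" + marks + ")";
    }

    /** Copies the listed columns from sourceTable to targetTable; returns the row count. */
    static int copy(Connection source, Connection target, String sourceTable,
                    String targetTable, List<String> columns) throws SQLException {
        int rows = 0;
        try (Statement select = source.createStatement();
             ResultSet rs = select.executeQuery(selectSql(sourceTable, columns));
             PreparedStatement insert = target.prepareStatement(insertSql(targetTable, columns))) {
            while (rs.next()) {
                for (int i = 1; i <= columns.size(); i++) {
                    insert.setObject(i, rs.getObject(i));
                }
                insert.addBatch();
                if (++rows % 500 == 0) {
                    insert.executeBatch(); // flush every 500 rows (arbitrary size)
                }
            }
            insert.executeBatch(); // flush any remaining rows
        }
        return rows;
    }
}
```

For the example in this article, the call would look something like copy(adlsConnection, oracleConnection, "Resources", "ODI_DEMO.TRG_RESOURCES", List.of("PERMISSION", "FullPath")), where both connections are opened by the caller.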