How to Work with Azure Data Lake Storage Data in ETL Validator JDBC

Connect to Azure Data Lake Storage from ETL Validator jobs using the CData JDBC Driver.

ETL Validator provides data movement and transformation capabilities for integrating data platforms across your organization. CData's JDBC driver seamlessly integrates with ETL Validator and extends its native connectivity to include Azure Data Lake Storage data.

This tutorial walks through building a simple ETL Validator data flow that extracts data from Azure Data Lake Storage and loads it into an example data storage solution: SQL Server.

Add a new ETL Validator data source via CData

CData extends ETL Validator's data connectivity capabilities by providing the ability to add data sources that connect via CData's JDBC drivers. Connecting to Azure Data Lake Storage data simply requires creating a new data source in ETL Validator through CData's connectivity suite, as described below.

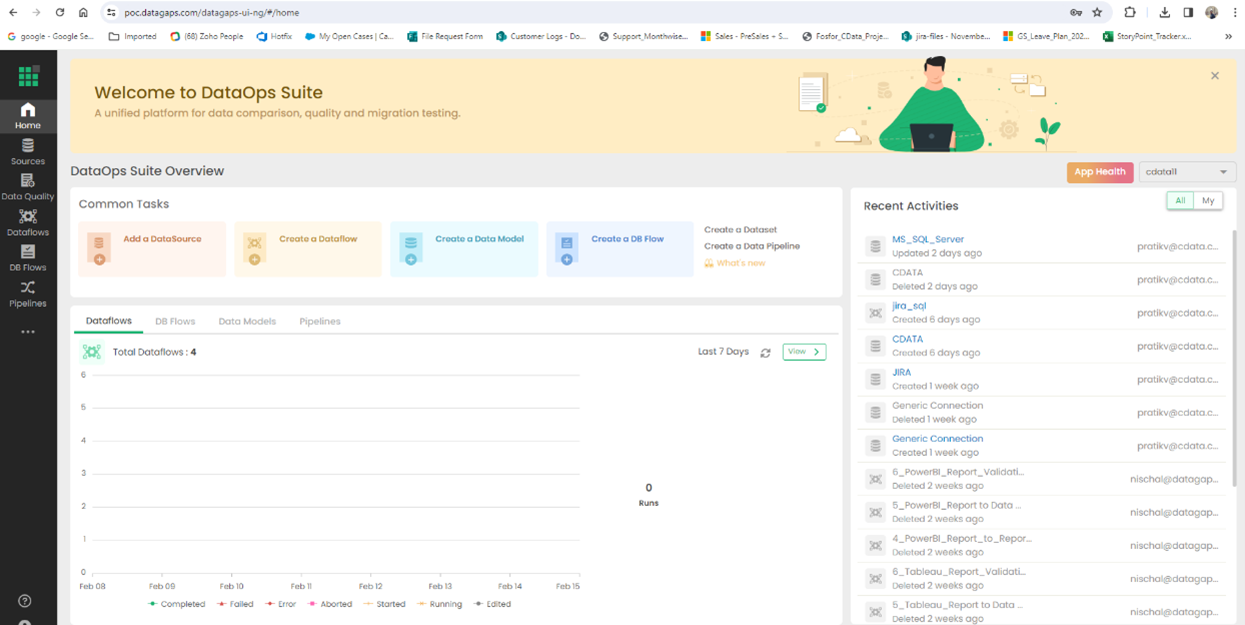

Log in to ETL Validator

Begin by logging into ETL Validator to view the application dashboard.

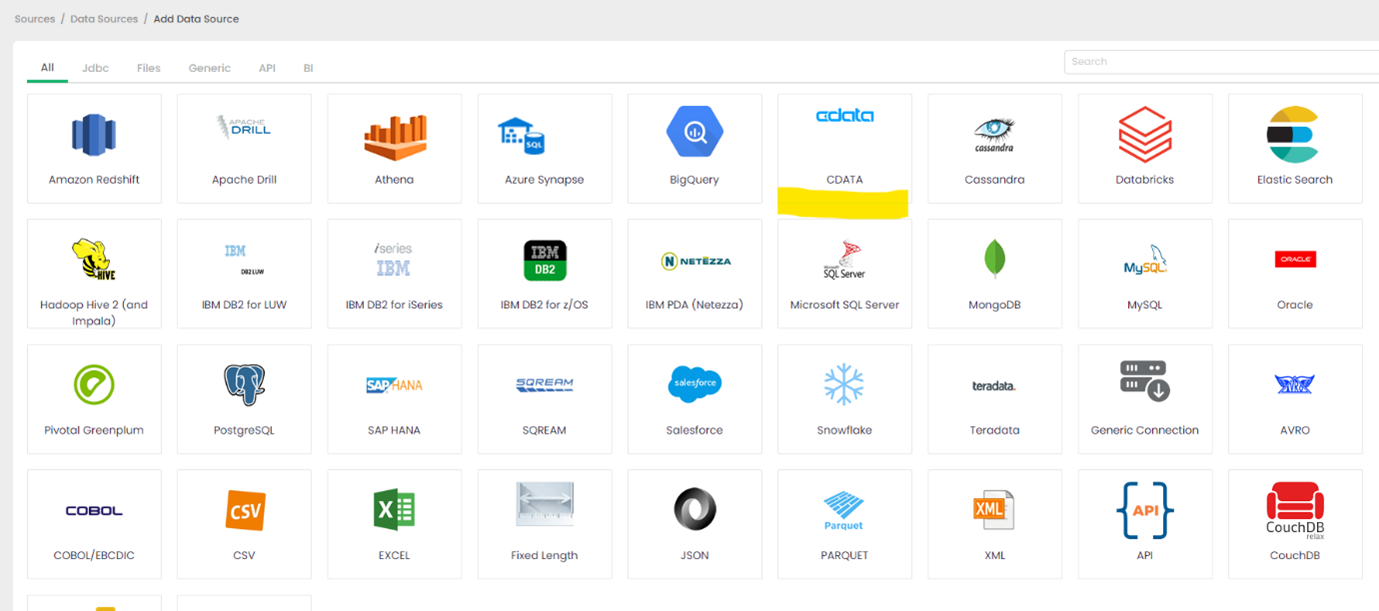

Click on Add a DataSource

CData extends the data source options within ETL Validator.

Click on CData

CData's connectivity is embedded within ETL Validator's data source options.

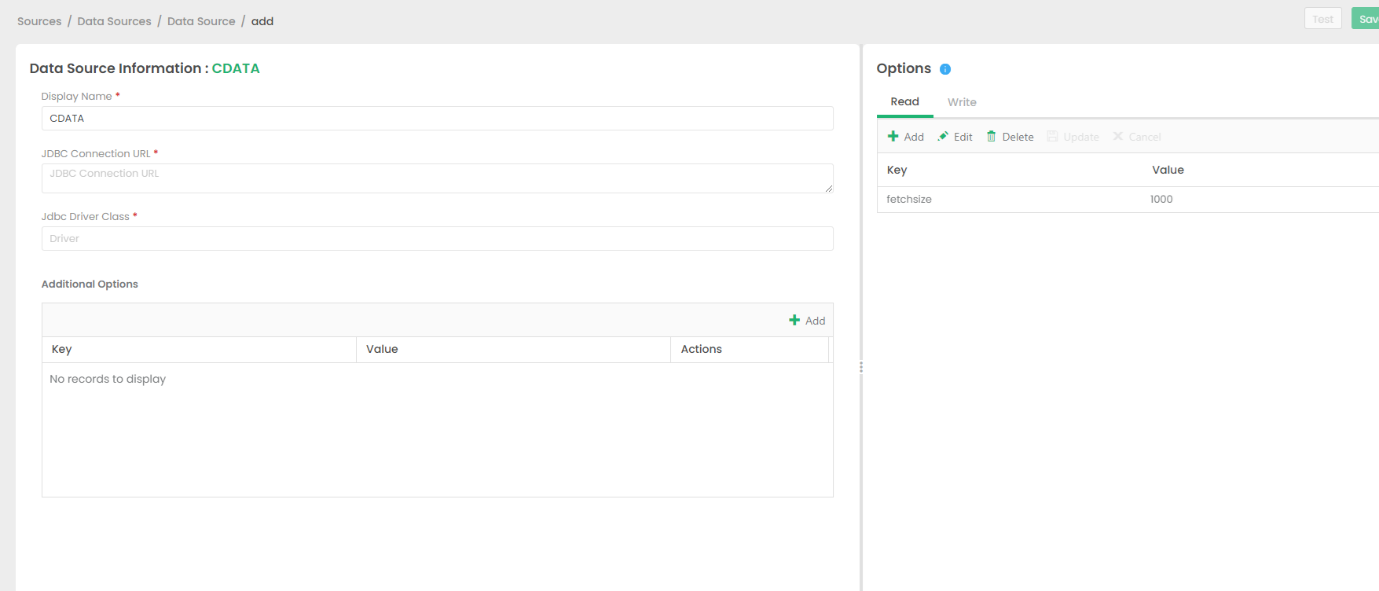

Configure the CData Driver Connection String

You will need a JDBC connection string to establish a connection to Azure Data Lake Storage in ETL Validator.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

For this, an Active Directory web application is required.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key which will be used to authenticate the calls to the API. See the AccessKey property in the driver documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
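The required properties in the two lists above can be checked before a connection string is assembled. The following is a minimal sketch, not part of the CData driver: the class `AdlsPropertyCheck` and its methods are hypothetical names chosen for illustration, and `Directory` is treated as optional because the root directory is used when it is omitted. It assumes Java 9 or later for `List.of` and `Map.of`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not an API of the CData JDBC driver.
final class AdlsPropertyCheck {

    // Required property names per schema, taken from the lists in this article.
    static List<String> requiredFor(String schema) {
        switch (schema) {
            case "ADLSGen1":
                return List.of("Schema", "Account", "OAuthClientId",
                               "OAuthClientSecret", "TenantId");
            case "ADLSGen2":
                return List.of("Schema", "Account", "FileSystem", "AccessKey");
            default:
                throw new IllegalArgumentException("Unknown schema: " + schema);
        }
    }

    // Returns the required property names that are absent or blank.
    static List<String> missing(Map<String, String> props) {
        String schema = props.get("Schema");
        if (schema == null) {
            return List.of("Schema");
        }
        List<String> result = new ArrayList<>();
        for (String name : requiredFor(schema)) {
            String value = props.get(name);
            if (value == null || value.trim().isEmpty()) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Placeholder values; AccessKey is deliberately left out.
        Map<String, String> props = Map.of(
                "Schema", "ADLSGen2",
                "Account", "myAccount",
                "FileSystem", "myFileSystem");
        System.out.println("Missing: " + missing(props)); // prints "Missing: [AccessKey]"
    }
}
```

A check like this only confirms that the expected keys are present; whether the values are valid is still determined when ETL Validator tests the connection.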

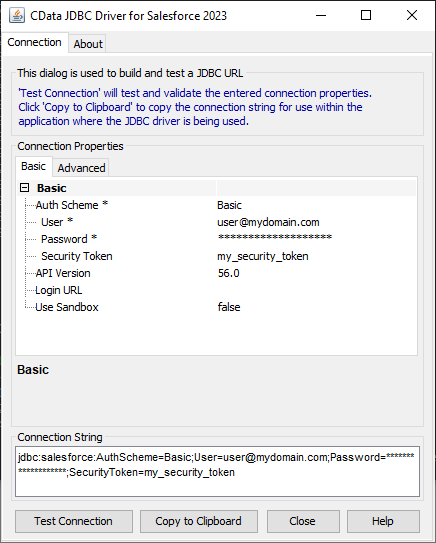

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Azure Data Lake Storage JDBC Driver. Either double-click the JAR file or execute it from the command line.

java -jar cdata.jdbc.adls.jar

A typical connection string looks like this:

jdbc:adls:Schema=ADLSGen2;Account=myAccount;FileSystem=myFileSystem;AccessKey=myAccessKey;InitiateOAuth=GETANDREFRESH
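If the connection string is generated by a script rather than the designer, it can be assembled from key/value pairs. The sketch below is an illustration under stated assumptions: `AdlsJdbcUrl` is a hypothetical class name, the values are the placeholders used in this article, and only the four Gen 2 properties listed earlier are included. It does not escape special characters, so values containing a semicolon would need extra handling.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper for illustration; not an API of the CData JDBC driver.
final class AdlsJdbcUrl {

    // Joins the properties as Key=Value pairs separated by semicolons,
    // prefixed with the jdbc:adls: scheme shown in this article.
    static String build(Map<String, String> props) {
        StringJoiner joiner = new StringJoiner(";", "jdbc:adls:", "");
        for (Map.Entry<String, String> entry : props.entrySet()) {
            joiner.add(entry.getKey() + "=" + entry.getValue());
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        // LinkedHashMap keeps the insertion order of the properties.
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Schema", "ADLSGen2");
        props.put("Account", "myAccount");
        props.put("FileSystem", "myFileSystem");
        props.put("AccessKey", "myAccessKey");
        System.out.println(build(props));
    }
}
```

Outside ETL Validator, a URL built this way could be passed to the standard `java.sql.DriverManager.getConnection(String)` method, provided the driver JAR and its license file are on the classpath.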

Licensing the Driver

To ensure the JDBC driver is licensed appropriately, copy the license file to the appropriate location:

Copy the JDBC Driver for Azure Data Lake Storage JAR and .lic file from "C:\Program Files\CData[product_name]\lib" to "C:\Datagaps\ETLValidator\Server\apache-tomcat\bin":

- cdata.jdbc.adls.jar

- cdata.jdbc.adls.lic

Note: If you do not copy the .lic file with the jar, you will see a licensing error that indicates you do not have a valid license installed. This is true for both the trial and full versions.
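Because a missing .lic file only surfaces later as a licensing error, it can help to confirm that both files are in place after copying. The following is a minimal sketch: `DriverFilesCheck` is a hypothetical class name, and the default directory is the Tomcat path given above, which may differ in your installation.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of ETL Validator or the CData driver.
final class DriverFilesCheck {

    // The two files this article says must sit side by side.
    static final List<String> EXPECTED =
            List.of("cdata.jdbc.adls.jar", "cdata.jdbc.adls.lic");

    // Returns the expected file names that are not present in the directory.
    static List<String> missingFiles(Path dir) {
        List<String> missing = new ArrayList<>();
        for (String name : EXPECTED) {
            if (!Files.isRegularFile(dir.resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Assumed default: the target directory named in this article.
        String defaultDir = "C:\\Datagaps\\ETLValidator\\Server\\apache-tomcat\\bin";
        Path dir = Paths.get(args.length > 0 ? args[0] : defaultDir);
        List<String> missing = missingFiles(dir);
        if (missing.isEmpty()) {
            System.out.println("Driver JAR and license file found in " + dir);
        } else {
            System.out.println("Missing from " + dir + ": " + missing);
        }
    }
}
```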

Save the connection

If you encounter any difficulties loading the CData JDBC driver class, contact the Datagaps team for instructions on how to load the JAR file for the relevant driver.

Add SQL Server as a Target

This example uses SQL Server as the destination for Azure Data Lake Storage data, but any preferred destination can be used instead.

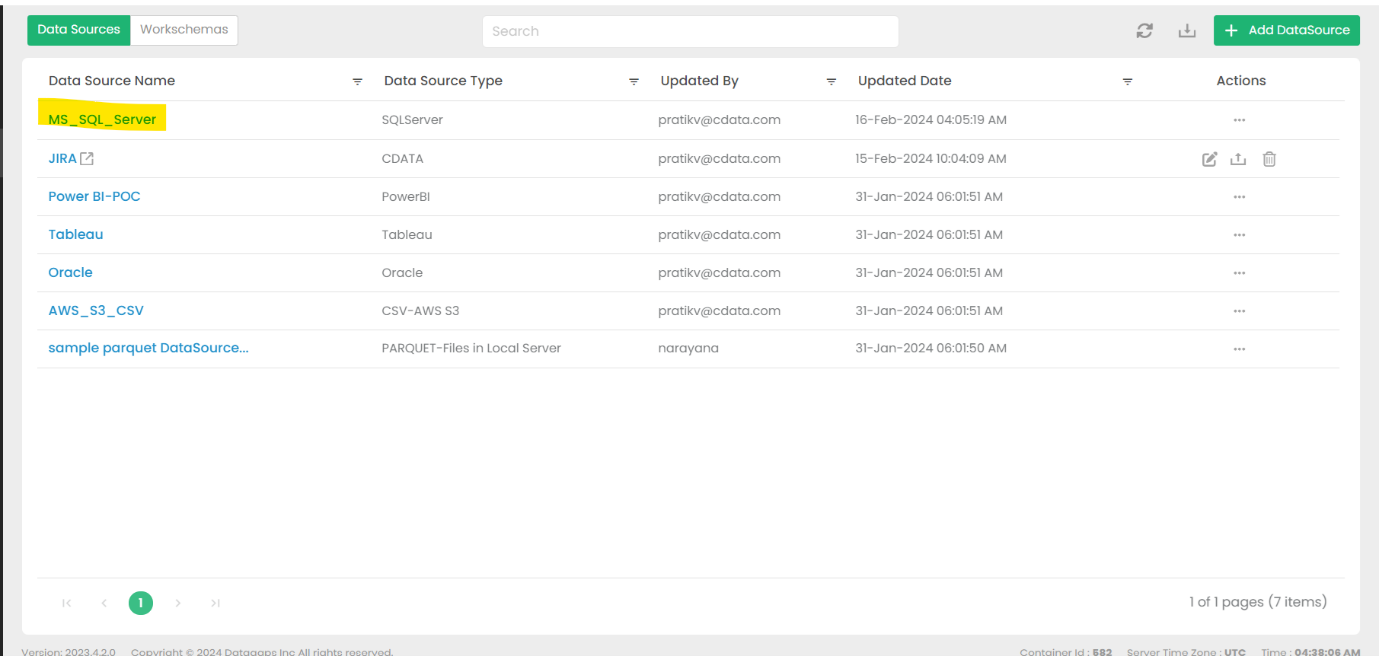

Go to DataSources and select MS_SQL_SERVER

This option is the default.

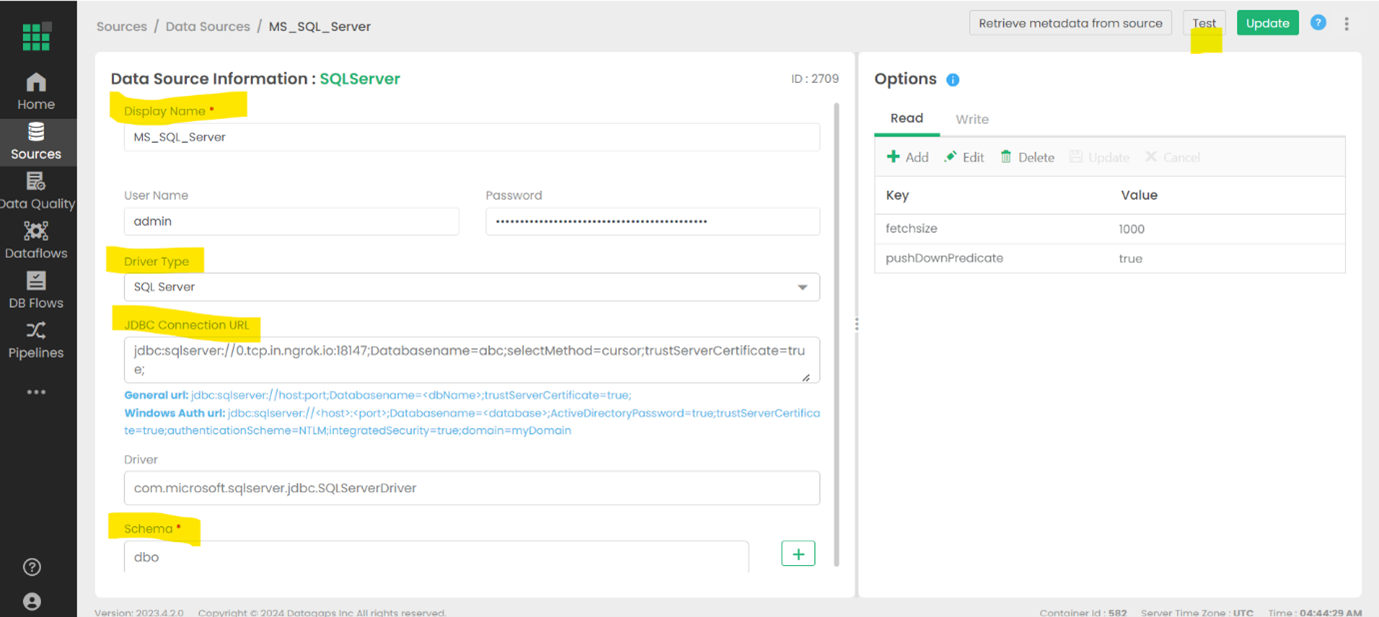

Fill in the necessary connection details and test the connection

The details depend on the specific target, but they may include a URL, authentication credentials, etc.

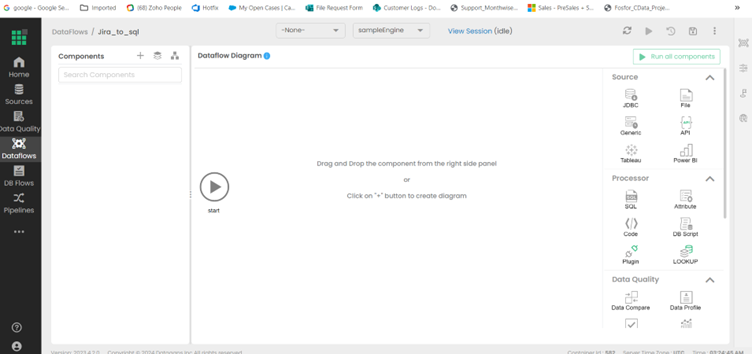

Create a Dataflow in ETL Validator

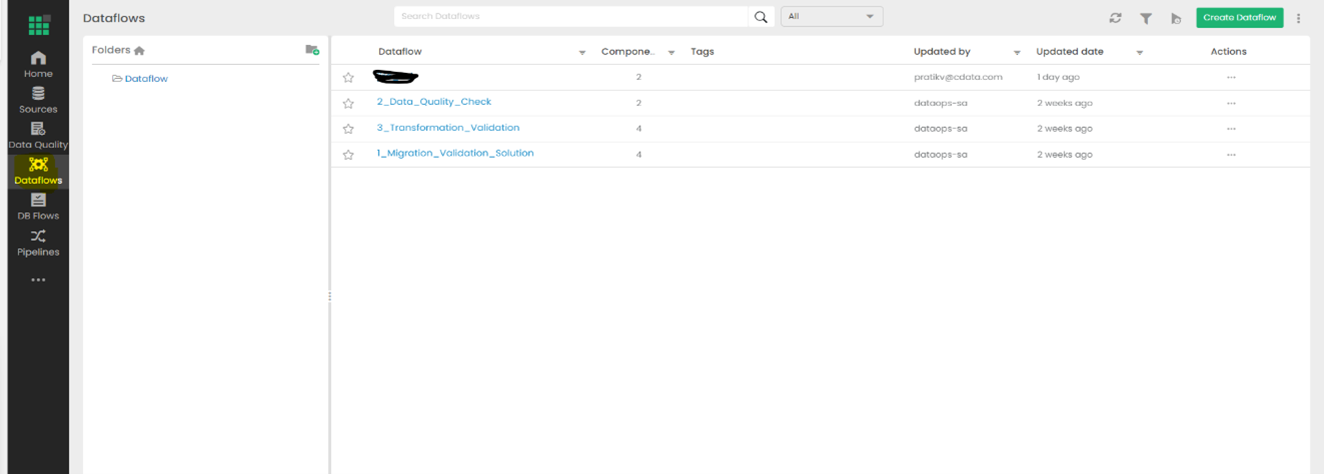

Open the Dataflows tab

Configured data flows will appear in this window.

Select Create Dataflow

Name your new dataflow and save it.

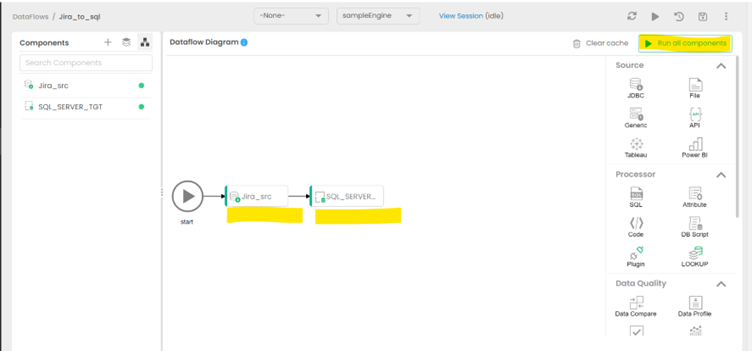

Open the Dataflow to view the Dataflow Diagram

The details of the data movement will be configured in this panel.

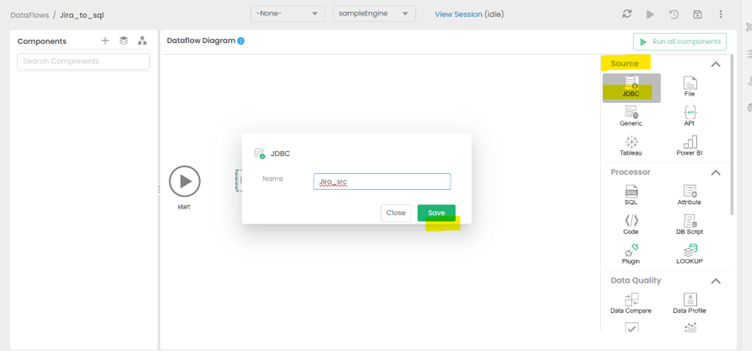

Drag & drop JDBC as a source from the right side

Give the new source an appropriate name and save it.

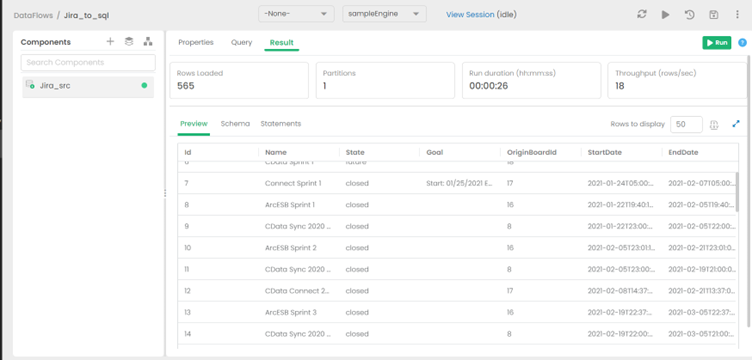

Fill in the Query section of the new source

Select the Table from the Schema option that reflects which data should be pulled from Azure Data Lake Storage.

View the expected results of your query

The anticipated outcome of the configured query is displayed in the Result tab.

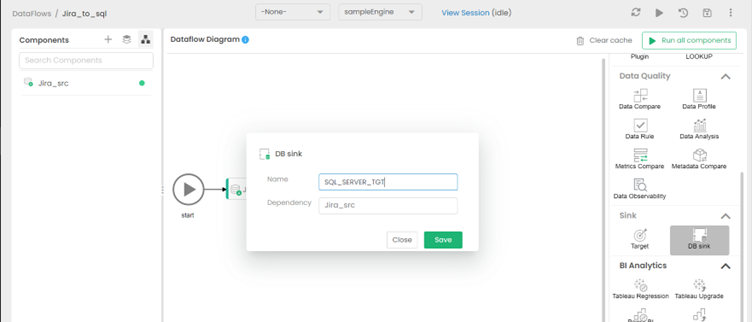

Add the destination to the Dataflow

Select Switch to Diagram, then drag & drop the DB Sink as a target from the right side (under Sink options). Give the sink an appropriate name and save it.

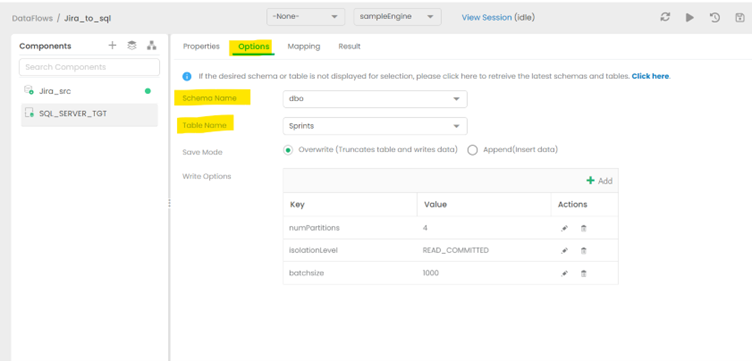

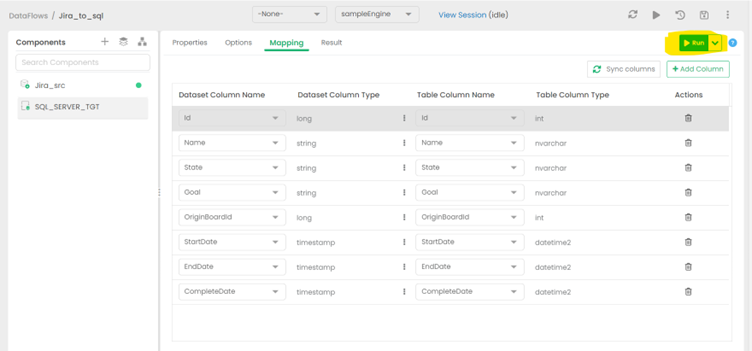

Set the appropriate Schema for the destination

Choose the Schema and table that match the structure of the source table. For this example, the table on the target side was created to match the source so that data flows seamlessly. More advanced schema transformation operations are beyond the scope of this article.
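Since the example relies on the target table matching the source, a quick comparison of column names and types can catch mismatches before the job runs. The sketch below is only an illustration: `SchemaDiff` is a hypothetical class, and the column names and types are made up. In practice, the two maps could be filled from the standard JDBC `DatabaseMetaData.getColumns` call on each connection.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not part of ETL Validator.
final class SchemaDiff {

    // Compares two column-name -> type-name maps and describes each difference.
    static List<String> differences(Map<String, String> source,
                                    Map<String, String> target) {
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, String> col : source.entrySet()) {
            String targetType = target.get(col.getKey());
            if (targetType == null) {
                out.add("missing in target: " + col.getKey());
            } else if (!targetType.equalsIgnoreCase(col.getValue())) {
                out.add("type mismatch: " + col.getKey()
                        + " (" + col.getValue() + " vs " + targetType + ")");
            }
        }
        for (String name : target.keySet()) {
            if (!source.containsKey(name)) {
                out.add("extra in target: " + name);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        // Made-up columns, used only to show the comparison.
        Map<String, String> source = new LinkedHashMap<>();
        source.put("Id", "INT");
        source.put("Name", "VARCHAR");
        Map<String, String> target = new LinkedHashMap<>();
        target.put("Id", "INT");
        target.put("Name", "NVARCHAR");
        for (String line : differences(source, target)) {
            System.out.println(line);
        }
    }
}
```

An empty result means the two tables have the same column names and type names; it says nothing about lengths, nullability, or keys.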

Hit the RUN option to begin replication

Running the job will take some time.

View the finished Dataflow

Return to the diagram to see the finished data replication job from Azure Data Lake Storage data to SQL Server.

Get Started Today

Download a free, 30-day trial of the CData JDBC Driver for Azure Data Lake Storage and start building Azure Data Lake Storage-connected applications with ETL Validator. Reach out to our Support Team if you have any questions.